From Words to Worlds: The Rise of Physical AI

How Meta's V-JEPA 2 is teaching AI to think before it acts (and what that means for robotics)

For years, artificial intelligence has lived in a world of symbols. It translated text, summarized documents, and generated images on command. But hand it a wrench and ask it to turn a bolt? That's where things fell apart.

The next evolution in AI is unfolding around a deceptively simple idea: giving machines an internal understanding of how the physical world actually works. Not through explicit programming, but through something more intuitive—something closer to human common sense.

It's called a world model. And Meta's newly released V-JEPA 2 might be the clearest signal yet that we're moving from AI that processes information to AI that navigates reality.

What World Models Really Mean for AI

Think of world models as internal physics simulators. They help AI systems predict how a scene will change when an action is taken—the same way humans do instinctively.

When you reach for a coffee mug, you automatically adjust your grip based on its apparent weight and shape. When a ball rolls under a couch, you know it didn't vanish—it simply moved out of view. These aren't conscious calculations. They're predictions built from lived experience.

Most AI systems haven't had that luxury. They've treated the world as static input—pixel arrays to classify or text to parse—not as dynamic environments they could mentally simulate or act within.

But if you want AI to operate physical machinery, navigate unpredictable terrain, or handle delicate objects, that internal simulation becomes essential. That's the gap world models are designed to fill.

V-JEPA 2: Learning Physics Through Observation, Not Instruction

Meta's V-JEPA 2 (Video Joint Embedding Predictive Architecture) represents a fundamental shift in how AI learns about the physical world. Instead of requiring armies of human annotators to label video frames or hand-code object behaviors, Meta's team took a radically different approach: they fed the model over one million hours of unlabeled internet video.

No captions. No bounding boxes. No explicit instruction. Just pure observation.

From this massive corpus, V-JEPA 2 began developing the same intuitions humans learn in childhood: how objects move, fall, bounce, and interact. But rather than memorizing pixel patterns, it learned to understand high-level dynamics—the abstract rules that govern physical behavior.

The Two-Stage Training Revolution

V-JEPA 2's power comes from its elegant two-stage approach:

Stage 1: Building Physics Intuition

The model watches millions of hours of general video content, passively absorbing how the physical world behaves. This creates its foundational understanding of gravity, momentum, object persistence, and cause-and-effect relationships.

Stage 2: Connecting Actions to Outcomes

A much smaller dataset—just 62 hours of robotic control data—teaches the model how specific actions (grasp, push, rotate) affect objects in real scenes.

The result is an AI that doesn't just see—it anticipates. It can predict the consequences of actions, even in environments it has never encountered before.

The Holy Grail: Zero-Shot Physical Intelligence

Here's where V-JEPA 2 breaks new ground. Traditional robotics has been trapped in a frustrating cycle: impressive demos that work only in highly controlled conditions. A robot learns to flip pancakes, but only with that specific spatula, that exact stove, those particular pancakes.

V-JEPA 2 escapes this trap through what researchers call "zero-shot generalization." Because it operates in an abstract embedding space rather than trying to predict exact pixel values, it can transfer its knowledge across different robots, objects, and environments without retraining.

Here's a concrete example: Train V-JEPA 2 on videos of people picking up coffee mugs in kitchens. Deploy it on a warehouse robot that's never seen a coffee mug. The robot can still successfully pick up the mug because it understands the underlying physics of grasping cylindrical objects—not just the specific pixels of the training videos.

This is the robotics equivalent of "write once, run anywhere"—except now it's "train once, manipulate anything."

Why This Signals the Physical AI Revolution

This breakthrough represents more than incremental progress. It marks AI's transition from digital pattern recognition to embodied intelligence—what the industry is starting to call Physical AI.

Physical AI describes systems that operate in the real world with human-like flexibility and adaptability. Not perfectly, but well enough to be genuinely useful across unpredictable situations.

The timing is crucial (as discussed in a previous issue). Several forces are converging:

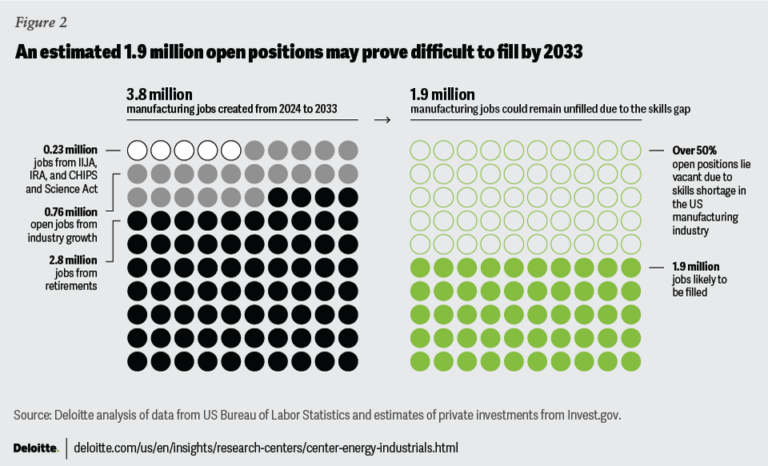

Labor shortages in manufacturing, logistics, and agriculture

Massive investment in humanoid and mobile robotics

Rising demand for flexible automation that can adapt to changing conditions

V-JEPA 2's approach promises to unlock these markets by giving machines the ability to understand and act in unstructured environments—the messy, unpredictable settings where most real work actually happens.

What This Means for Business Leaders

If your organization builds systems that interact with the physical world, this shift has immediate implications:

Dramatically Reduced Data Requirements

Self-supervised world models like V-JEPA 2 eliminate the need for massive hand-labeled datasets. Instead of annotating thousands of hours of robot footage, you can leverage the model's pre-trained physics understanding.

Cost-Effective Deployment

At 1.2 billion parameters, V-JEPA 2 runs efficiently on a single GPU. This enables real-time control at the edge without sending video streams to the cloud—crucial for applications requiring split-second responses.

Hardware-Agnostic Intelligence

Rather than training separate models for each robot and environment, you can train one general model and deploy it across different hardware platforms and use cases.

Faster Time-to-Market

This moves robotics toward the software-defined paradigm that cloud teams already understand: abstract the complexity, build once, deploy everywhere.

The Road Ahead: From Lab to Factory Floor

World models represent the missing link between AI perception and intelligent action. They enable machines to think ahead—to simulate potential outcomes before committing to actions.

This capability unlocks applications that were previously impractical: autonomous systems in agriculture that adapt to crop variations, warehouse robots that handle unexpected package types, disaster response drones that navigate debris-filled environments.

V-JEPA 2 proves that the blueprint for Physical AI isn't hypothetical anymore. It's real, it works, and it's available for experimentation today.

The Strategic Question

The question for business leaders isn't whether robots will get smarter—V-JEPA 2 and similar models make that trajectory clear. The question is when your industry will need to start planning around that reality.

Because the next frontier in AI won't be typed into a chatbot. It will be built, deployed, and set into motion in the physical world where your business operates.

The companies that recognize this shift early—and begin experimenting with world models and Physical AI today—will have a significant advantage when these technologies mature from research projects into market-ready solutions.

Start small: pilot V-JEPA 2 on a contained automation challenge in your operations. Build internal expertise with the open-source tools Meta has released. Identify which of your current manual processes could benefit from adaptive, self-supervised robotics.

The window for experimentation is open now. The window for competitive advantage won't stay open forever.