Why the Future of AI Is Local, Not Cloud-Based

How edge AI is redefining performance, architecture, and the semiconductor landscape

Over the past decade, artificial intelligence has been defined by the cloud. Complex models—spanning everything from computer vision to language understanding—have relied on massive data centers powered by GPUs and specialized AI accelerators. But that era is entering a new phase.

A growing share of AI workloads is moving from centralized compute environments to the edge—on-device, local, and always on. This shift is being driven by advances in semiconductor design, the proliferation of neural network architectures optimized for inference, and increasing demand for privacy, responsiveness, and autonomy.

Edge AI is no longer a peripheral use case. It is becoming the default.

Whether it's in smartphones, vehicles, industrial systems, or consumer electronics, AI is being embedded into the physical world at scale. As detailed in the SHD Group’s 2025 Edge-AI Report, this transition is already reshaping chip design, model development, and software infrastructure—bringing new challenges and even greater opportunities across the ecosystem.

Key Takeaways

Edge-AI SoC Market Growth: Unit shipments are forecast to grow from 2.2 billion in 2024 to 8.7 billion by 2030, with revenues projected to triple from $32.4B to $102.9B.

CNNs Still Dominate, Transformers Rising: Convolutional neural networks remain central to current edge deployments, while transformer support is expanding to enable future multimodal and agentic applications.

Multimodal and Agentic AI: Devices are being re-architected to support AI agents that integrate vision, audio, and context-awareness—particularly in mobile and consumer environments.

SIP Market Expansion: AI-focused semiconductor IP (SIP) is expected to grow from $633M in 2024 to $2.2B by 2030, with NPU IP leading adoption thanks to its role in accelerating inference.

Shift in Performance Metrics: Traditional metrics like TOPS are becoming less meaningful; energy per inference, software stack maturity, and real-time latency now define competitive differentiation.

SoC Design Complexity: Average gate counts are projected to rise 3.6× between 2025 and 2030, increasing pressure on integration, power efficiency, and hardware/software co-development.

From Cloud Titans to Tiny SoCs

The accelerating growth in Edge-AI SoC shipments is not just a story of volume—it’s a signal that intelligence is being woven into the fabric of every connected product.

The report’s projected tripling of Edge-AI revenues to more than $100B by 2030 isn’t driven by one segment; it’s distributed across smartphones, industrial sensors, wearables, and consumer electronics. That growth is fueled by three interlocking forces: performance-per-watt improvements in silicon, increasingly AI-native product experiences, and demand for privacy-preserving computation at the edge.

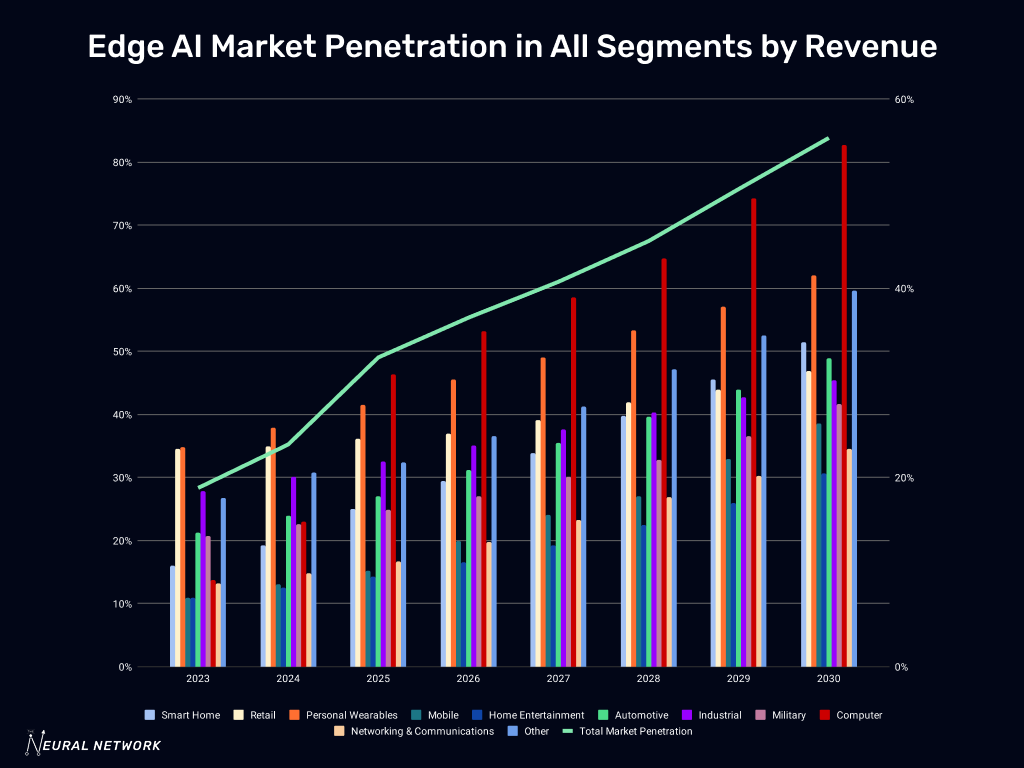

This shift is reshaping SoC architecture. The report forecasts that AI SoCs will account for 55.9% of SoC market revenue by 2030, up from 23.5% in 2024. That implies embedded AI is not just being added to products; it is becoming the defining feature of next-generation hardware platforms.

In other words, the SoC is no longer just the engine of the product; it’s becoming the brain.

CNNs Still Rule, But Transformers Are Knocking

If you’re designing for the edge today, you’re still thinking in convolutional neural networks. CNNs are the workhorses, from ResNet to MobileNet to YOLO, and edge silicon is heavily optimized for them. They account for the vast majority of real-world edge deployments, particularly in vision systems.

Survey data backs this up: CNNs remain the most commonly supported and deployed network type, thanks to their efficiency and maturity.
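Part of that efficiency is architectural. MobileNet, for example, replaces standard convolutions with depthwise-separable ones: a per-channel k×k convolution followed by a 1×1 pointwise convolution. The minimal sketch below counts multiply-accumulates (MACs) for both forms of a single layer. The layer shape and the `ConvLayer` helper are hypothetical illustrations, not figures from the report, and the count ignores bias, padding, and activation cost.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConvLayer:
    h_out: int  # output height
    w_out: int  # output width
    c_in: int   # input channels
    c_out: int  # output channels
    k: int      # square kernel size


def standard_conv_macs(layer: ConvLayer) -> int:
    """MACs for a standard k x k convolution: every output channel sees every input channel."""
    return layer.h_out * layer.w_out * layer.c_in * layer.c_out * layer.k * layer.k


def depthwise_separable_macs(layer: ConvLayer) -> int:
    """MACs for a depthwise k x k convolution followed by a 1 x 1 pointwise convolution."""
    depthwise = layer.h_out * layer.w_out * layer.c_in * layer.k * layer.k
    pointwise = layer.h_out * layer.w_out * layer.c_in * layer.c_out
    return depthwise + pointwise


if __name__ == "__main__":
    # Hypothetical mid-network layer shape, chosen for illustration only.
    layer = ConvLayer(h_out=56, w_out=56, c_in=128, c_out=128, k=3)
    std = standard_conv_macs(layer)
    sep = depthwise_separable_macs(layer)
    print(f"standard:  {std:,} MACs")
    print(f"separable: {sep:,} MACs ({std / sep:.1f}x fewer)")
```

For this shape the separable form needs roughly 8× fewer MACs, matching the cost ratio 1/C_out + 1/k²; with 3×3 kernels the saving approaches 9× as channel counts grow.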

But under the surface, the ground is shifting.

Vendors are adding transformer support less out of immediate need than as a hedge against obsolescence. Transformers are more common in R&D pipelines than in production today, but every vendor knows that tomorrow’s agents, LLMs, and multimodal systems will depend on transformer-like architectures.

Interestingly, very few vendors report full support for advanced models like BERT, highlighting that transformer adoption at the edge is still immature. But the demand signal is clear, and compilers, NPUs, and SDKs are already starting to evolve.
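One way to see why transformer support lags is operator coverage. A CNN graph is built from a small set of operators that edge NPUs have accelerated for years, while a transformer block adds operators such as Softmax, LayerNorm, and GELU. The sketch below checks a simplified op list against a hypothetical NPU's supported-operator set. The op names, the support set, and the `coverage_report` helper are illustrative assumptions, not any vendor's SDK. In many toolchains, unsupported operators fall back to a host CPU, which adds latency and data movement.

```python
# Hypothetical supported-operator set for an illustrative NPU.
# Real coverage lists come from each vendor's SDK documentation.
NPU_SUPPORTED_OPS = {"Conv2D", "DepthwiseConv2D", "ReLU", "Add", "MaxPool", "MatMul"}

# Simplified op sequences; real graphs contain many more nodes.
CNN_BLOCK = ["Conv2D", "ReLU", "DepthwiseConv2D", "ReLU", "Conv2D", "Add", "MaxPool"]
TRANSFORMER_BLOCK = [
    "MatMul",                    # fused Q/K/V projection
    "MatMul",                    # Q @ K^T
    "Softmax",
    "MatMul",                    # attention weights @ V
    "MatMul",                    # output projection
    "Add", "LayerNorm",
    "MatMul", "GELU", "MatMul",  # feed-forward network
    "Add", "LayerNorm",
]


def coverage_report(model_ops: list[str], supported: set[str]) -> tuple[float, list[str]]:
    """Return the fraction of ops the NPU can run and the sorted unsupported op types."""
    covered = sum(1 for op in model_ops if op in supported)
    unsupported = sorted({op for op in model_ops if op not in supported})
    return covered / len(model_ops), unsupported


if __name__ == "__main__":
    for name, ops in [("CNN", CNN_BLOCK), ("Transformer", TRANSFORMER_BLOCK)]:
        fraction, missing = coverage_report(ops, NPU_SUPPORTED_OPS)
        print(f"{name}: {fraction:.0%} of ops on NPU; CPU fallback for {missing or 'none'}")
```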

Multimodality and the Rise of Agentic AI

We’re approaching the next inflection point: Agentic AI. Not just models that respond, but ones that act. Not just inputs and outputs, but memory, reasoning, goals.

These agents aren’t far-off sci-fi—they’re already trickling into consumer devices. Think voice assistants that don’t just execute commands but proactively suggest actions. Or AR glasses that see what you see, hear what you hear, and infer what you need.

According to the report, edge devices are already being adapted to support multimodal models, which combine video, audio, touch, and contextual inputs. The report also lists Agentic AI as a major driver of innovation and predicts that such agents will be deployed primarily on mobile platforms with demanding performance-per-watt requirements.

This shift will define the next generation of smart devices.

The SIP Behind the Curtain

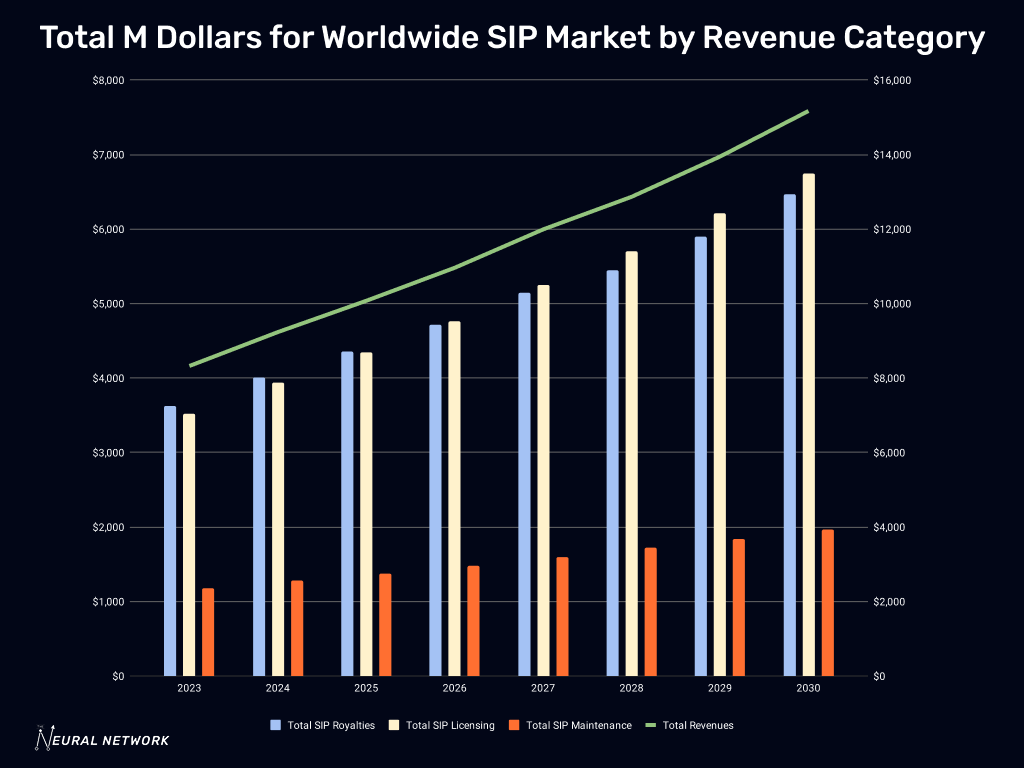

All of this intelligence needs silicon—and increasingly, that silicon is built on licensed IP. The AI SIP (Semiconductor Intellectual Property) market is forecast to grow from $633 million in 2024 to $2.2 billion by 2030, a CAGR of 23.2%.

Within that market:

NPU SIPs are leading the charge due to their role in accelerating inference.

AI-SIP’s share of the overall SIP market is expected to more than double, from 6.9% to 14.6%.
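The growth figures quoted in this article are easy to sanity-check with a compound annual growth rate (CAGR) calculation, CAGR = (end / start)^(1 / years) - 1, over the six years from 2024 to 2030. This minimal sketch uses only numbers stated in the article; the helper names are illustrative.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1


def project(start: float, rate: float, years: int) -> float:
    """Value after compounding `start` at `rate` per year for `years` years."""
    return start * (1 + rate) ** years


YEARS = 2030 - 2024  # forecast horizon used in the quoted figures

# (2024 value, 2030 value) as quoted in the article
FORECASTS = {
    "Edge-AI SoC units (billions)": (2.2, 8.7),
    "Edge-AI SoC revenue ($B)": (32.4, 102.9),
    "AI SIP revenue ($M)": (633.0, 2200.0),
}

if __name__ == "__main__":
    for name, (start, end) in FORECASTS.items():
        print(f"{name}: {end / start:.2f}x overall, {cagr(start, end, YEARS):.1%} CAGR")
    print(f"$633M compounded at 23.2% for {YEARS} years: ${project(633.0, 0.232, YEARS):,.0f}M")
```

Compounding $633M at the stated 23.2% for six years gives about $2.21B, and solving for the rate from the rounded $2.2B endpoint gives about 23.1%, so the two quoted figures agree within rounding. The same arithmetic implies roughly 26% annual growth in unit shipments and 21% in revenue.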

But not all silicon is created equal.

Vendors love to brag about TOPS (trillions of operations per second), but practitioners know that energy per inference and real-time latency matter far more at the edge. Operator coverage and activation-function support determine which models your chip can actually run; memory access patterns determine how fast it runs them.
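A rough way to compare accelerators on energy is to approximate energy per inference as average power during inference multiplied by latency (watts × milliseconds = millijoules). The two chips in this sketch are hypothetical, with made-up numbers chosen only to show that a higher TOPS rating does not guarantee lower energy. A real comparison would use measured power and latency on the target model and would also account for idle power between inferences.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    name: str
    peak_tops: float    # headline figure from a datasheet
    avg_power_w: float  # average power while running the target model
    latency_ms: float   # batch-1 latency for one inference

    @property
    def energy_mj(self) -> float:
        """Energy per inference: watts x milliseconds = millijoules."""
        return self.avg_power_w * self.latency_ms


# Hypothetical parts; the numbers are illustrative, not measurements.
CHIPS = [
    Accelerator("chip_a", peak_tops=26.0, avg_power_w=5.0, latency_ms=8.0),
    Accelerator("chip_b", peak_tops=10.0, avg_power_w=1.5, latency_ms=12.0),
]

FRAME_BUDGET_MS = 1000 / 30  # real-time latency budget at 30 frames per second

if __name__ == "__main__":
    for chip in sorted(CHIPS, key=lambda c: c.energy_mj):
        fits = "meets" if chip.latency_ms <= FRAME_BUDGET_MS else "misses"
        print(f"{chip.name}: {chip.peak_tops:.0f} TOPS, {chip.energy_mj:.1f} mJ/inference, "
              f"{fits} the {FRAME_BUDGET_MS:.1f} ms budget")
```

Here the lower-TOPS part uses less than half the energy per inference while still finishing well inside a 33 ms frame budget at 30 fps; whether 12 ms is fast enough depends on the application's latency requirement.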

As the report points out, TOPS is a weak metric that fails to account for bottlenecks like memory bandwidth and model complexity. Batch size also matters: headline TOPS assumes a fully utilized compute array, which usually takes large batches to achieve, so many NPUs that look fast on paper crumble in single-inference, real-time applications.
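The memory-bandwidth and batch-size effects can be illustrated with the roofline model, which caps attainable throughput at the lower of peak compute and memory bandwidth × arithmetic intensity (operations per byte moved). This sketch assumes a weight-dominated dense layer, such as a fully connected or transformer projection layer, with int8 weights streamed from DRAM once per batch, so each weight byte supports two operations (a multiply and an add) per sample. It ignores activation traffic and on-chip caching, and the 20 TOPS peak and 25.6 GB/s bandwidth are hypothetical values, not figures for any specific NPU.

```python
def attainable_tops(peak_tops: float, bandwidth_gb_s: float, ops_per_byte: float) -> float:
    """Roofline model: throughput is capped by compute or by memory traffic."""
    # GB/s x ops/byte = giga-ops/s; divide by 1000 to express in TOPS.
    memory_bound_tops = bandwidth_gb_s * ops_per_byte / 1000.0
    return min(peak_tops, memory_bound_tops)


def dense_layer_intensity(batch_size: int, bytes_per_weight: int = 1) -> float:
    """Ops per byte for a dense layer whose weights are read from DRAM once per batch.

    Each weight is used for one multiply and one add per sample, so weight reuse
    (and therefore intensity) grows linearly with batch size. Activation traffic
    and on-chip caching are ignored.
    """
    return 2.0 * batch_size / bytes_per_weight


PEAK_TOPS = 20.0       # hypothetical headline rating
DRAM_BANDWIDTH = 25.6  # hypothetical DRAM bandwidth in GB/s

if __name__ == "__main__":
    for batch in (1, 8, 64, 512):
        tops = attainable_tops(PEAK_TOPS, DRAM_BANDWIDTH, dense_layer_intensity(batch))
        print(f"batch {batch:>3}: {tops:7.3f} TOPS ({tops / PEAK_TOPS:.1%} of peak)")
```

Under these assumptions, batch-1 inference reaches well under 1% of peak, and the chip only becomes compute-bound at batch sizes in the hundreds. Convolutional layers reuse each weight across many spatial positions and fare much better, which is one reason CNNs map well to edge NPUs while weight-heavy transformer layers are harder to run in real time.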

Complexity is the Cost of Progress

Modern SoCs are astonishingly complex. The report notes a projected 3.6× increase in average gate count between 2025 and 2030, reflecting the effort to integrate AI accelerators, power management, memory subsystems, and security—all into a single chip.

This isn't an add-on anymore. AI is defining the architecture.

Yet this complexity isn’t just technical. It’s organizational. Supporting customer-supplied models, retraining pipelines, and model compression flows requires tight coordination between hardware, software, and ML teams. And with most edge design teams lacking in-house data scientists, vendors are under pressure to provide easier-to-use software tooling.

What This Means for Builders

If you’re building for the edge—or betting on it—here’s what to watch:

CNNs still dominate, but transformer readiness is non-negotiable if you plan to compete past 2026.

Energy per inference > raw performance. Low-latency, single-shot inference is where most designs actually hit their limits.

Agentic AI is real—and edge-native by necessity. Keep an eye on multimodal fusion and context modeling.

Software stacks and model compatibility are the battlefield. If your toolchain requires deep MLOps expertise, you’ve already lost.

SIP matters more than ever. But make sure your NPU doesn’t just “run AI”—it must run your customer’s model, in real time, on-device, with minimal power.

One More Thing

The big story isn’t just that AI is leaving the cloud. It’s that intelligence is becoming ambient. Woven into the physical world. Perceptive, contextual, persistent.

This won’t happen overnight. But it’s already underway. If you're still designing for the past—models that assume connectivity, batch processing, or cloud latency—you’re going to miss it.

The edge isn’t a constraint. It’s an opportunity.

And the companies that realize that first are the ones who will shape the next decade.