Why Integration Will Define Enterprise AI Success

Google Cloud Next highlights full-stack AI and ease of integration as a value proposition

As someone who’s observed numerous technology adoption cycles, I’m increasingly convinced that we’re entering a new phase of enterprise AI. In this phase, integration capabilities will matter far more than just raw model performance.

The next wave of AI adoption won’t be defined by which company builds the most impressive model that tops the benchmark charts, but rather by which technology stack can most effectively solve the tough challenge of enterprise technology integration. That means connecting AI to existing systems and workflows and empowering users to build their own solutions, all while addressing the messy realities of organizational adoption.

Google Cloud’s latest announcements at Google Cloud Next provide a fascinating window into how this transition is unfolding. What we’re seeing is a shift from “can we build an AI that does X?” to “how do we integrate AI capabilities seamlessly across our organization?”

Time-to-Value as a Key Selling Point

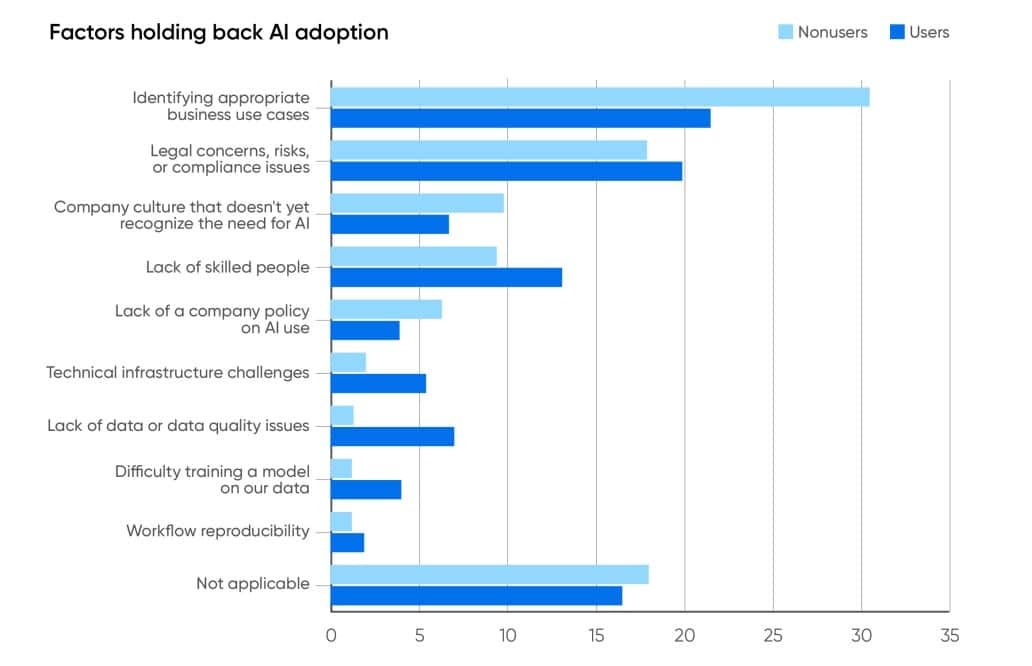

When I talk to executives about their AI efforts, I hear a consistent theme that harkens back to the early SaaS days: their greatest challenge isn’t accessing advanced models, but making those models work within their existing organizational context.

Consider for a moment that you’re a leader in a business that wants to integrate AI beyond just giving employees access to ChatGPT or Claude. In order to leverage this technology to its fullest extent, it would need to connect with:

Existing data sources (often siloed across departments)

Legacy systems that aren’t going away anytime soon

Security and compliance requirements

Existing workflows and business processes

People with varying levels of technical expertise

This is where Google’s investment in developing the full AI stack, from infrastructure to application-level agents, becomes particularly interesting. The aim is to speed up the time-to-value for AI initiatives by bringing AI to the data regardless of its location.

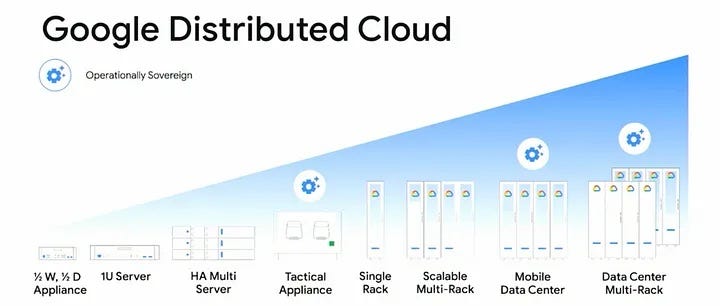

The On-Premises Revolution

Perhaps the most significant development that came out of Cloud Next is Google’s move to enable running Gemini models through Google Distributed Cloud (GDC). As an extension of Google Cloud, GDC provides fully managed software and hardware solutions for data centers and edge locations. This isn’t just another deployment option; it represents a profound strategic shift.

For the Fortune 1000 companies that make up the backbone of the global economy, on-premises deployment addresses several critical challenges in adopting AI:

Regulatory and data sovereignty requirements: Many organizations in regulated industries or crucial government sectors have strict requirements about where their data can reside and be processed.

Latency and data volume constraints: Moving petabytes of enterprise data to the cloud for processing is neither practical nor cost-effective.

Air-gapped security needs: Some applications, particularly in defense and sensitive industries (like healthcare or finance), require completely isolated environments.

What’s interesting is that Google is taking a fundamentally different approach from some of its competitors. While OpenAI and Anthropic have largely avoided on-premises deployments because doing so would give them less control over the quality and speed of their model usage, Google has recognized that the lack of an on-premises option creates an integration barrier for many enterprises.

By offering Gemini on GDC, Google has eliminated a major friction point. Organizations no longer need to choose between the best AI performance and the need to keep data on-premises. This is a big bet that enterprise AI market share will be won by whoever can make integration into existing systems and workflows as seamless as possible.

The Rise of Multi-Agent Systems

One of the more forward-looking elements of Google’s strategy is its embrace of agents and a multi-agent paradigm.

With Agentspace, users across an organization, no matter their technical expertise, will have access to specialized agents that are already integrated with enterprise systems and can be readily tailored to individual workflows.

By lowering the barrier to entry, Google is enabling the kind of grassroots adoption that often drives successful technology transformations.

Additionally, the introduction of the Agent Development Kit and Agent2Agent Protocol (A2A) signals an understanding that the future of enterprise AI won't be dominated by a single monolithic agent, but rather by ecosystems of specialized agents that collaborate to solve complex tasks.

This is reminiscent of how complex organizations work in the real world—specialized teams with distinct expertise working together, rather than a single generalist trying to do everything.
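
To make the "specialized teams" idea concrete, here is a minimal Python sketch of a coordinator that hands each step of a task to whichever agent owns the required skill. It is an illustration only, not the Agent Development Kit or the A2A protocol: the `Agent` and `Coordinator` classes, the skill names, and the stand-in handlers are all hypothetical, and real agents would call models or enterprise systems instead of returning canned strings.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Agent:
    """A hypothetical specialized agent: a name, one skill, and a handler."""
    name: str
    skill: str
    handle: Callable[[str], str]


class Coordinator:
    """Routes each step of a plan to the agent registered for that skill."""

    def __init__(self) -> None:
        self._agents: Dict[str, Agent] = {}

    def register(self, agent: Agent) -> None:
        self._agents[agent.skill] = agent

    def run(self, plan: List[Tuple[str, str]]) -> List[str]:
        results = []
        for skill, payload in plan:
            agent = self._agents.get(skill)
            if agent is None:
                raise LookupError(f"no agent registered for skill {skill!r}")
            results.append(f"{agent.name}: {agent.handle(payload)}")
        return results


# Stand-in handlers (assumptions for illustration only).
billing = Agent("billing-agent", "invoice_lookup", lambda q: f"found invoice for {q}")
support = Agent("support-agent", "draft_reply", lambda q: f"drafted reply about {q}")

coordinator = Coordinator()
coordinator.register(billing)
coordinator.register(support)

# One customer request split into two steps, each owned by a different specialist.
results = coordinator.run([
    ("invoice_lookup", "order 1234"),
    ("draft_reply", "order 1234"),
])
for line in results:
    print(line)
```

The point of the sketch is the shape, not the details: no single agent needs to know everything, and adding a new capability means registering another specialist rather than retraining a generalist.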

Enterprises inevitably operate in heterogeneous environments. The ability to automate work across diverse systems and integrate a wide range of data sources becomes a force multiplier for AI’s value.

The Real Enterprise Game

The battle for enterprise AI dominance is increasingly looking less like a model performance competition and more like an integration race. Google's strategy—with its emphasis on deployment flexibility, comprehensive agent frameworks, and interoperability protocols—reflects this understanding.

The next wave of enterprise AI won't be defined by technological breakthroughs, but by how effectively organizations can integrate AI into their existing operations. The question at hand is shifting from “which AI is best?” to “which AI ecosystem best fits into our existing technology landscape and workflows?”

If you’re a business leader looking to deploy AI at scale, here are some key principles to consider:

Start with business problems, not technology: Identify specific workflows where AI can drive measurable improvements.

Prioritize integration over model performance: A slightly less sophisticated model that integrates well with existing systems will often deliver more value than a more advanced model that exists in isolation.

Address data access early: Establish connections to enterprise data sources with proper security and governance.

Enable decentralized creation: Provide tools that allow teams across the organization to create purpose-specific agents.

Build for interoperability: Ensure AI systems can communicate with each other and with human workflows.

The organizations that will extract the most value from AI won't necessarily be those with access to the most advanced models. Rather, they'll be the ones that successfully weave AI capabilities into their existing business processes, technology stacks, and organizational structures with minimal friction.