Where Real Power Lies in the AI War

Why the winners of the AI revolution won’t be the ones building the smartest models—but the ones controlling the power, the data, and the distribution.

The Illusion of Power

When OpenAI announced its $6.5 billion acquisition of io, Jony Ive’s secretive hardware startup, it seemed like a design story.

The goal: to ship 100 million “AI companions”—screen-free, voice-aware devices that might one day replace the iPhone.

But the deeper meaning wasn’t aesthetic. It was strategic.

This was a declaration of war against Apple’s dominance, and a signal that OpenAI doesn’t just want to power the apps of the AI era. It wants to own the platform itself.

Yet the real story isn’t about Ive, Altman, or Apple. It’s about where value is actually being captured in the new AI economy.

For all the noise about model benchmarks and chat interfaces, power in AI isn’t where most people are looking.

Behind every AI breakthrough lies a network of industrial bottlenecks—megawatts, chip fabs, data rights, and distribution pipelines—that determine who survives and who fades.

This is the quiet war unfolding beneath the hype.

The Foundations of Intelligence

“Intelligence consumes power—a lot of it.”

That single statement captures the first truth of the new era: AI is limited not by creativity, but by physics.

OpenAI’s Stargate project, reportedly a buildout of up to $500 billion, starts with a data center campus in Texas that will require its own power plant just to operate.

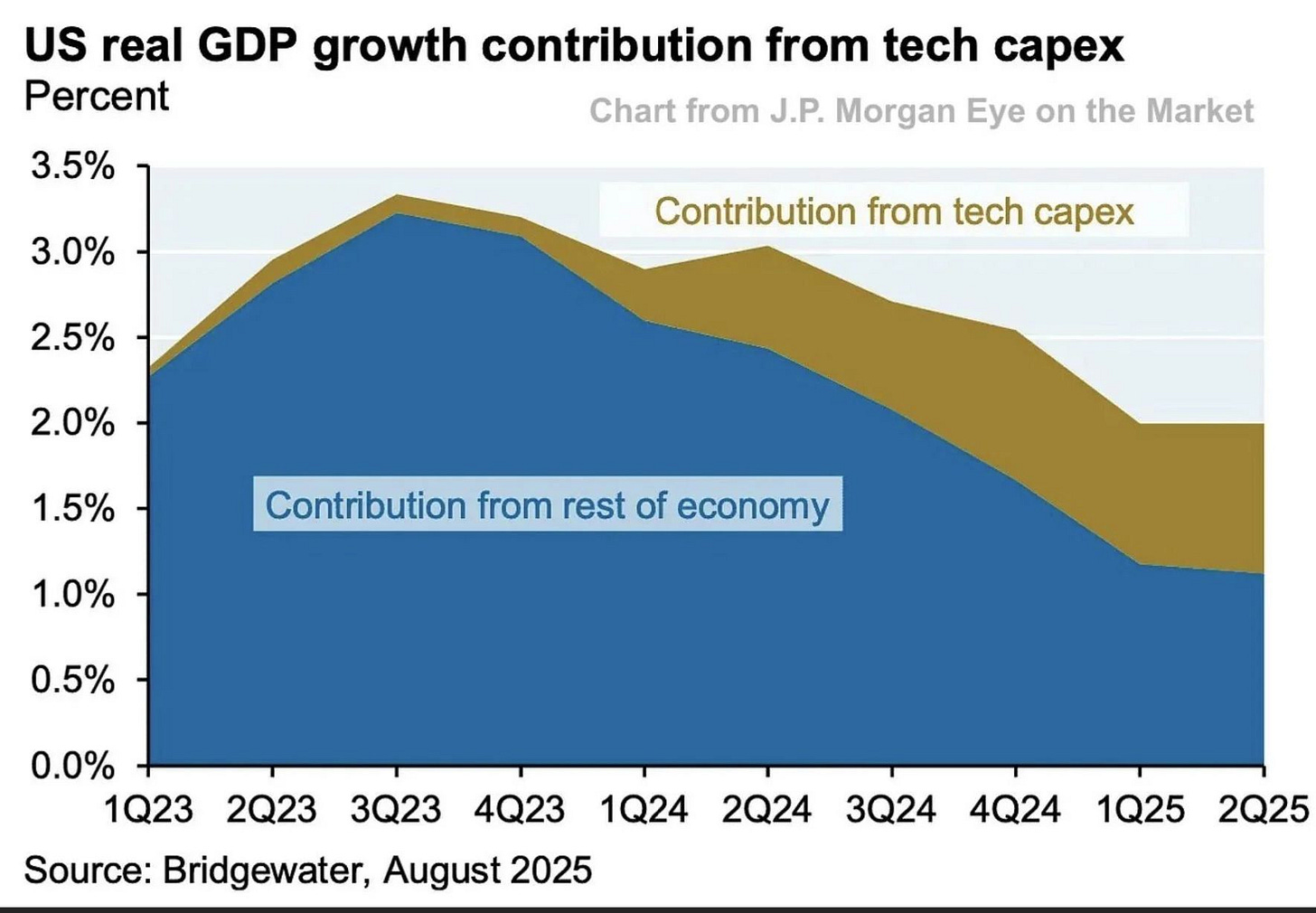

Bank of America now projects global AI capital expenditures will hit $432 billion next year. These aren’t software numbers. They’re industrial ones.

The New Landlords

Hyperscalers like Amazon, Microsoft, and Oracle are in a global scramble, not for users, but for land and power.

Whoever builds fastest wins. Construction speed has become a competitive advantage; each delay costs millions in forgone compute.

Even Bitcoin miners—long dismissed as speculative—have become unexpected suppliers. Their data centers, cooling systems, and long-term power contracts make them early owners of the new commodity: megawatts. CoreWeave, a former crypto miner turned AI cloud provider, is now a key node in OpenAI’s supply chain.

The Physics of Scarcity

The International Energy Agency projects that data centers could consume as much electricity as Japan by 2030.

Power has become the number-one constraint—ahead of chips or land. Every hyperscaler is now an energy company in disguise, quietly striking nuclear and natural gas deals to secure long-term supply.

In this world, the language of strategy has shifted from features and functions to gigawatts and cooling capacity.

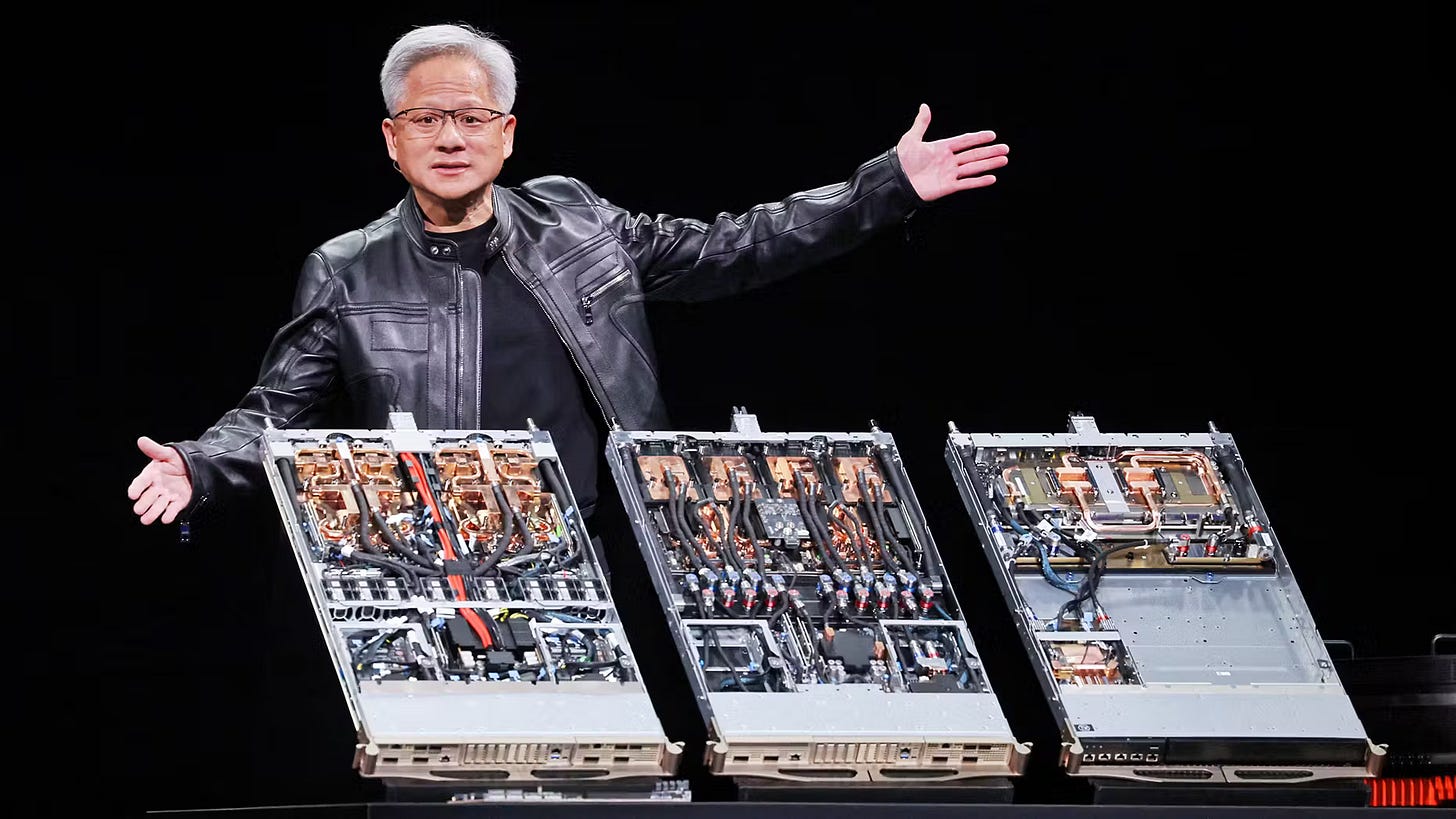

Nvidia’s Empire of Silicon

At the center of it all sits Nvidia. The company reportedly controls about 92% of the market for data center GPUs, and its moat isn’t just faster chips. It’s the entire ecosystem around them.

CUDA has become the lingua franca of AI research. NVLink and Nvidia’s networking hardware connect thousands of GPUs into a single distributed brain. Nvidia’s vertical integration, from networking to software, forms a fortress that’s nearly impossible to replicate.

The result is what insiders call the Nvidia tax: 80% gross margins on chips, gladly paid by hyperscalers racing to stay competitive. Jensen Huang often describes AI economics in terms of “revenue per megawatt.” Whoever controls the ratio of energy to compute now controls the economy.
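To make “revenue per megawatt” concrete, here is a minimal sketch of how the ratio could be computed for an inference cluster. The formula, the `Cluster` fields, and every number below are illustrative assumptions, not Nvidia’s definition or real figures; the point is only that at fixed power, more throughput per watt translates directly into more revenue.

```python
from dataclasses import dataclass

SECONDS_PER_YEAR = 365 * 24 * 3600


@dataclass(frozen=True)
class Cluster:
    """Hypothetical inference cluster; all fields are illustrative."""
    name: str
    power_mw: float                  # facility power draw, in megawatts
    tokens_per_second: float         # aggregate serving throughput
    utilization: float               # fraction of the year spent on paid work (0..1)
    price_per_million_tokens: float  # dollars charged per million tokens


def annual_revenue(cluster: Cluster) -> float:
    """Dollars earned per year from tokens actually served."""
    tokens = cluster.tokens_per_second * SECONDS_PER_YEAR * cluster.utilization
    return tokens / 1_000_000 * cluster.price_per_million_tokens


def revenue_per_megawatt(cluster: Cluster) -> float:
    """Annual revenue divided by the power the cluster draws."""
    return annual_revenue(cluster) / cluster.power_mw


# Two made-up clusters on the same power budget; the second serves
# twice as many tokens per second from the same megawatts.
baseline = Cluster("baseline", 10, 1_000_000, 0.5, 2.0)
efficient = Cluster("efficient", 10, 2_000_000, 0.5, 2.0)

for c in (baseline, efficient):
    print(f"{c.name}: ${revenue_per_megawatt(c):,.0f} per MW per year")
```

When power is the fixed input, doubling throughput per megawatt doubles revenue per megawatt, which is why the ratio of energy to compute matters so much in this framing.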

Every $1 of AI software revenue depends on roughly $100 of infrastructure investment.

The foundations of this industry are literal: steel, silicon, and power.

The Fuel: Data as the New Legal Commodity

“Compute is the engine; data is the fuel.”

For years, AI labs treated the open web as a free buffet—scraping trillions of words and images from blogs, forums, and news sites. That era is over.

The lawsuits reshaping data ownership—led by The New York Times v. OpenAI/Microsoft—will determine who truly owns the raw material of machine intelligence.

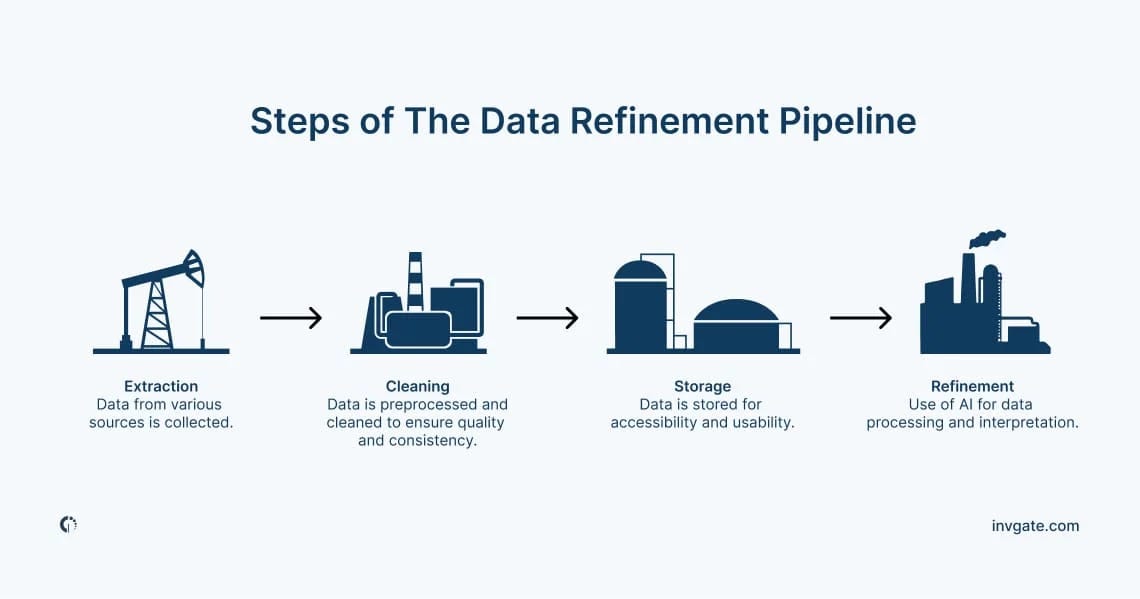

The Rise of Data Refineries

Companies like Scale AI now sit at the center of the AI supply chain, performing the unglamorous work of cleaning, labeling, and structuring data. Their value is logistical, not algorithmic—and that’s precisely what makes it defensible.

Meta’s $14.3 billion investment for a 49% stake in Scale AI signals the shift: control the refinery, and you control the flow.

Synthetic Data and Collapse Risk

As legal and financial barriers to human data grow, labs are turning inward—training models on their own outputs to manufacture synthetic datasets. It’s a clever workaround with a dark side.

When models are trained repeatedly on their own outputs, quality tends to degrade. Researchers call this model collapse: a kind of digital inbreeding that produces more confident but less accurate systems.
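A toy illustration of the effect, under heavy simplifying assumptions: a one-dimensional normal distribution stands in for the “model,” and each generation is fitted only to a small sample drawn from the previous generation’s fit. This is not the setup used in the research, and the function name and parameters are hypothetical, but it shows the basic mechanism: the fitted spread tends to shrink, so the model grows narrower (more “confident”) while losing the tails of the original data.

```python
import random
import statistics


def simulate_collapse(generations=200, samples_per_generation=10, seed=0):
    """Return the fitted standard deviation after each generation."""
    rng = random.Random(seed)
    mean, std = 0.0, 1.0  # generation 0: stands in for the original human data
    history = [std]
    for _ in range(generations):
        # Draw a small synthetic dataset from the current model...
        data = [rng.gauss(mean, std) for _ in range(samples_per_generation)]
        # ...then "retrain" by fitting a new normal distribution to it.
        mean = statistics.fmean(data)
        std = statistics.pstdev(data)
        history.append(std)
    return history


history = simulate_collapse()
print(f"spread at generation 0:   {history[0]:.3f}")
print(f"spread at generation {len(history) - 1}: {history[-1]:.3e}")
```

Each refit slightly underestimates the spread on average, and because every generation only sees the previous one’s output, those small losses compound instead of averaging out.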

The data war is no longer about quantity—it’s about legitimacy. The companies that secure exclusive, high-quality, and lawful data pipelines will fuel every layer above them.

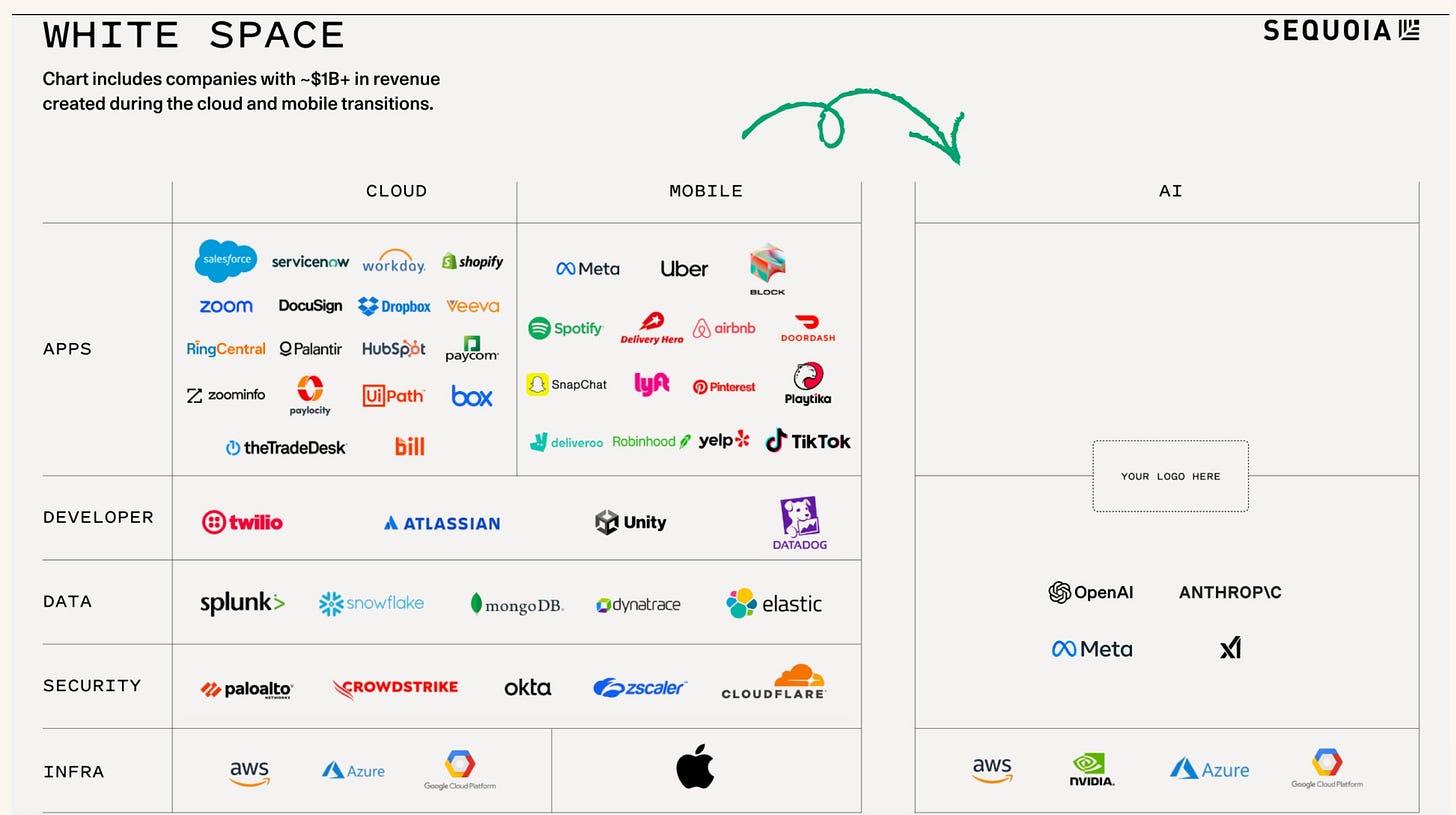

The Fragile Middle: Models and Interfaces

The public face of AI—the models and the devices they inhabit—sits atop this industrial scaffolding, but it’s also the most unstable part of the stack.

The Model Arms Race

The talent market has become absurd: seven-figure signing bonuses, $100 million retention packages, and a few hundred elite researchers courted personally by CEOs.

Yet all that capital buys less differentiation every quarter. The “DeepSeek moment” in early 2025, when a small Chinese startup achieved frontier-level results on older chips, suggested that breakthroughs are no longer gated by hardware budgets.

OpenAI, Anthropic, DeepMind, and xAI are now trapped on a treadmill of incremental releases (GPT-4.1, Gemini 2.5, Claude 3.5), each one quickly matched by rivals. The moat isn’t intelligence—it’s iteration speed.

The Interface Wars

Meanwhile, the front door to AI—the user interface—is undergoing its own transformation. Apple still dominates distribution with two billion active devices but is playing defense, outsourcing AI capability to OpenAI.

Meta’s Ray-Ban glasses, Google’s multimodal AI mode, and Perplexity’s Comet browser all challenge the notion that AI must live inside a phone or search box. Comet, whose maker is backed by Jeff Bezos and Nvidia, reimagines the browser as an agentic operating system: a space where the web doesn’t just answer, but acts.

Owning the interface means owning the default—and in an AI world, the default is destiny.

From Potential to Profit: The Boomtown Layer

For all the hype around frontier models, the real money is flowing into the application layer—the companies quietly wiring AI into everyday business systems.

Salesforce’s $8 billion acquisition of Informatica was about data pipelines, not demos. Adobe’s Firefly and Sensei tools continue to lift revenue.

Accounting firm RSM reports 80% efficiency gains from autonomous compliance agents. Startups like Mechanis are building “robotic employees” that execute tasks end-to-end.

This is the boring but lucrative part of the AI economy: where intelligence becomes infrastructure.

The Age of Agentic Commerce

OpenAI’s latest DevDay unveiled its next big play—turning ChatGPT into an operating system for digital transactions.

Apps like Spotify, Uber, and Canva can now be called directly inside ChatGPT. Instant Checkout integrates Shopify, Stripe, and Etsy, letting users complete purchases without leaving the chat.

This isn’t about subscriptions anymore—it’s about transaction flow. ChatGPT becomes a super-aggregator, controlling both the conversation and the commerce that follows.

In Ben Thompson’s framing, OpenAI is trying to become the Windows of AI: the layer every user touches, and every developer must build for.

The Productive Bubble

Every technology revolution rides a bubble. The railroad bubble left tracks. The dot-com bubble left fiber. The AI bubble will leave power.

Even if many current players vanish, the infrastructure they’re funding—data centers, grids, and new energy capacity—will endure. That’s what makes this a productive bubble, not a destructive one.

As Ben Bajarin notes, the companies sitting on land and power “basically have gold mines.” AI demand will drive innovation in energy—especially in nuclear micro-reactors and natural-gas projects—over the next decade.

The industry’s biggest externality may also become its greatest legacy: a rebuilt electrical backbone for the digital world.

Industrial Strategy Returns

What’s emerging looks less like Silicon Valley and more like a 21st-century industrial economy.

Oracle has become the “TSMC of data centers,” a neutral infrastructure provider for anyone who can pay.

Intel is being revived as a national insurance policy—too critical to fail, too slow to survive without help.

AMD’s partnership with OpenAI echoes the old IBM–Intel–AMD triad of the PC era, creating a second-source strategy reminiscent of the early Windows ecosystem.

Nvidia, meanwhile, is quietly financing its customers—distributing GPUs and guarantees the way an empire distributes currency.

The parallels to history are striking. Just as Microsoft became indispensable to the PC economy, OpenAI is positioning itself as the linchpin of the AI buildout—organizing, intentionally or not, the ecosystem around its platform. And just as IBM needed AMD to keep Intel honest, OpenAI needs AMD (and possibly Intel) to keep Nvidia’s margins from swallowing the stack.

Where Real Power Lies

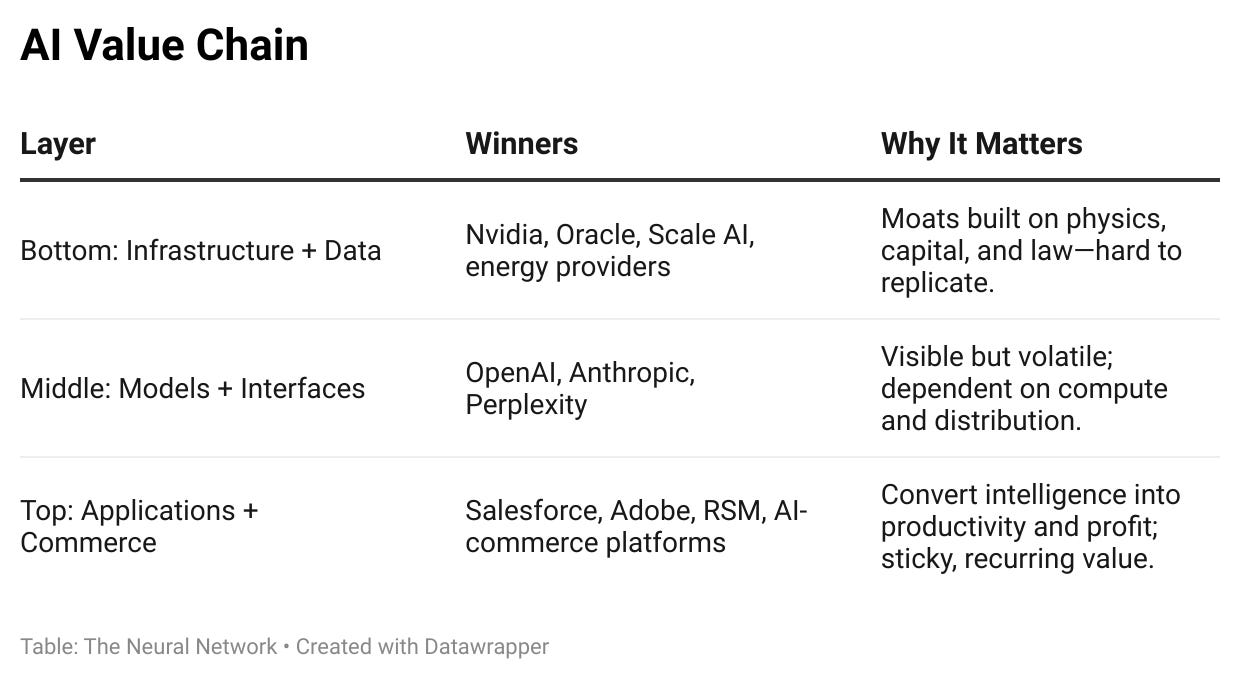

The glamour of AI hides a simple truth: power is consolidating at the bottom and the top of the value chain.

Everyone else is renting space in between.

Real strategic leverage comes from controlling what AI runs on, or where its value lands.

Everything in the middle—models, assistants, and APIs—is a bridge, not a fortress.

The Framework for Durable Power

A simple lens for separating signal from noise:

Infrastructure: Does this increase or reduce dependency on a single compute or energy provider?

Data: Does it generate proprietary data or recycle the commons?

Model: Is it a real performance leap or just an incremental benchmark?

Interface: Does it reinforce existing platforms or create a new one?

Application: Does it make someone money right now?

Ask these questions, and the power structure of any AI announcement becomes clear.
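For readers who like checklists, the five questions can be sketched as a small scoring helper. Everything here is a hypothetical illustration: the yes/no phrasing of each check is an assumption (for example, treating “creates a new platform” as the stronger answer), and the sample announcement is invented.

```python
LAYERS = ("infrastructure", "data", "model", "interface", "application")

# Each question rephrased so that "True" is the answer that signals durable power.
CHECKS = {
    "infrastructure": "reduces dependency on a single compute or energy provider",
    "data": "generates proprietary data rather than recycling the commons",
    "model": "is a real performance leap, not an incremental benchmark",
    "interface": "creates a new platform rather than reinforcing an existing one",
    "application": "makes someone money right now",
}


def assess(answers):
    """Return the layers, in stack order, where the answer is True."""
    missing = [layer for layer in LAYERS if layer not in answers]
    if missing:
        raise ValueError(f"unanswered layers: {missing}")
    return [layer for layer in LAYERS if answers[layer]]


def summarize(name, answers):
    """Format a one-screen readout of an announcement against the lens."""
    strong = assess(answers)
    lines = [f"{name}: {len(strong)} of {len(LAYERS)} checks passed"]
    for layer in LAYERS:
        mark = "yes" if answers[layer] else "no"
        lines.append(f"  {layer:<14} {mark:<3} ({CHECKS[layer]})")
    return "\n".join(lines)


# An invented announcement, answered by hand.
example = {
    "infrastructure": False,
    "data": True,
    "model": False,
    "interface": False,
    "application": True,
}
print(summarize("Hypothetical product launch", example))
```

The score itself matters less than where the “yes” answers fall: by this essay’s argument, checks passed at the bottom or the top of the stack count for more than those in the middle.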

The New Industrial Order

The AI war is no longer defined by algorithms—it’s defined by infrastructure.

At the bottom of the stack, control over compute, power, and data pipelines determines the pace and scale of progress.

At the top, applications and agentic systems decide where value is ultimately realized.

The middle layers—models and interfaces—remain volatile, dependent on external suppliers and distribution channels.

Power sits at the bottom of the stack. Profit sits at the top.

Long-term advantage will accrue to:

Infrastructure owners optimizing the energy-to-compute ratio.

Application builders converting AI capability into measurable productivity or commerce.

Everyone else operates on rented ground.

In short: The future of AI will be shaped less by breakthroughs in intelligence and more by the efficiency of the systems that support it.

Real power lies in controlling the flow—from electrons to enterprise outcomes.