The Return of the Local Brain

Why on-premises, small-model AI is becoming the enterprise default

For years, the cloud seemed like the natural home for AI. If you needed GPUs, you spun up a cluster; if you wanted the latest model, you tapped an API. Everything about computing appeared to be moving toward centralization—abstracted, elastic, and infinitely scalable.

Yet somewhere between soaring API bills, data-residency headaches, and tightening compliance rules, that logic began to crack. More enterprises are quietly pulling workloads back in-house. IDC reports that most now repatriate at least part of their AI stack each year. The reason isn’t nostalgia; it’s necessity.

Cloud made AI accessible. On-prem is making it accountable.

From Model to Machine: The Agentic Shift

The last generation of AI systems answered questions; the next generation takes action.

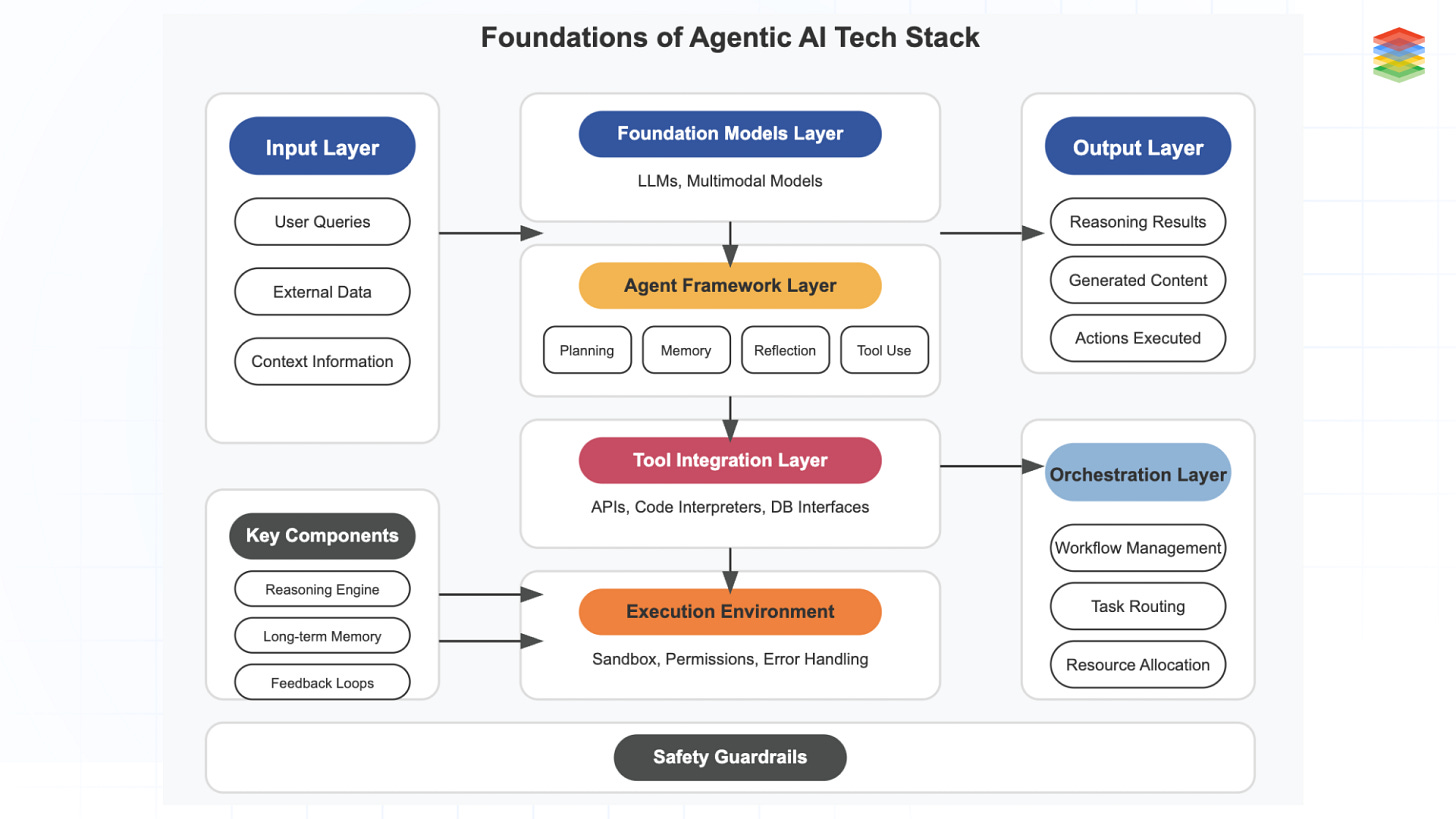

Agentic AI represents that evolution—models that can plan, reason, and execute autonomously. These systems don’t simply respond to prompts; they operate within workflows, drafting reports, monitoring pipelines, triggering alerts, and making real decisions with minimal human oversight.

That change—from inference to execution—exposes the limits of cloud-based architecture. Agents depend on continuous context from internal systems, ultra-low latency for real-time decisions, and persistent memory that respects corporate boundaries. When they process sensitive data—financial records, medical scans, or network telemetry—sending information to external servers becomes unacceptable.

Agentic AI and on-prem infrastructure are converging because autonomy demands proximity and control. When your AI holds the keys to your business, you don’t want it living in someone else’s house.

The Case for On-Premises Intelligence

On-prem AI isn’t a regression; it’s a return to sovereignty. It gives organizations complete authority over where their intelligence resides, how it behaves, and who has access to it.

By deploying agentic systems locally, companies gain more than privacy; they gain predictability. Data stays within internal boundaries, which simplifies compliance with regimes such as HIPAA, GDPR, the Reserve Bank of India’s (RBI) data-localization rules, and India’s Digital Personal Data Protection (DPDP) Act. Inference happens beside the data itself, eliminating the lag that comes from remote processing. Costs stabilize because usage isn’t metered per call, and integrations become simpler when agents can communicate directly with enterprise systems like ERP or CRM.

Consider a regional bank that runs an on-prem agent to monitor transactions in real time. When the system detects anomalies, it can freeze accounts, generate compliance reports, and update logs, all within its private network. No external APIs, no round-trips to a remote provider, no exposure.
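To make the bank scenario concrete, here is a minimal Python sketch of such a loop, assuming a simple per-account z-score rule stands in for the anomaly detector. The `LocalMonitor` and `Transaction` names, the threshold of 4.0, and the log format are hypothetical; a production system would use a trained model and durable storage. The point is that detection, the freeze, and the compliance trail all happen in-process, with no outbound call.

```python
from dataclasses import dataclass, field
from statistics import mean, stdev


@dataclass
class Transaction:
    account: str
    amount: float


@dataclass
class LocalMonitor:
    """Hypothetical on-prem monitor: scoring, freezing, and logging all run in-process."""
    z_threshold: float = 4.0   # assumed cutoff; a real deployment would tune this
    min_history: int = 5       # skip scoring until an account has some history
    history: dict[str, list[float]] = field(default_factory=dict)
    frozen: set[str] = field(default_factory=set)
    audit_log: list[str] = field(default_factory=list)

    def is_anomalous(self, tx: Transaction) -> bool:
        past = self.history.get(tx.account, [])
        if len(past) < self.min_history:
            return False
        mu, sigma = mean(past), stdev(past)
        if sigma == 0:
            # Constant history: treat any different amount as anomalous.
            return tx.amount != mu
        return abs(tx.amount - mu) / sigma > self.z_threshold

    def process(self, tx: Transaction) -> str:
        if tx.account in self.frozen:
            self.audit_log.append(f"REJECTED {tx.account} {tx.amount:.2f}: account frozen")
            return "rejected"
        if self.is_anomalous(tx):
            self.frozen.add(tx.account)
            self.audit_log.append(f"FROZEN {tx.account} {tx.amount:.2f}: z-score anomaly")
            return "frozen"
        self.history.setdefault(tx.account, []).append(tx.amount)
        self.audit_log.append(f"OK {tx.account} {tx.amount:.2f}")
        return "ok"

    def compliance_report(self) -> list[str]:
        """Every non-routine event, ready for a reviewer."""
        return [line for line in self.audit_log if not line.startswith("OK")]


monitor = LocalMonitor()
for amount in [100, 120, 95, 110, 105, 98]:
    monitor.process(Transaction("acct-1", amount))
print(monitor.process(Transaction("acct-1", 9_000)))  # frozen
print(monitor.process(Transaction("acct-1", 50)))     # rejected
print(monitor.compliance_report())
```

Once an account is frozen, later transactions are rejected and logged rather than scored, so the compliance report captures both the triggering event and everything blocked afterward.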

The result isn’t just greater security—it’s operational clarity.

Smaller Models, Bigger Impact

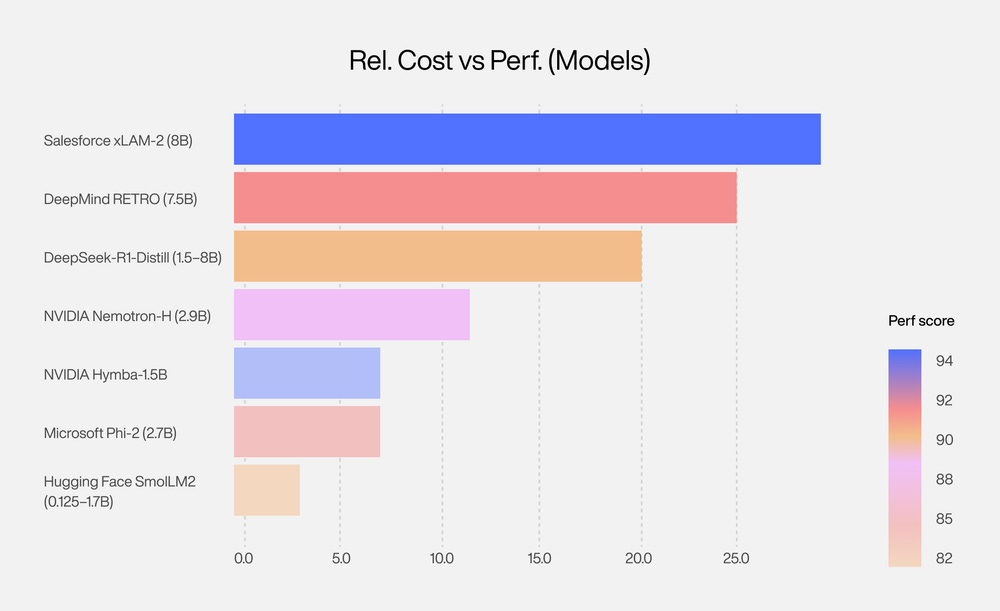

For years, the industry assumed that progress in AI meant building ever larger models. Then NVIDIA’s research paper, “Small Language Models Are the Future of Agentic AI,” challenged that assumption.

It argued for what edge engineers already suspected: most agentic workloads don’t need massive models with tens or hundreds of billions of parameters. Small Language Models (SLMs) can handle much of the routine work agents do while running efficiently on a single GPU, and the smallest ones even on a CPU. They can deliver sub-second latency, are cheaper to fine-tune on domain-specific data, and carry far less operational overhead.

In case studies of open-source agent frameworks such as MetaGPT, Open Operator, and Cradle, the authors estimated that roughly 40–70% of large-model calls could be handled by specialized small models without a meaningful loss in performance.

Use the right-sized brain for the right-sized problem.

The same principle that made microservices scalable applies here: specialization beats generalization. You don’t need a 70B-parameter model to summarize reports or flag anomalies—you need a focused, fine-tuned SLM running securely where your data already lives.
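One way to apply "right-sized" in practice is a router that sends narrow, repetitive tasks to a local SLM and reserves a larger model for everything else. The sketch below assumes a hand-maintained set of task types; the tier names, task names, and the 8,000-character cutoff are all hypothetical, and a real router might use a classifier or confidence score instead.

```python
from enum import Enum


class Tier(Enum):
    SMALL = "local-slm"     # hypothetical fine-tuned SLM served on-prem
    LARGE = "large-model"   # hypothetical larger fallback for open-ended work


# Assumed set of narrow, repetitive agent tasks that a specialized SLM handles well.
SMALL_MODEL_TASKS = {"summarize_report", "flag_anomaly", "extract_fields", "classify_ticket"}


def route(task_type: str, prompt: str, max_small_chars: int = 8_000) -> Tier:
    """Send a call to the smallest tier expected to handle it (heuristic sketch)."""
    if task_type in SMALL_MODEL_TASKS and len(prompt) <= max_small_chars:
        return Tier.SMALL
    # Unknown task types and oversized inputs fall back to the larger model.
    return Tier.LARGE


calls = [
    ("summarize_report", "Q3 operations report: throughput up, two outages"),
    ("flag_anomaly", "sensor 7 reads 98 C, baseline 60 C"),
    ("plan_migration", "Design a phased rollout for the new ERP module"),
]
routed = [route(task, prompt) for task, prompt in calls]
share_small = routed.count(Tier.SMALL) / len(routed)
print(f"{share_small:.0%} of calls stay on the small model")  # 67% of calls stay on the small model
```

Defaulting to the large tier keeps the router safe when it sees an unfamiliar task; the set of small-model tasks can grow as fine-tuned SLMs prove themselves.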

Building the On-Prem Agentic Stack

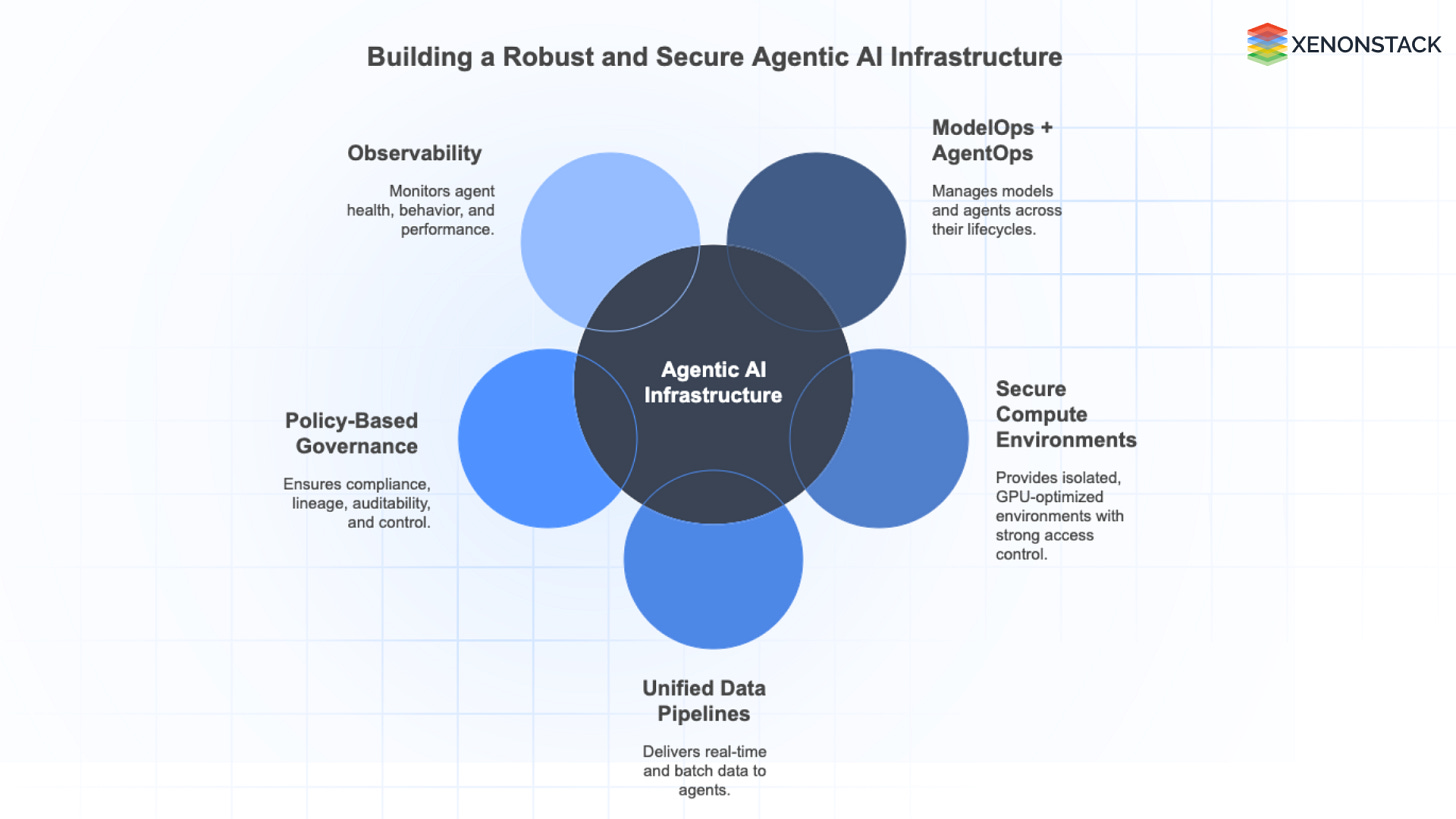

Bringing AI home isn’t about abandoning the cloud—it’s about gaining control and proximity. A modern on-prem stack blends three layers:

Hardware: local GPU clusters or accelerators with high-speed storage and networking.

Software: secure model repositories, orchestration tools, and APIs that connect agents to enterprise systems.

Governance: access controls, performance dashboards, and audit logs that keep operations transparent.

In practice, it’s simple: local inference → local reasoning → local action. The cloud still plays a role in retraining or analytics, but the core intelligence now runs inside your own walls.
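The local inference → local reasoning → local action flow, wrapped in the governance layer described above, can be sketched as a single step function. This is a minimal illustration under assumptions: the `POLICY` table, agent names, action names, and the in-memory `AUDIT_LOG` are hypothetical, and the `infer` callable is a stand-in for a call to an on-prem model.

```python
import json
from datetime import datetime, timezone
from typing import Callable

# Hypothetical access policy: which actions each agent role may take.
POLICY: dict[str, set[str]] = {
    "maintenance-agent": {"open_ticket"},
    "finance-agent": {"open_ticket", "hold_payment"},
}

AUDIT_LOG: list[str] = []


def audit(agent: str, action: str, allowed: bool, detail: str) -> None:
    """Append one JSON record per decision (in-memory here; durable storage in practice)."""
    AUDIT_LOG.append(json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "agent": agent,
        "action": action,
        "allowed": allowed,
        "detail": detail,
    }))


def run_step(agent: str, observation: str,
             infer: Callable[[str], str],
             decide: Callable[[str], str],
             actions: dict[str, Callable[[str], str]]) -> str:
    label = infer(observation)   # local inference: stand-in for an on-prem model call
    action = decide(label)       # local reasoning: map the model output to an action
    if action == "noop":
        audit(agent, action, True, label)
        return "noop"
    # Access control: the role must permit the action and an implementation must exist.
    allowed = action in POLICY.get(agent, set()) and action in actions
    audit(agent, action, allowed, label)
    if not allowed:
        return "denied"
    return actions[action](label)  # local action against an internal system


result = run_step(
    "maintenance-agent", "vibration=0.9",
    infer=lambda obs: "bearing_wear" if float(obs.split("=")[1]) > 0.7 else "normal",
    decide=lambda label: "noop" if label == "normal" else "open_ticket",
    actions={"open_ticket": lambda label: f"ticket opened: {label}"},
)
print(result)  # ticket opened: bearing_wear
```

Every decision, including denied ones, lands in the audit log before anything executes, which is what keeps autonomous actions transparent to reviewers.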

The goal isn’t isolation—it’s sovereignty with flexibility.

Where It’s Already Happening

This shift isn’t theoretical—it’s happening across industries where real-time decisions and data control are non-negotiable.

In manufacturing, agents analyze factory sensor data to predict equipment failures before they occur, reducing downtime and maintenance costs.

In healthcare, hospitals process imaging data like X-rays or CT scans locally, keeping patient records private while accelerating diagnosis.

In telecom, agentic systems predict network outages and automatically reroute bandwidth to maintain service continuity.

In energy and utilities, distributed agents balance grid loads across substations—even in disconnected or air-gapped environments.

Different industries, but the same logic: speed, sovereignty, and reliability have become the new competitive edge.

Why “Hybrid” Isn’t the Compromise You Think

Hybrid architectures—part cloud, part on-prem—promise flexibility but often deliver friction. Splitting workloads across environments introduces synchronization delays, fragmented data contexts, and dual governance complexity.

Agentic systems thrive on unified context: every decision, every action, rooted in a single, consistent environment. When that continuity breaks, performance and compliance erode together. For organizations dealing with sensitive data or mission-critical operations, half-local quickly becomes half-secure.

Agentic AI works best when its world is whole.

The Economics of Bringing AI Home

At first, the cloud looks cheaper: no upfront costs, instant scalability, and no hardware to manage. But once AI becomes central to business operations, the economics shift dramatically.

Cloud deployments come with per-token billing, exposure to unpredictable usage spikes, and vendor lock-in. On-prem solutions cost more to set up but offer stable, predictable costs over time. Owned hardware can be kept busy around the clock, latency is lower, and throughput isn’t capped by a provider’s rate limits. Combined with small-model efficiency and hardware amortization, on-prem can become the more cost-effective option within a few quarters for sustained, high-volume workloads.
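The break-even point follows from simple arithmetic: cumulative cloud spend grows at the metered monthly rate, while on-prem spend starts at the upfront hardware cost and grows at a lower running rate. The sketch below works this through; every figure in it (token volume, price per million tokens, hardware cost, running cost) is hypothetical and only illustrates the calculation.

```python
import math


def cloud_monthly_cost(tokens_per_month: float, usd_per_million_tokens: float) -> float:
    """Metered cost: every processed token is billed."""
    return tokens_per_month / 1_000_000 * usd_per_million_tokens


def breakeven_months(cloud_monthly: float, capex: float, onprem_monthly: float) -> float:
    """Months until cumulative on-prem spend falls below cumulative cloud spend.

    cloud(m)   = cloud_monthly * m
    on_prem(m) = capex + onprem_monthly * m
    Setting them equal gives m = capex / (cloud_monthly - onprem_monthly).
    """
    monthly_savings = cloud_monthly - onprem_monthly
    if monthly_savings <= 0:
        return math.inf  # at these rates on-prem never pays back
    return capex / monthly_savings


# Hypothetical figures for illustration only.
cloud = cloud_monthly_cost(tokens_per_month=2_000_000_000, usd_per_million_tokens=20.0)
months = breakeven_months(cloud_monthly=cloud, capex=300_000, onprem_monthly=15_000)
print(f"cloud: ${cloud:,.0f}/month, break-even after {months:.1f} months")
# cloud: $40,000/month, break-even after 12.0 months
```

With these made-up inputs, payback takes four quarters; the same formula also shows the flip side, since low or bursty usage shrinks the monthly savings and pushes break-even out indefinitely.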

In AI, control isn’t just power—it’s ROI.

The Future: Distributed, Local, and Agentic

The pendulum is swinging back toward local intelligence—not because the cloud failed, but because data, context, and decisions belong together.

Tomorrow’s enterprises will run networks of small, specialized agents that operate locally, collaborate securely, and share insights only when needed. This model of federated autonomy—many focused models instead of one massive one—will define the next phase of AI.
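The idea of agents that "share insights only when needed" can be illustrated with local agents that keep raw readings to themselves and export only a small aggregate when something crosses an alert level, while a coordination layer merges those aggregates. This is a minimal sketch under assumptions: `SiteAgent`, `Summary`, `coordinate`, the alert threshold, and the sensor values are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Summary:
    site: str
    count: int
    mean: float
    peak: float


class SiteAgent:
    """Hypothetical local agent: raw readings stay inside this object."""

    def __init__(self, site: str, alert_level: float) -> None:
        self.site = site
        self.alert_level = alert_level
        self._readings: list[float] = []

    def observe(self, value: float) -> None:
        self._readings.append(value)

    def share(self) -> Optional[Summary]:
        """Export an aggregate only when a reading crosses the alert level."""
        if not self._readings or max(self._readings) < self.alert_level:
            return None
        n = len(self._readings)
        return Summary(self.site, n, sum(self._readings) / n, max(self._readings))


def coordinate(summaries: list[Optional[Summary]]) -> dict:
    """Coordination layer: sees only shared summaries, never raw data."""
    shared = [s for s in summaries if s is not None]
    total = sum(s.count for s in shared)
    # Count-weighted mean across the sites that chose to share.
    pooled_mean = sum(s.count * s.mean for s in shared) / total if total else None
    return {"alerting_sites": [s.site for s in shared], "pooled_mean": pooled_mean}


plant_a, plant_b = SiteAgent("plant-a", 80.0), SiteAgent("plant-b", 80.0)
for v in [70.0, 85.0, 90.0]:
    plant_a.observe(v)
for v in [60.0, 65.0]:
    plant_b.observe(v)
report = coordinate([plant_a.share(), plant_b.share()])
print(report)
```

Here only plant-a shares anything, and even then only a count, mean, and peak; the coordinator acts as the thin central layer rather than the place where the data lives.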

The cloud will still matter, but as a coordination layer, not the core. The real thinking and acting will happen close to the data—at the edge, not in the ether.
