The Industrialization of Intelligence

Everyone’s chasing smarter models, but the real race is to power, cool, and deploy them fast enough.

We talk about AI like it’s software. It isn’t anymore.

The illusion of infinite compute—the sense that intelligence scales effortlessly in the cloud—has finally cracked. Behind every “smart” model is a warehouse full of chips, turbines, and coolant lines. Behind every frontier training run is a power grid, a transformer backlog, and a construction crew trying to build a gigawatt’s worth of capacity before the next model version drops.

Sam Altman once said he wants to “create a factory that can produce a gigawatt of new AI infrastructure every week.” It’s a poetic goal, but also a reminder: the next leap in AI capability won’t come from clever code. It will come from who can industrialize intelligence fastest.

The bottleneck has moved downstream, from models to metal.

The Great Buildout

The defining story of 2025 isn’t the next GPT. It’s the trillion-dollar race to build where those GPTs can live.

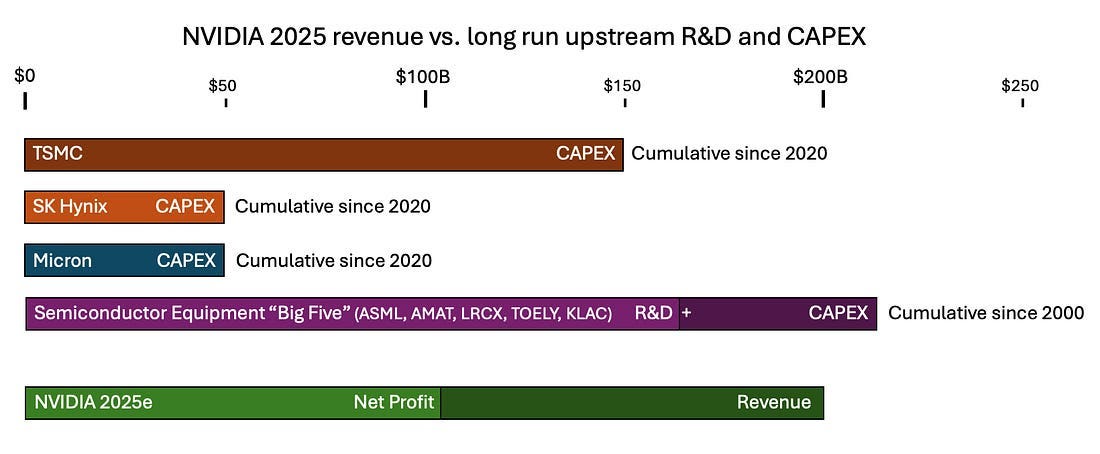

NVIDIA, with a single year of profit, could theoretically fund several years of TSMC’s fab buildout. Meta has pledged over $600 billion to AI infrastructure. Oracle is pouring $18 billion into new campuses. This is not a software cycle—it’s an energy-and-materials supercycle.

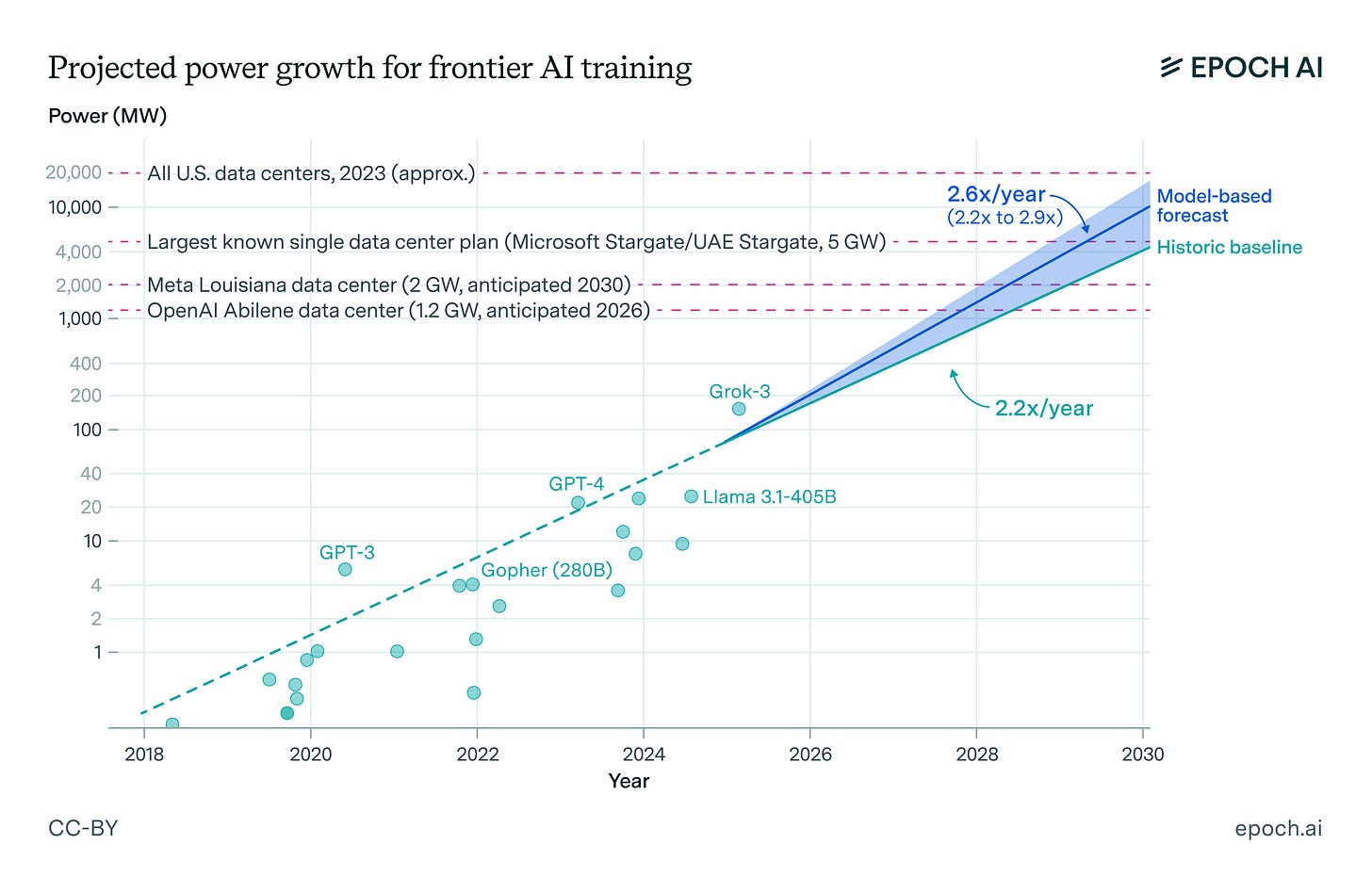

We used to think of data centers as digital warehouses. Now they’re AI factories: purpose-built facilities designed to convert electricity into cognition. NVIDIA coined the term, but it’s quickly become a reality. CoreWeave’s disclosures confirm what most engineers already suspected—there’s a direct, quantifiable relationship between compute scale and model quality. The more power you can marshal, the smarter your systems become.

And the numbers are staggering. A single Rubin Ultra Rack can draw nearly 600 kW, dwarfing traditional setups. Power and cooling are no longer infrastructure details—they’re the boundaries of innovation itself.
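To make that scale concrete, here is a rough back-of-the-envelope sketch of how many racks a gigawatt of site power could feed. The 600 kW figure comes from the text above and is treated as an upper bound. The PUE value (total facility power divided by IT power) is purely an assumption for illustration, and the function name is hypothetical.

```python
import math

RACK_POWER_KW = 600.0   # "nearly 600 kW" per rack, taken from the text as an upper bound
SITE_POWER_GW = 1.0     # one gigawatt of site capacity
ASSUMED_PUE = 1.2       # assumption: facility power / IT power, not a sourced figure


def racks_supported(site_power_gw: float, rack_power_kw: float, pue: float) -> int:
    """Return how many whole racks fit in a site power budget after overhead."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    if rack_power_kw <= 0:
        raise ValueError("rack power must be positive")
    # 1 GW = 1,000,000 kW; dividing by PUE leaves the power available to IT load.
    it_power_kw = site_power_gw * 1_000_000 / pue
    return math.floor(it_power_kw / rack_power_kw)


if __name__ == "__main__":
    print(racks_supported(SITE_POWER_GW, RACK_POWER_KW, ASSUMED_PUE))
```

Under these assumptions, a gigawatt feeds on the order of a thousand-plus racks, which is why a "gigawatt per week" target reads less like a software roadmap and more like a manufacturing quota.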

Compute capacity has become the new form of capital.

Energy Becomes the New Constraint

Every generation of computing has had a limiting reagent. For AI, it’s electricity.

Compute demand is outpacing the grid. Data centers that once scavenged industrial leftovers (abandoned aluminum plants, defunct auto factories) now find themselves competing for raw generation capacity. Power availability has replaced silicon as the true gating factor for scale.

The trade-offs are brutally real:

Natural gas: quick to deploy, dirty, and politically fraught—but the only option that meets short timelines.

Nuclear: clean with low operating costs, yet bound by decade-long permitting cycles.

Solar: cheap in theory, but requires 4–7× overcapacity plus batteries to run 24/7. The world’s largest solar farm covers seven Manhattans to sustain just 3 GW of smoothed output.
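The solar arithmetic is worth spelling out. The sketch below applies the 4–7× overcapacity range from the text to a target level of round-the-clock output. It is an illustration only: the function name is hypothetical, battery sizing is ignored, and pairing the multiplier with the 3 GW figure is my own simplification rather than a claim about any specific farm.

```python
def nameplate_range_gw(
    firm_output_gw: float,
    low_factor: float = 4.0,   # lower overcapacity multiplier from the text
    high_factor: float = 7.0,  # upper overcapacity multiplier from the text
) -> tuple[float, float]:
    """Return the (low, high) nameplate solar capacity needed for a firm 24/7 output."""
    if firm_output_gw < 0:
        raise ValueError("firm output must be non-negative")
    if low_factor > high_factor:
        raise ValueError("low_factor must not exceed high_factor")
    return firm_output_gw * low_factor, firm_output_gw * high_factor


if __name__ == "__main__":
    low, high = nameplate_range_gw(3.0)
    print(f"3 GW firm output needs roughly {low:.0f} to {high:.0f} GW of nameplate solar")
```

In other words, a few gigawatts of continuous load implies something like an order of magnitude more installed panel capacity, before storage enters the budget.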

Across the world, governments and utilities are scrambling to reconcile these pressures. The constraint is no longer technological—it’s infrastructural. Whoever learns to generate, distribute, and balance massive, continuous loads of power will shape the next decade of AI.

Every AI model is ultimately an energy story.

The Global Compute Divide

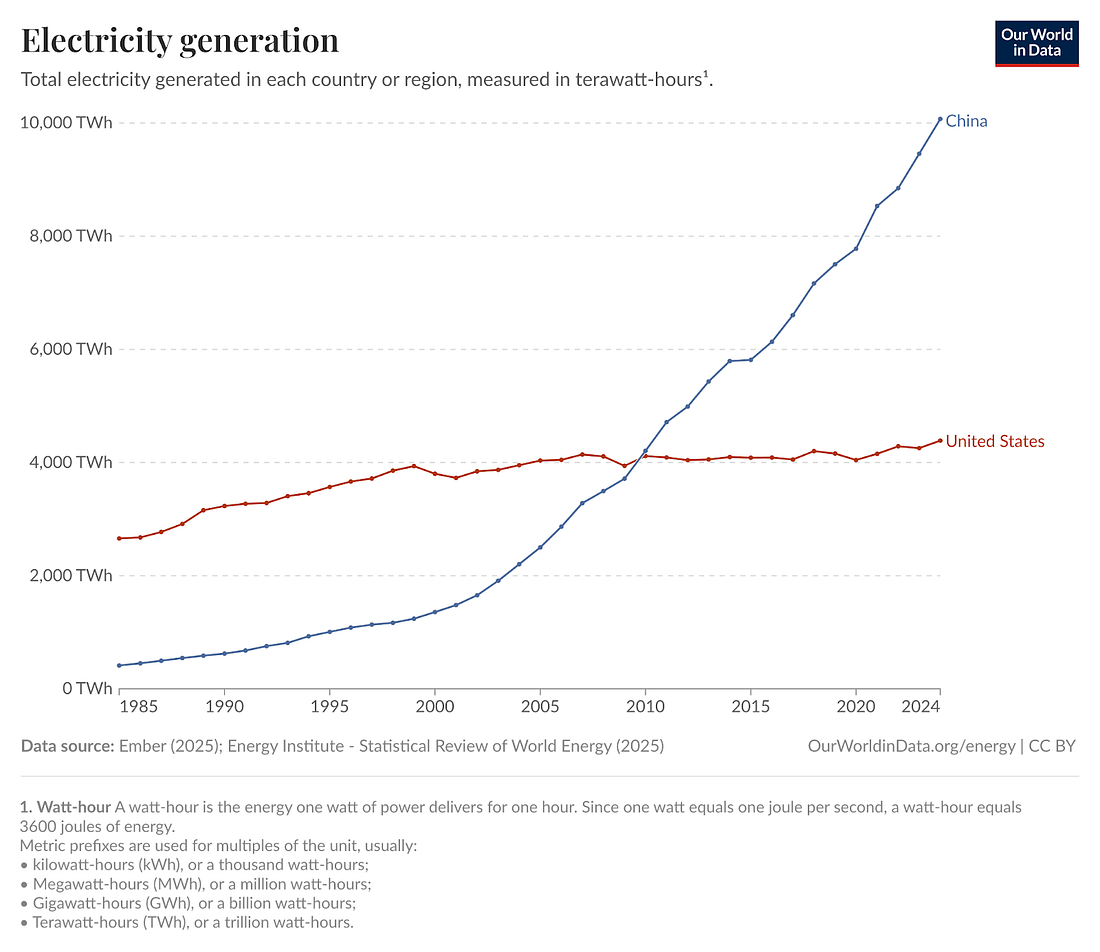

This is the new arms race—not for algorithms or data, but for energy and industrial throughput.

America still leads in chip design, model research, and capital velocity. But China is winning the long game of infrastructure scale. While U.S. hyperscalers fight grid delays and permitting backlogs, China continues to add power generation, transmission, and manufacturing capacity at an industrial pace. Its vertically integrated energy-to-compute pipeline, from solar fabrication to transformer production, lets it build data center power faster and cheaper than any rival. For every GPU cluster in the West, China is building the power plants that could host ten more.

The asymmetry is temporal as much as technical.

The U.S. runs on three-year depreciation cycles and quarterly CapEx reports.

China runs on decades.

If AI remains hardware-intensive, the winner may simply be whoever can sustain industrial expansion longest. The irony? Export controls might slow China’s chip supply today, but not forever. If the intelligence race turns physical—fueled by solar, steel, and labor—the balance of power could tilt eastward by 2030.

The Economics of Overbuild

Is all this spending sustainable? Maybe not—but that’s not the right question.

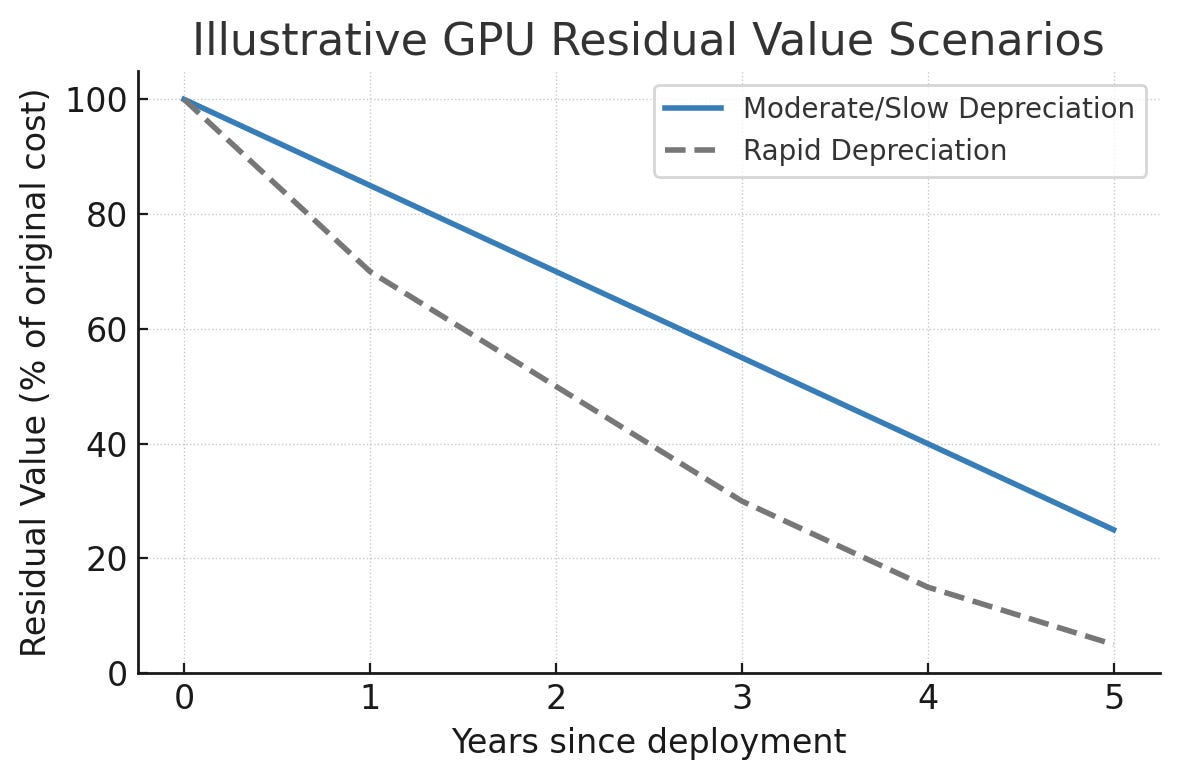

History shows that overbuilds often lay the groundwork for future revolutions. The dot-com bubble left us a global fiber network. The crypto boom built modular data center supply chains. Even if the AI bubble bursts, what remains is an industrial base capable of producing compute and power at unprecedented speed.

GPUs will depreciate in three years. The power plants and cooling systems will last decades. The enduring value might not be the models, but the manufacturing muscle we build along the way—the ability to turn capital into cognition on demand.
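A simple straight-line depreciation sketch shows why the two asset classes behave so differently on a balance sheet. The three-year GPU life is from the text. The 30-year life used for power and cooling assets is an assumption standing in for "decades," and the function name is hypothetical.

```python
GPU_LIFE_YEARS = 3.0      # from the text
PLANT_LIFE_YEARS = 30.0   # assumption: a stand-in for "decades"


def remaining_value_fraction(age_years: float, useful_life_years: float) -> float:
    """Straight-line depreciation: fraction of original value left at a given age."""
    if useful_life_years <= 0:
        raise ValueError("useful life must be positive")
    if age_years < 0:
        raise ValueError("age must be non-negative")
    # Value declines linearly and never drops below zero.
    return max(0.0, 1.0 - age_years / useful_life_years)


if __name__ == "__main__":
    for year in (1, 3, 10):
        gpu = remaining_value_fraction(year, GPU_LIFE_YEARS)
        plant = remaining_value_fraction(year, PLANT_LIFE_YEARS)
        print(f"year {year}: GPUs {gpu:.0%}, power and cooling {plant:.0%}")
```

Under this simplified model, by the time a GPU fleet is fully written off, the power and cooling assets behind it still carry most of their value.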

Some call it an AI bubble. I’d call it infrastructure compounding.

Even if the bubble bursts, the factories stay.

The Hidden Crisis and How Winners Will Build Differently

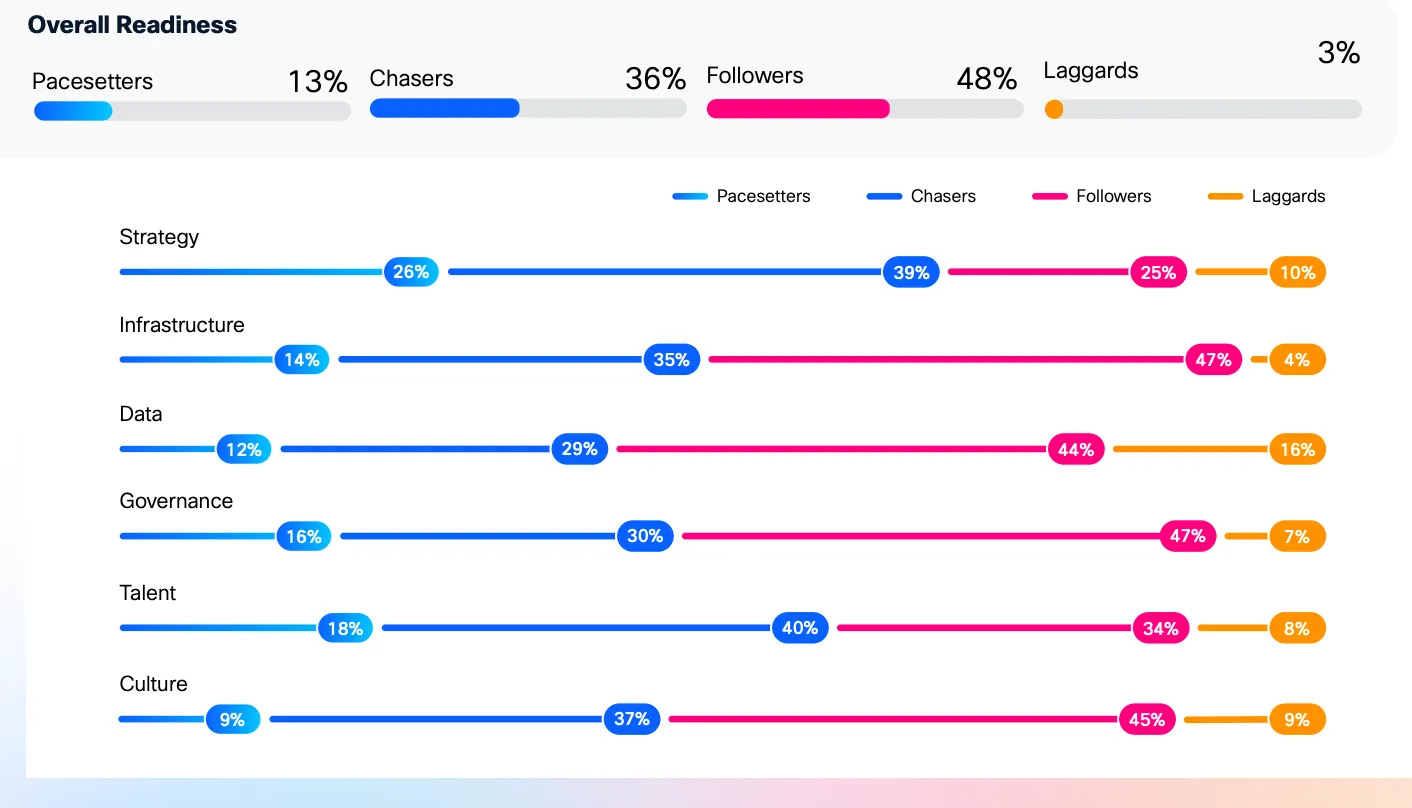

Cisco’s 2025 AI Readiness Index surveyed more than 8,000 executives across 30 markets. The result was sobering:

83% of companies plan to deploy AI agents.

Only 13% have the infrastructure to support them.

The rest are about to learn a costly lesson about the gap between ambition and capability. Cisco calls it AI infrastructure debt—the accumulation of shortcuts and compromises that come from rushing to deploy AI on systems never designed for it. It’s what happens when GPU scarcity meets legacy networks and “we’ll-fix-it-later” architectures.

Among those surveyed:

Only 26% have adequate GPU capacity.

31% feel prepared to secure autonomous AI agents.

85% admit their networks can’t handle AI workloads at scale.

The “Pacesetters,” that top 13%, show what readiness looks like. They deploy at ten times the speed, measure impact rigorously, and report 1.5× higher profitability.

Readiness is no longer about compute alone—it’s about placement.

Winners design for intent, not imitation. They split workloads between edge AI and cloud AI, aligning each with its natural strengths.

Every company I’ve seen use AI effectively to improve its operations takes this approach: the cloud learns, the edge acts. Their architectures behave more like organisms than systems, reflexive at the edges and thoughtful at the core.

So what separates the few who thrive from the rest who stall?

They build differently.

Treat infrastructure as product strategy. Decide early what runs local versus remote—it defines latency, cost, and trust.

Design for modularity. Edge and cloud systems evolve independently but connect seamlessly.

Automate lifecycle management. Synchronizing models across tiers is a living process, not a deployment step.

Secure from the ground up. Each node expands your attack surface; resilience must be systemic.
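The first rule of the playbook, deciding early what runs local versus remote, can be sketched as a small placement function. Everything here is hypothetical: the `Workload` fields, the 50 ms threshold, and the rule order are assumptions chosen to illustrate "the cloud to learn and the edge to act," not a description of any particular company's architecture.

```python
from dataclasses import dataclass

EDGE_LATENCY_THRESHOLD_MS = 50.0  # assumption: budgets at or below this need local execution


@dataclass(frozen=True)
class Workload:
    """Hypothetical description of an AI workload to be placed."""
    name: str
    latency_budget_ms: float
    data_must_stay_local: bool
    is_training: bool


def place(workload: Workload) -> str:
    """Return "edge" or "cloud" using three simple rules, checked in order."""
    # Rule 1: training is compute-heavy, so it goes to the cloud.
    # (Simplification: this wins even if the data is marked local.)
    if workload.is_training:
        return "cloud"
    # Rule 2: tight latency budgets or data residency needs keep inference at the edge.
    if workload.data_must_stay_local or workload.latency_budget_ms <= EDGE_LATENCY_THRESHOLD_MS:
        return "edge"
    # Rule 3: everything else defaults to the cloud.
    return "cloud"


if __name__ == "__main__":
    jobs = [
        Workload("defect-detection", 20.0, False, False),
        Workload("weekly-retrain", 3_600_000.0, False, True),
        Workload("report-summaries", 2_000.0, False, False),
    ]
    for job in jobs:
        print(f"{job.name}: {place(job)}")
```

The point of writing the rules down this early is that latency, cost, and trust stop being accidents of deployment and become explicit design inputs.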

This is the playbook for the industrial age of AI. The winners aren’t just scaling models—they’re distributing intelligence where it creates the most value.

The Industrialization of Intelligence

AI’s next leap won’t look like another app launch. It’ll look like a skyline.

Steel, concrete, and high-voltage substations—the material artifacts of intelligence—are spreading across Texas, Arizona, and Inner Mongolia. Each facility is a bet that our hunger for computation will outlast any model hype cycle. And even if the frenzy cools, the grid we build to feed it will remain—like the railways of a previous revolution.

We used to ask whether a product had market fit.

Now we ask whether an idea has the infrastructure to exist.

The smartest model in the world is useless if you can’t keep the lights on.