The AI Value Chain Is Collapsing Inward

Nvidia captures the rent, OpenAI rewrites the rules, and everyone else gets squeezed in between

The AI boom has a margin problem.

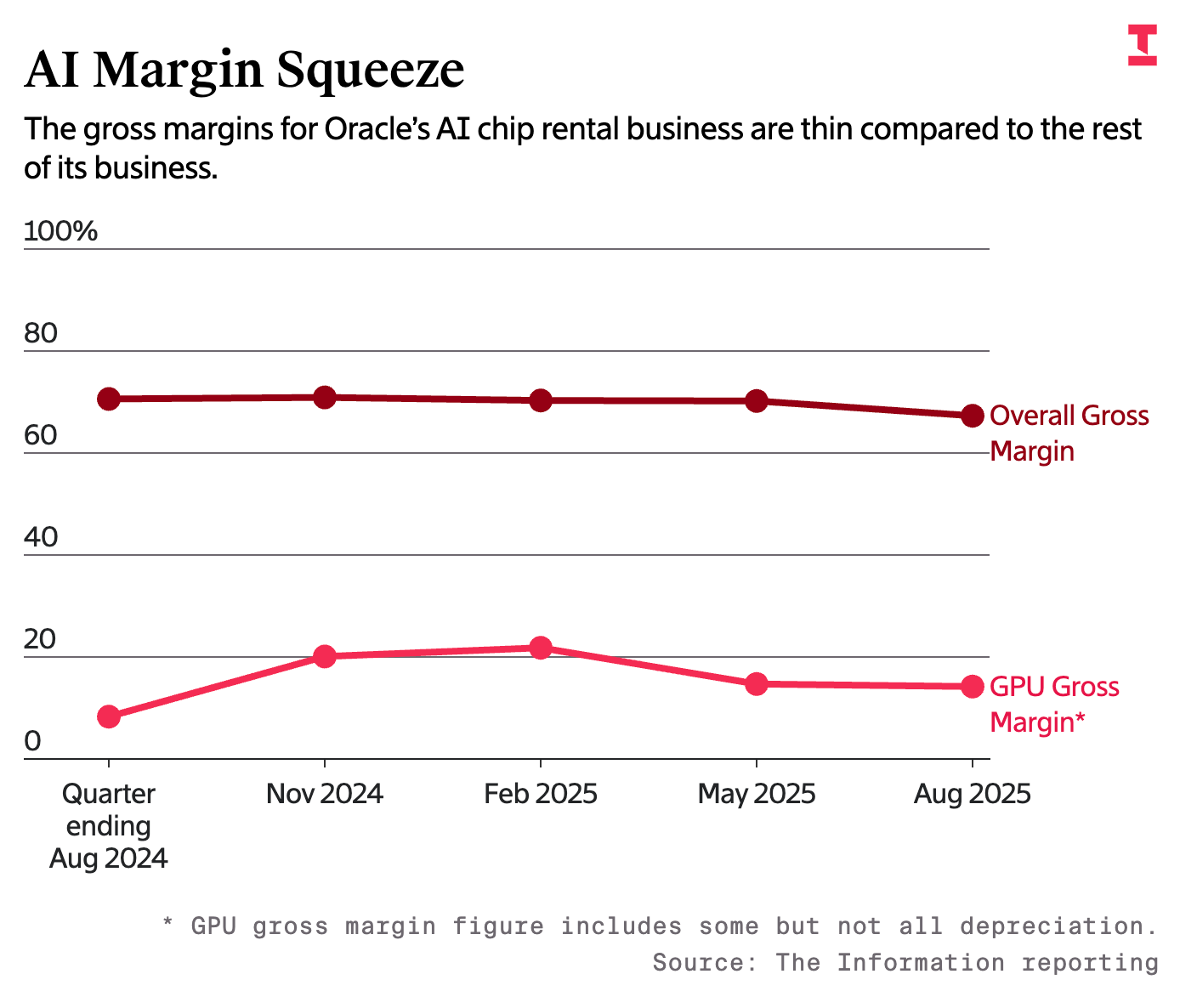

The world’s largest tech firms are renting out Nvidia GPUs at breakneck speed—and barely profiting.

Oracle, now a self-styled AI cloud leader, earns about fourteen cents in gross profit for every dollar of GPU rentals. AWS and Google Cloud face similar pressure. The infrastructure driving this “AI revolution” runs on shrinking margins and rising depreciation.

In a market built on exponential scale, very few are capturing value.

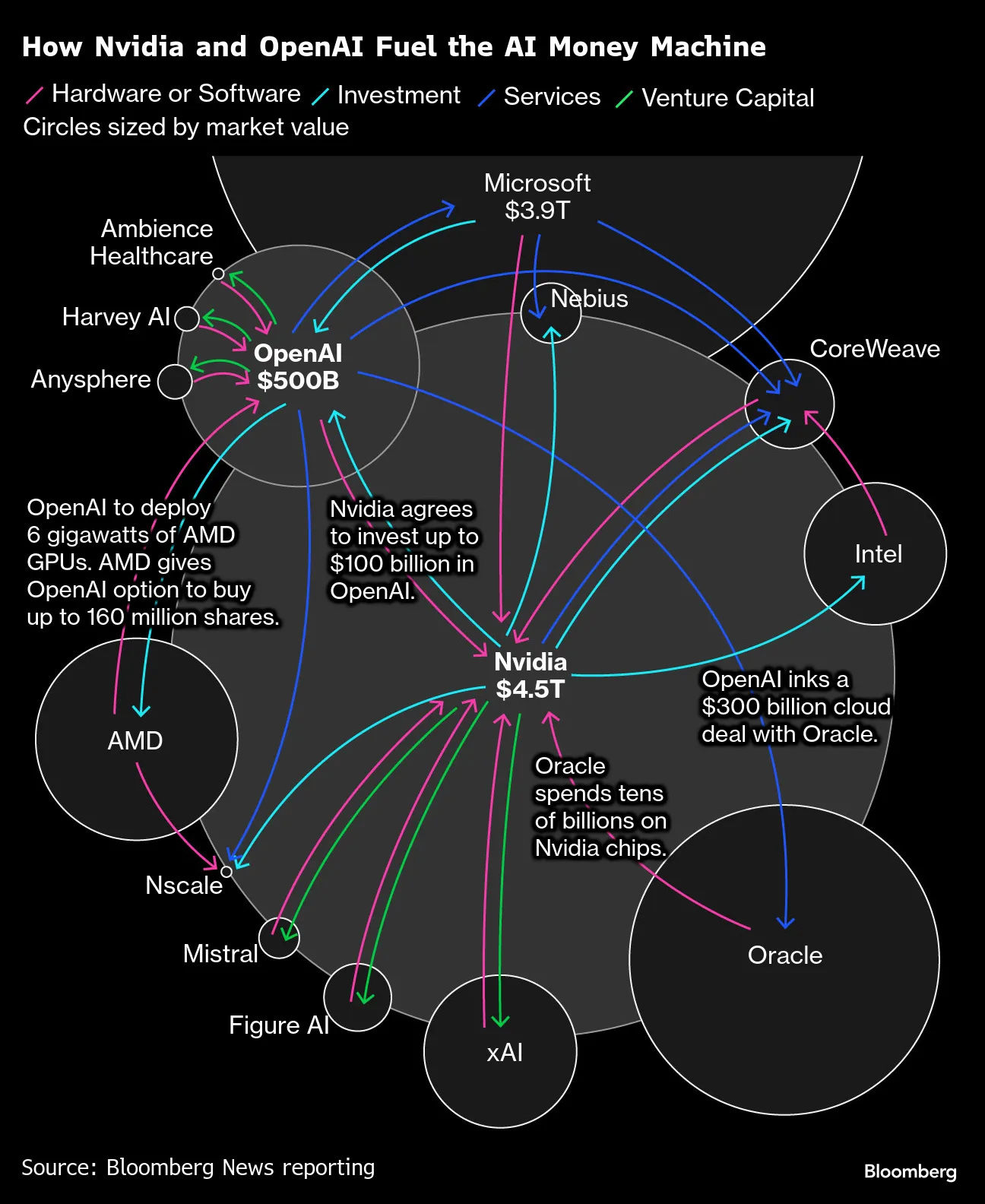

The Value Chain in Motion

The AI stack has collapsed inward. Chipmakers are moving up the chain; model developers are moving down. Cloud providers are caught in the middle.

Upstream, Nvidia reigns. It doesn’t just sell GPUs. It sells an ecosystem of CUDA, networking, and developer tools, each piece reinforcing the others and keeping its gross margins around 70%.

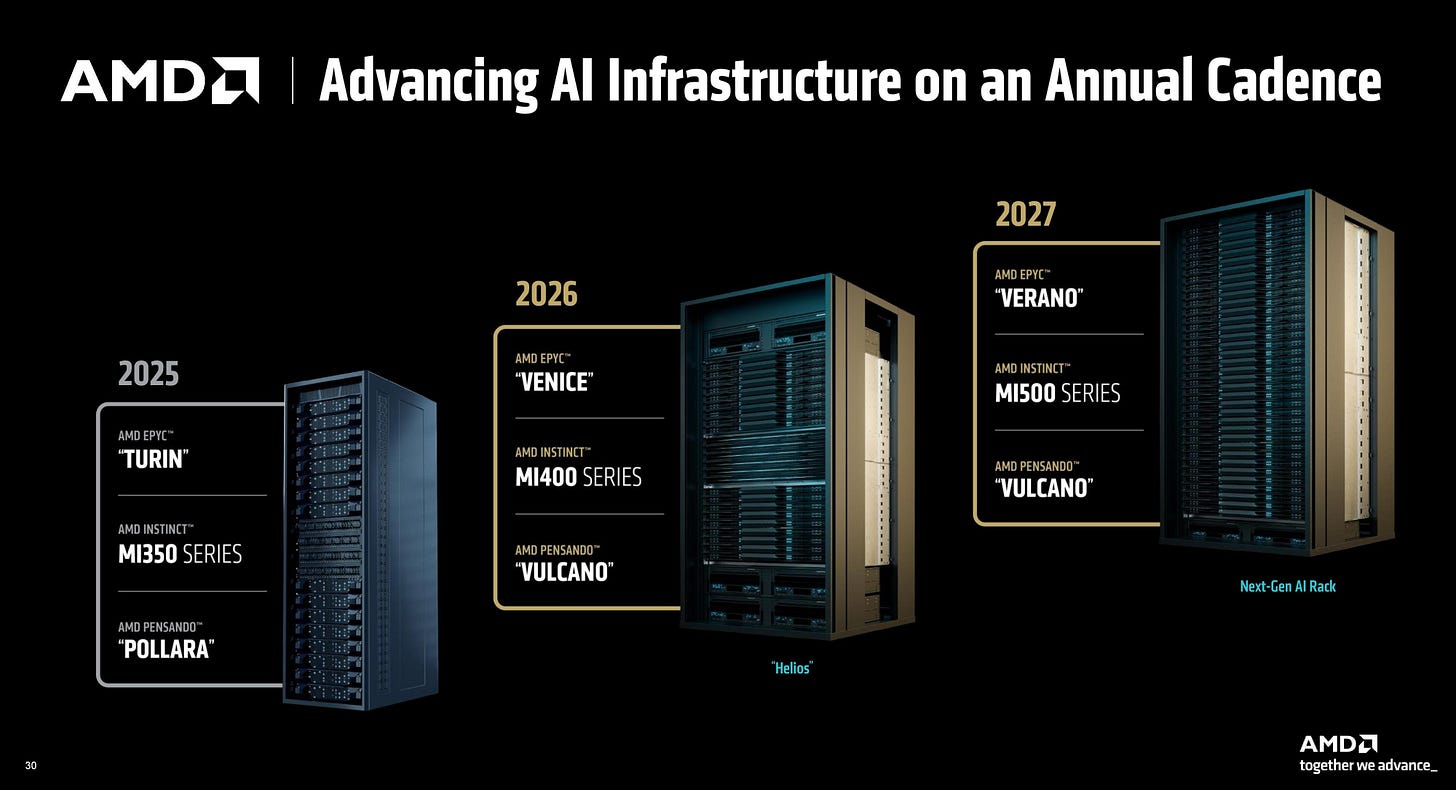

AMD plays the open alternative. Its partnership with OpenAI aligns incentives: AMD will supply up to 6 gigawatts of Instinct GPUs, and OpenAI receives a warrant to buy up to roughly 10% of AMD at one cent per share, vesting as deployment milestones are met. AMD gets a guaranteed buyer; OpenAI gets capacity and upside.

Broadcom, meanwhile, is becoming the quiet contractor of AI hardware, designing custom accelerators tailored to individual customers’ models and workloads.

Midstream, the hyperscalers are squeezed. Their AI cloud businesses depend on Nvidia hardware whose costs they can’t control. AWS is countering with Trainium, its in-house AI chip now powering most Bedrock workloads. It’s slower than Nvidia’s top GPUs but cheaper, and cost is what matters when profitability depends on utilization rather than peak performance.

Downstream, AI developers are rewriting the rules. OpenAI and Anthropic aren’t just cloud tenants anymore. They’re becoming infrastructure investors and equity partners and, in OpenAI’s case, chip designers. The line between buyer and supplier has vanished.

The Margin Chain

Every revolution hides a spreadsheet.

Oracle’s GPU business: $900 million in revenue, $125 million in gross profit, or 14% margins before depreciation. The culprit is utilization. Oracle’s data centers run at between 60% and 90% of capacity, leaving expensive GPUs idle as much as 40% of the time, and AI hardware ages quickly: every new Nvidia release devalues the last.
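The arithmetic behind those figures is simple, and a small sketch makes the utilization point concrete. The Oracle numbers come from the paragraph above; the cluster scenario is hypothetical and assumes, for illustration only, that all costs are fixed, so an idle GPU costs as much as a busy one.

```python
# A minimal sketch of GPU-rental gross margin. The Oracle figures come from
# the article; the utilization scenario uses hypothetical numbers.

def gross_margin(revenue: float, gross_profit: float) -> float:
    """Gross profit as a fraction of revenue."""
    return gross_profit / revenue


def margin_at_utilization(full_revenue: float, fixed_cost: float,
                          utilization: float) -> float:
    """Gross margin when revenue scales with utilization but costs do not.

    Hypothetical simplification: every cost (leases, power contracts,
    hardware) is treated as fixed, so idle capacity still has to be paid for.
    """
    revenue = full_revenue * utilization
    return (revenue - fixed_cost) / revenue


# Oracle's reported figures: $900M revenue, $125M gross profit.
oracle = gross_margin(900e6, 125e6)
print(f"Oracle GPU gross margin: {oracle:.1%}")  # → 13.9%

# Hypothetical cluster: $100M revenue if fully booked, $70M of fixed cost.
for u in (0.6, 0.75, 0.9, 1.0):
    m = margin_at_utilization(100e6, 70e6, u)
    print(f"utilization {u:.0%}: margin {m:.1%}")
```

With these made-up numbers, the same cluster loses money at 60% utilization and earns a 30% margin only when fully booked, which is why every idle hour matters.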

Key takeaway: The AI infrastructure economy is capital-intensive, not capital-efficient.

Nvidia alone escapes the gravity. Its hardware, software, and networking stack creates self-reinforcing lock-in: more developers on CUDA, more optimization for CUDA, and few realistic alternatives. While clouds fight for pennies, Nvidia earns dollars.

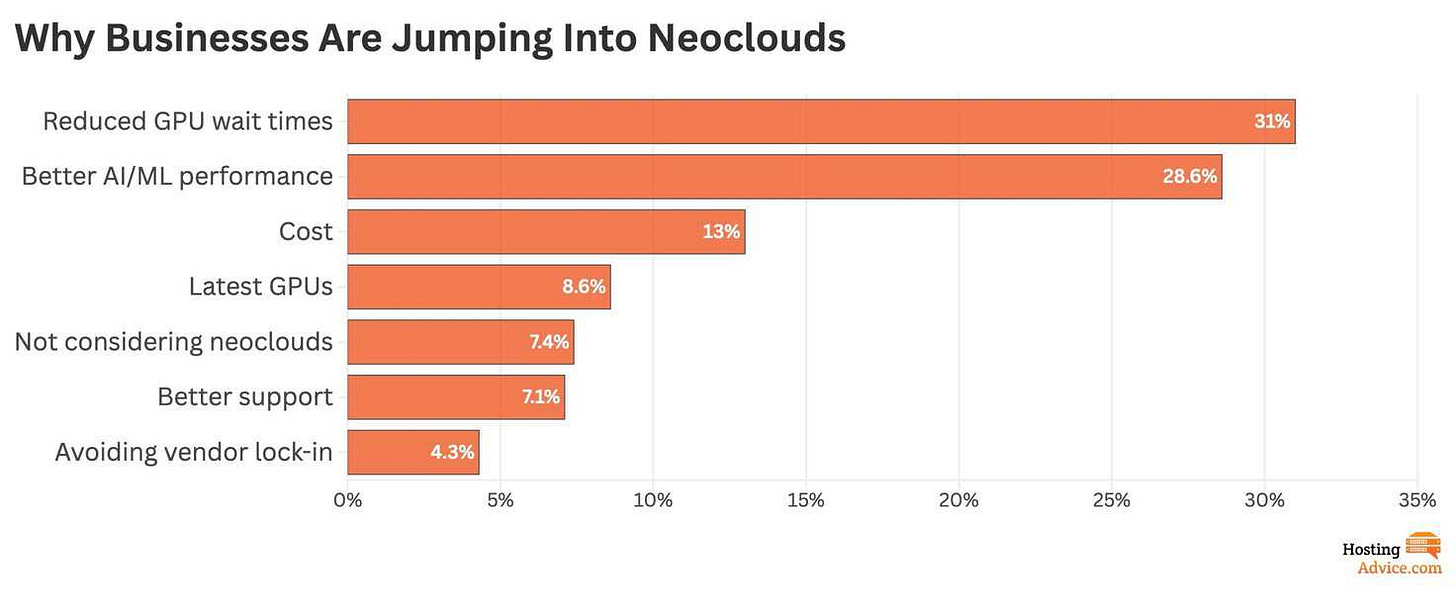

The Neocloud Play

The neoclouds—Together AI, CoreWeave, Lambda—are the industry’s margin experiment.

Rather than chase scale, they chase utilization. Together AI runs near full capacity by serving AI startups that train or fine-tune models, growing to a $300 million run rate with roughly 45% margins. Owning GPUs gives it control, but also exposure to obsolescence and capital costs.

Nvidia quietly finances these firms because they weaken the hyperscalers’ grip. Each neocloud increases competition for AWS and Azure while keeping Nvidia’s chips fully booked and in steady demand.

Example: Together’s expansion into Saudi-backed data centers shows how geopolitics now intersects with AI supply. Compute has become a strategic export.

Integration as Strategy

AI has inverted the cloud’s founding promise of “scale without hardware.” Now, owning the hardware—or the economics behind it—is the only way to stay profitable.

OpenAI’s AMD deal isn’t just a chip order; it’s vertical integration through equity. By locking in supply and future ownership upside, OpenAI turns a cost center into a balance-sheet asset.

AWS does it differently. Trainium lets it trade exposure to Nvidia’s pricing and supply for internal control. Bedrock customers never choose the chip powering their API call, giving AWS quiet leverage to optimize for margin rather than raw power.
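A toy version of that leverage: the customer calls one API, and the provider decides which hardware serves it. The sketch below is a hypothetical illustration, not how Bedrock is actually implemented; the pool names, costs, and latencies are invented. It routes each request to the cheapest pool that still meets a latency target.

```python
from dataclasses import dataclass

# Hypothetical sketch of hardware-agnostic routing. Pool names, costs, and
# latencies are invented; this does not describe Bedrock's internals.


@dataclass
class Pool:
    name: str
    cost_per_m_tokens: float  # provider's internal cost in USD (hypothetical)
    p50_latency_ms: float     # typical latency (hypothetical)
    free_capacity: int        # requests the pool can accept right now


def route(pools: list[Pool], latency_slo_ms: float) -> Pool:
    """Pick the cheapest pool with spare capacity that meets the latency target."""
    eligible = [p for p in pools
                if p.free_capacity > 0 and p.p50_latency_ms <= latency_slo_ms]
    if not eligible:
        raise RuntimeError("no pool can serve this request")
    return min(eligible, key=lambda p: p.cost_per_m_tokens)


pools = [
    Pool("gpu-pool", cost_per_m_tokens=1.00, p50_latency_ms=80, free_capacity=5),
    Pool("in-house-accelerator-pool", cost_per_m_tokens=0.60,
         p50_latency_ms=120, free_capacity=10),
]

print(route(pools, latency_slo_ms=150).name)  # in-house-accelerator-pool
print(route(pools, latency_slo_ms=100).name)  # gpu-pool
```

Because the customer only specifies what it needs, not which chip delivers it, the provider can send traffic to the cheaper in-house pool whenever that pool is fast enough.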

Oracle, by contrast, leases both its data centers and its GPUs and relies heavily on OpenAI’s contracts. Its revenues may be soaring, but its negotiating power is shrinking.

Key takeaway: The new advantage isn’t scale; it’s integration. The winners bring the most margin-dilutive layer of their own value chain in-house.

Where the Power Actually Lives

Follow the money and you get an hourglass:

At the top, model providers like OpenAI and Anthropic control demand.

At the bottom, Nvidia controls supply.

In the middle, cloud providers and neoclouds compress their own margins to connect the two.

The real contest is over control, not capacity. Nvidia controls the software standards. AWS controls workload routing. OpenAI controls its own supply chain through financial leverage.

Everyone else fights for position in the narrow middle—essential, but structurally trapped. Their inputs scale linearly; their profits don’t.

Key takeaway: In AI, value accrues to those who manage dependency, not volume.

The Economics of Constraint

AI demand is theoretically infinite. Capital isn’t.

Each breakthrough increases consumption faster than efficiency gains can offset it. Sam Altman calls it the “10× → 20×” rule: every tenfold improvement in capability drives twentyfold higher usage. The result is an industry addicted to its own cost curve.
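One way to see why efficiency gains fail to offset demand is to read the rule as a tenfold drop in the cost c of a unit of work paired with a twentyfold rise in usage q. That reading is an assumption, since the rule as quoted speaks of capability rather than cost. Under it, total spend S still doubles:

```latex
S = c \cdot q, \qquad
S' = \frac{c}{10} \cdot 20\,q = 2\,c\,q = 2S
```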

This forces every major player to become part financier, part manufacturer. Nvidia invests in neoclouds; AWS designs chips; OpenAI buys into AMD. Each is translating capex into leverage.

Key takeaway: The strongest differentiator isn’t access to GPUs—it’s the ability to finance them without eroding margins.

The New Map of AI Power

A new industrial hierarchy is forming:

Nvidia profits from selling scarcity.

AWS and Google profit from utilization.

OpenAI and Anthropic profit from turning compute into product revenue.

Oracle and the neoclouds absorb volatility for everyone else.

Every contract in this ecosystem is a negotiation over who bears the depreciation risk. When Oracle underprices GPU rentals to keep OpenAI’s business, it’s trading future margin for short-term growth. When OpenAI invests in AMD, it’s buying a hedge against its own input costs.
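Depreciation risk can be made concrete with a straight-line schedule. The sketch below uses a hypothetical $300,000 server with no salvage value at 75% utilization (all numbers invented for illustration) and computes the hourly rental price needed just to recover depreciation as the assumed useful life shrinks.

```python
# Hypothetical sketch: straight-line depreciation of one GPU server and the
# hourly rental price needed just to cover it. All inputs are invented;
# real contracts also carry power, networking, and financing costs.

HOURS_PER_YEAR = 24 * 365


def annual_depreciation(cost: float, salvage: float,
                        useful_life_years: float) -> float:
    """Straight-line: (cost - salvage) spread evenly over the useful life."""
    return (cost - salvage) / useful_life_years


def breakeven_hourly_rate(cost: float, salvage: float,
                          useful_life_years: float, utilization: float) -> float:
    """Price per billed hour that recovers depreciation at a given utilization."""
    billed_hours = HOURS_PER_YEAR * utilization
    return annual_depreciation(cost, salvage, useful_life_years) / billed_hours


# Same hypothetical server, shorter and shorter assumed lifetimes.
for life in (6, 4, 3):
    rate = breakeven_hourly_rate(300_000, 0, life, 0.75)
    print(f"{life}-year life: ${rate:,.2f} per billed hour")
```

Halving the useful life from six years to three doubles the break-even rate, so whichever party has locked in a fixed hourly price when a faster chip arrives is the one absorbing the loss.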

Key takeaway: AI’s power structure is defined less by model performance and more by financial engineering.

Value Flows, Not FLOPs

Nvidia doesn’t just sell chips—it monetizes every watt that passes through them.

AWS doesn’t just host workloads—it arbitrages its own supply chain.

OpenAI doesn’t just train models—it converts supplier relationships into equity.

The rest of the ecosystem—clouds, resellers, and neoclouds—remains caught between suppliers who charge more and customers who expect less. Their challenge isn’t scaling compute; it’s surviving its economics.

In the end, AI’s value chain isn’t about who owns the most GPUs but about who manages their depreciation best.

Final takeaway: The real power in AI isn’t measured in FLOPs or gigawatts. It’s measured by who gets paid when the chips start aging.