Sora, Shrimp Jesus, and the Future of Video

From Hollywood-grade imagination engines to uncanny AI slop, shortform video is about to change everything.

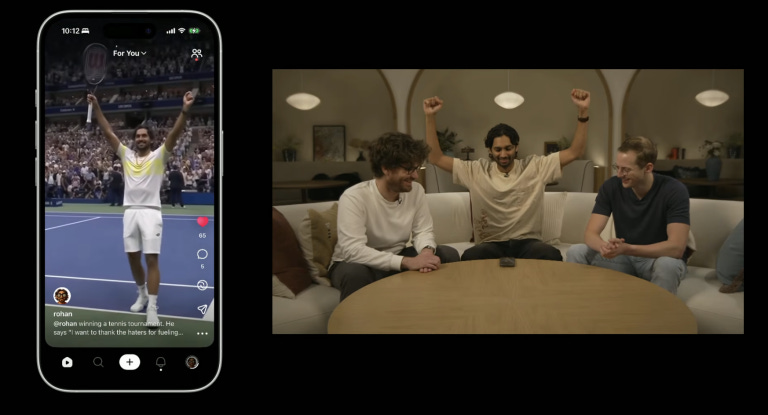

OpenAI’s Sora 2 doesn’t just generate video—it comes wrapped in a new social app built to rival TikTok. At first glance, it looks like a fun playground: drop yourself into a dragon ride with friends, remix clips, share them in a vertical feed. But underneath the spectacle sits something bigger. AI-generated video is colliding with shortform culture, and the result is a medium that’s equal parts creative frontier, deepfake factory, and engagement slop machine.

We’re entering an era where video is no longer filmed or produced in the traditional sense. It’s generated, remixed, and infinitely iterated—faster than attention spans can keep up.

The GPT-4 Moment for Video

The leap from Sora 1 to Sora 2 is as stark as the jump from GPT-1 to GPT-4. Gone are the fever-dream physics and glitchy morphs. In their place: realistic motion, synchronized audio, even accurate failure modeling. Ask Sora to show a skateboarder botching a kickflip, and it won’t “cheat” by teleporting the board back under their feet. Instead, the model lets the fall play out with believable gravity.

Then there’s Cameo. Record your face and voice once, and the system can insert you—or your dog, or your best friend—into any generated scene. You become a co-author of your likeness. You decide who can use it, revoke access at any time, and even remove yourself from videos after the fact. Sam Altman calls Sora “the most powerful imagination engine ever built.” He’s not exaggerating.

But just as important as the model itself is the decision to launch a standalone Sora app. On TikTok or Instagram, AI clips can blend invisibly with human content. The Sora app is different: every video in it is AI-generated.

That separation matters: it preserves trust, shields the creator economy, and lets OpenAI build moderation guardrails at the point of creation. It’s a product choice with deep philosophical stakes.

The Platform Gold Rush

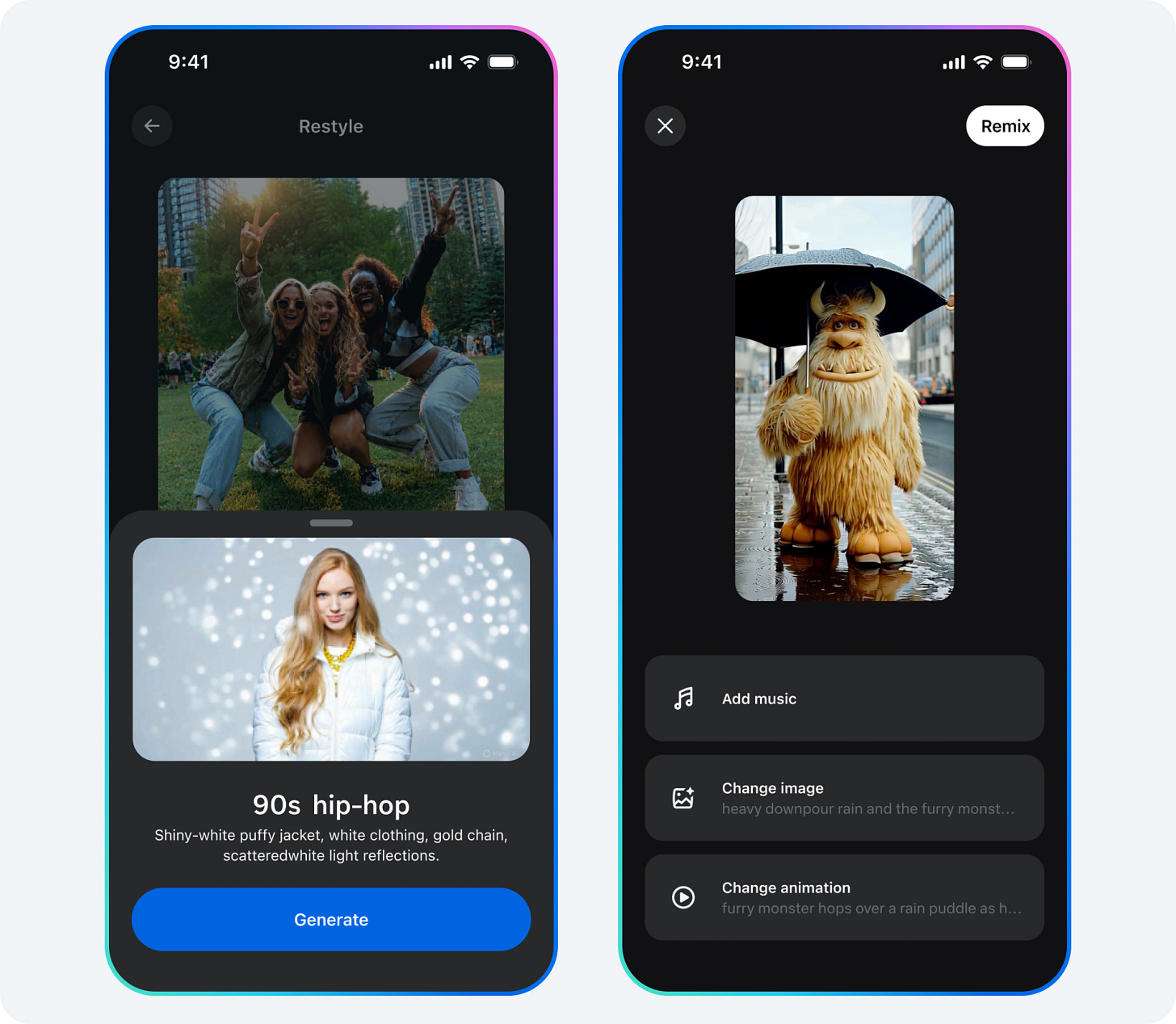

OpenAI isn’t alone. Every major platform is sprinting toward AI-first video.

Meta’s Vibes feed is a nonstop scroll of AI-generated clips, designed for remixing. The Verge called it “an infinite slop machine”—eye candy without story, novelty without substance.

YouTube Shorts will soon integrate Google’s Veo model. For now, the integration is limited to generating green-screen backgrounds, but full six-second generated clips are slated to arrive next year.

TikTok barely needed to announce anything. It’s already flooded with uncanny, often surreal AI creations: pets doing choreographed motorcycle dances, “shrimp Jesus” sightings, fake footage of the U.S. government arresting vampires.

Each platform has a different angle, but the logic is the same: AI video keeps feeds full, engagement steady, and ad impressions flowing.

When Video Becomes Slop

If you’ve spent time on TikTok or Meta’s new feeds, you’ve probably seen what critics now call AI slop. These are uncanny, repetitive clips—cheaply generated, instantly forgettable, and endlessly produced.

Why does it spread? Platforms optimize for quantity over quality. A single creator can pump out dozens of clips per day at near-zero cost. An entire “AI side-hustle” economy has emerged around this, complete with courses, prompt packs, and tutorials promising passive income. One TikTok entrepreneur reportedly made $5,500 in a single month by mass-posting AI clips across multiple accounts.

The paradox is obvious: content is effectively infinite, but human attention is not. Slop thrives in this imbalance. It’s cheap, disposable, and perfectly suited to algorithms that reward novelty over meaning.

The Fault Lines

This isn’t just about silly pet videos. AI video is surfacing a set of deep risks:

Misinformation: Only a few prompt restrictions stand between users and deepfake-style videos of public figures.

Copyright: OpenAI’s opt-out policy for training data flips the burden onto creators, forcing them to track down whether their work has been scraped. Opt-in would be the ethical choice, but it’s less convenient for model builders.

Economics: Human creators can’t compete with content produced at near-zero marginal cost. Without platform separation, AI could easily swamp existing creator economies.

Psychology: Like junk food, slop satisfies in the moment but erodes engagement over time. Novelty without narrative leaves audiences burned out.

Each of these fault lines points to the same tension: AI video is immensely powerful, but misaligned incentives risk turning it into a flood of meaningless filler.

Two Possible Futures

From here, the trajectory could fork.

In one future, AI becomes a true imagination engine. Tools like Sora unlock new forms of creative expression—friends starring in their own Hollywood-grade skits, creators remixing visual ideas as fluidly as memes. Storytelling becomes more participatory, more playful, more human.

In the other, AI devolves into slop machines. Feeds optimized for engagement drown users in disposable content. Storytelling gets replaced by spectacle. Platforms become infinite loops of empty calories.

Which future wins depends less on model capability and more on design choices: moderation policies, platform separation, economic incentives, and cultural norms.

The Attention Wars

AI video isn’t going away. It’s about to become the default format for how we consume, communicate, and play online. The real question isn’t what these models can generate but what we want to watch.

The early internet taught AI how to read and write. Social media taught it how to speak our language. Now, shortform video is teaching AI how to entertain—and distract—us at scale.

The battle isn’t over realism. It’s over attention, and whether imagination engines can coexist with the slop machines they inevitably enable.