Manus: Is the Hype Justified?

Manus promises a revolutionary autonomous agent, but critics say it's just a clever repackaging of existing tech

If you’ve spent any time in the tech ecosystem recently, you’ve likely heard of or come across Manus. It’s the latest AI development causing a stir, promising to be the “DeepSeek moment” for fully autonomous agentic systems. Developed by Chinese startup Monica, Manus is an ambitious multi-agent system that claims to rival OpenAI’s Operator and Anthropic’s Computer Use.

Depending on who you ask, Manus is either the future of AI automation or a glorified UI layer over existing models. Let’s dig into what Manus actually does, where the hype is coming from, and whether this is a turning point in agentic system development—or just another case of repackaging existing technology.

What Actually Is Manus?

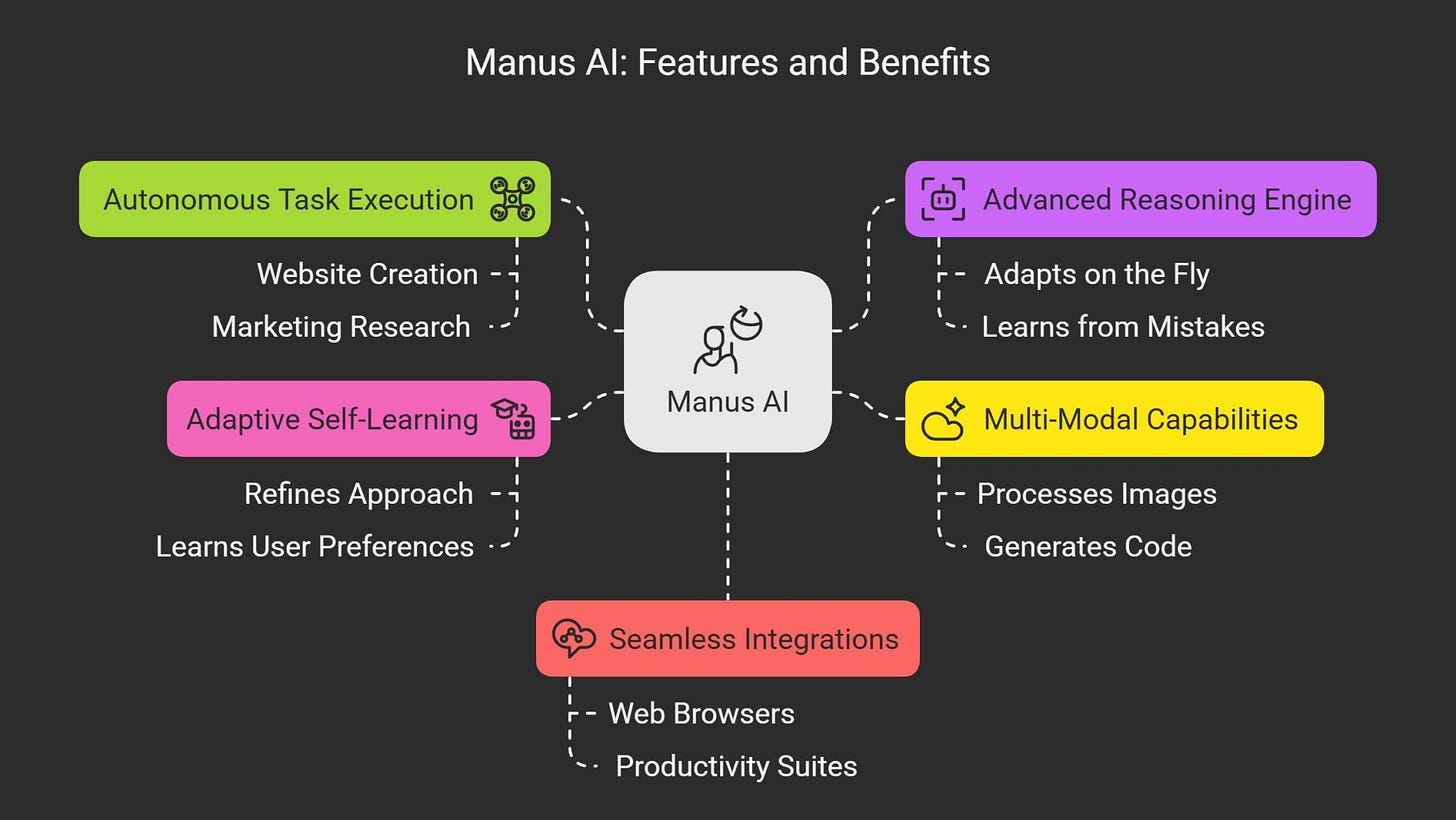

Manus bills itself as a fully autonomous agent that can take in high-level tasks and execute them independently—without requiring constant human oversight. Think of it as an AI-powered digital assistant, but instead of just answering questions, it can supposedly perform complex multi-step workflows, such as:

Generating full-fledged research papers

Designing and executing marketing campaigns

Developing and deploying software applications

Creating interactive educational content

Manus operates asynchronously in a cloud-based virtual environment, meaning users can assign it tasks and step away while it completes them autonomously. It integrates with multiple tools and APIs, enabling web browsing, automation, and data analysis.
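To make the "assign it and step away" idea concrete, here is a minimal sketch of an asynchronous hand-off in Python. Nothing here reflects Manus's actual API: `run_agent_task` and `submit_task` are hypothetical names, and a local thread pool stands in for the cloud environment.

```python
import time
from concurrent.futures import Future, ThreadPoolExecutor


def run_agent_task(description: str) -> str:
    """Hypothetical stand-in for a long-running agent job in the cloud."""
    time.sleep(0.2)  # simulates work that would really take minutes
    return f"report for: {description}"


def submit_task(pool: ThreadPoolExecutor, description: str) -> Future:
    """Hand the task off and return immediately with a handle to check later."""
    return pool.submit(run_agent_task, description)


if __name__ == "__main__":
    with ThreadPoolExecutor(max_workers=1) as pool:
        handle = submit_task(pool, "compare three CRM vendors")
        # The caller is not blocked here and can do other work.
        print("finished yet?", handle.done())
        # Block only at the point where the result is actually needed.
        print(handle.result())
```

The point of the pattern is that submitting a task and collecting its result are two separate moments, which is what lets a user walk away in between.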

This is a step beyond standard chatbot assistants, which rely on a single neural network and require user intervention to perform anything beyond basic text-based responses.

The Multi-Agent Architecture: Revolutionary or Just Good Engineering?

One of Manus’s key selling points is its multi-agent architecture. Instead of a single monolithic AI model, Manus functions like a project manager overseeing a team of specialized sub-agents:

Executor Agent: Manages task execution, delegating work to sub-agents.

Planner Agent: Breaks down high-level tasks into actionable steps.

Knowledge Agent: Retrieves relevant data and synthesizes information.

Developer Agent: Writes and executes code within a sandboxed environment.

When a user assigns a task, Manus divides it into smaller, easier-to-tackle chunks and hands those chunks to the specialized agents, supervising their progress along the way.

In theory, this structure allows Manus to be far more flexible and capable than traditional AI assistants. However, it’s worth noting that this is not a novel approach in AI research—multi-agent frameworks have been studied for years.

What Manus has done is bundle this approach into a productized system that integrates multiple existing AI models (primarily Anthropic’s Claude and fine-tuned Alibaba Qwen models).
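The project-manager pattern described in this section can be sketched in a few lines of Python. This is a toy illustration, not Manus's implementation: the agent names come from the list above, but every function, the `Step` type, and the `SUB_AGENTS` routing table are assumptions, and plain string-returning functions stand in for real LLM calls.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    agent: str        # name of the sub-agent that should handle this step
    instruction: str  # what that sub-agent is asked to do


def planner(task: str) -> List[Step]:
    """Toy planner: a real one would ask an LLM to decompose the task."""
    return [
        Step("knowledge", f"gather background for: {task}"),
        Step("developer", f"write code for: {task}"),
    ]


def knowledge_agent(instruction: str) -> str:
    """Placeholder for retrieval and synthesis."""
    return f"[knowledge] notes on '{instruction}'"


def developer_agent(instruction: str) -> str:
    """Placeholder for code generation in a sandbox."""
    return f"[developer] script for '{instruction}'"


# Hypothetical routing table from agent name to handler.
SUB_AGENTS: Dict[str, Callable[[str], str]] = {
    "knowledge": knowledge_agent,
    "developer": developer_agent,
}


def executor(task: str) -> List[str]:
    """Plan the task, then route each step to the matching sub-agent."""
    results = []
    for step in planner(task):
        handler = SUB_AGENTS[step.agent]
        results.append(handler(step.instruction))
    return results


if __name__ == "__main__":
    for line in executor("plot monthly sales"):
        print(line)
```

Seen this way, the orchestration layer is mostly routing and bookkeeping, which is why critics argue that the hard part lives in the underlying models rather than in the wrapper.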

The “Claude Wrapper” Controversy

Despite the hype, Manus has been criticized for being little more than a wrapper around existing LLMs. One X user managed to jailbreak Manus and discovered that its core intelligence is driven by Anthropic’s Claude, supplemented with 29 integrated tools. Additionally, Manus uses an open-source browsing tool called Browser-Use, allowing it to interact with web pages autonomously.

Shortly after the jailbreak went viral, the founder of Manus actually jumped into the conversation and offered a pretty detailed explanation addressing the original poster’s critiques.

This raises an important question: Is Manus actually innovating, or is it just a well-optimized orchestration layer over existing models?

This question isn't unique to Manus or Chinese AI companies. The entire AI industry has an evaluation problem. When capabilities are presented through carefully controlled demos rather than open testing, and when systems are tuned to score well on benchmarks that may not reflect real-world utility, how can users or investors make informed decisions?

There’s nothing inherently wrong with being a “wrapper”—many successful AI products (including OpenAI’s ChatGPT plugins) function similarly. The real test for Manus is whether its orchestration system offers genuine value beyond what users could achieve by manually stitching together existing LLMs and automation tools.

Hype vs. Reality: Early Performance and Issues

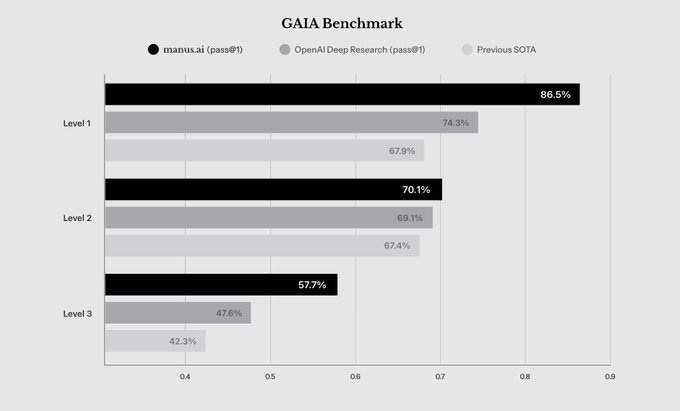

So far, Manus has demonstrated impressive benchmark results. It achieved state-of-the-art (SOTA) performance on the GAIA benchmark, outperforming OpenAI’s Deep Research agent.

I’ve been seeing some pretty impressive work from people across the web as well. I think we’ve only scratched the surface of what’s possible with these types of advanced agentic systems.

Coding a three.js game where you control a plane - @victormustar

Having Manus work as your real estate agent - @rowancheung

However, real-world user experiences paint a more nuanced picture:

Glitches and looping errors: Some users report that Manus gets stuck in repetitive task loops when dealing with complex workflows.

Execution delays: While Manus can complete multi-step tasks, it often takes significantly longer than expected, making it impractical for time-sensitive work.

Scaling concerns: Early testers encountered server overloads, crashes, and system instability, suggesting that Manus may struggle with large-scale deployments.

Moreover, Manus’s per-task cost is reportedly around $2, roughly one-tenth of what OpenAI’s Deep Research costs. While this makes it financially attractive, it remains unclear whether its performance will hold up as more users gain access.
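For a sense of scale, the "one-tenth" claim can be turned into rough numbers. The sketch below takes the $2 estimate at face value and treats the 10x multiple as exact. Both are assumptions, and the 50-tasks-a-month workload is purely hypothetical.

```python
MANUS_COST_PER_TASK_USD = 2.0  # the rough estimate quoted above
DEEP_RESEARCH_MULTIPLE = 10    # "one-tenth" implies a 10x multiple


def implied_deep_research_cost(
    manus_cost: float = MANUS_COST_PER_TASK_USD,
    multiple: int = DEEP_RESEARCH_MULTIPLE,
) -> float:
    """Per-task cost implied for the competitor by the one-tenth claim."""
    return manus_cost * multiple


def monthly_spend(cost_per_task: float, tasks_per_month: int) -> float:
    """Total spend for a given workload at a flat per-task cost."""
    return cost_per_task * tasks_per_month


if __name__ == "__main__":
    tasks = 50  # hypothetical workload
    print(monthly_spend(MANUS_COST_PER_TASK_USD, tasks))
    print(monthly_spend(implied_deep_research_cost(), tasks))
```

Under those assumptions the implied competitor cost is about $20 per task, so the gap grows linearly with usage.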

The Broader Implications for AI Automation

Regardless of whether Manus is a true breakthrough or just clever packaging, its rapid rise signals an important shift in the AI landscape. Chinese AI startups are no longer content to follow in the footsteps of Western companies—they are shaping the conversation around autonomous agents in their own way.

If you're running a business that might use or compete with AI tools like Manus, here are the take-home points:

Agent capabilities are becoming commoditized faster than expected. The technical barrier to building Manus-like systems isn't as high as initial reports suggested.

User experience matters enormously. Manus gained traction partly because its interface makes complex AI interactions more accessible and intuitive.

Asynchronous operation is a killer feature. The ability to assign a task and check back later for results fundamentally changes how people can incorporate AI into workflows.

Price compression is coming. If Manus can deliver comparable (or superior) agent capabilities at 10% of competitors' prices, expect rapid price adjustments throughout the market.

Marketing still leads technology. The narrative around AI capabilities continues to run ahead of reality, creating both opportunities and risks for early adopters.

Final Thoughts: Is Manus the Future?

The most provocative question isn't whether Manus lives up to its hype today, but what it reveals about the trajectory of the AI industry:

The agent era is arriving faster than expected. The ability to act independently in digital environments is becoming a standard feature rather than a distant promise.

Costs will plummet. If rumors are correct that OpenAI plans to charge $2,000 to $20,000 a month for advanced agent subscriptions, Manus's $2-per-task pricing suggests massive price compression lies ahead.

Transparency will become a competitive differentiator. As users become more sophisticated, they'll demand better information about what systems can actually do, not just what they claim to do.

Multi-agent architectures will become standard. The approach of specialized sub-agents coordinated by a supervisor is likely to become the default for complex task handling.

Ultimately, Manus matters not because it represents a fundamental breakthrough, but because it challenges assumptions about what's possible at what price point. It shows that the barriers to building capable AI agents are lower than many believed, and that the next competitive frontier lies in packaging, pricing, and user experience rather than raw model capabilities.

Whether Manus itself succeeds long-term is almost beside the point. The cat is out of the bag: AI agents that can operate independently are coming to market faster and cheaper than expected, and businesses that aren't preparing for this reality risk being blindsided by the acceleration.