Inside the Race to Keep AI’s Lights On

AI is scaling fast, but the infrastructure it needs is falling behind

In every major technological shift, the real story hides behind the interface. Railroads weren’t about locomotives; they were about steel, timber, and syndicated capital.

The internet wasn’t about websites; it was about fiber optics, data centers, and server farms. AI is no different. It may feel like a software revolution, but its future will be shaped by electricity and infrastructure.

We’re entering a new economic era, and AI is its engine. To understand where it’s going, we need to stop thinking in terms of code and start thinking in terms of concrete.

From Cloud to Compute: A New Infrastructure Layer

The cloud era taught us to scale elastically. Providers like AWS, Azure, and Google Cloud expanded rapidly, driven by a simple formula: more users created more data, which required more servers, and larger volumes brought costs down. This model worked well because cloud workloads ran on CPUs, which improved steadily in efficiency. You could pack more performance into each rack without a proportional increase in power.

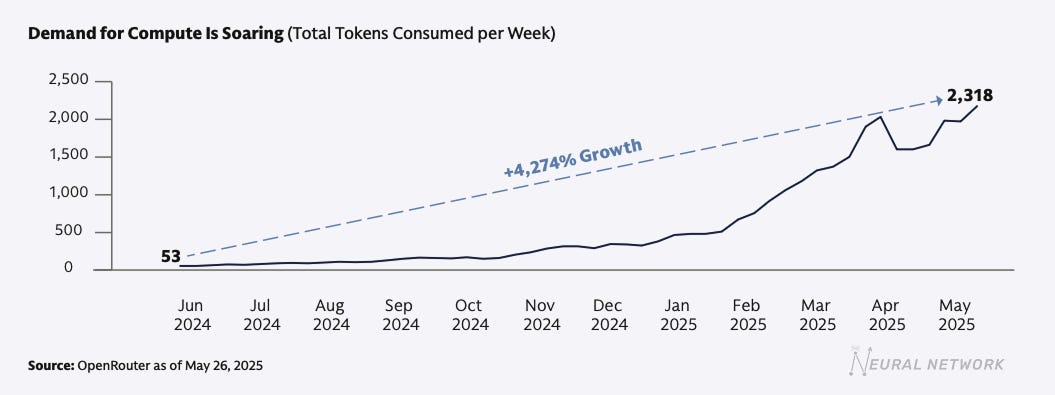

AI upended that model.

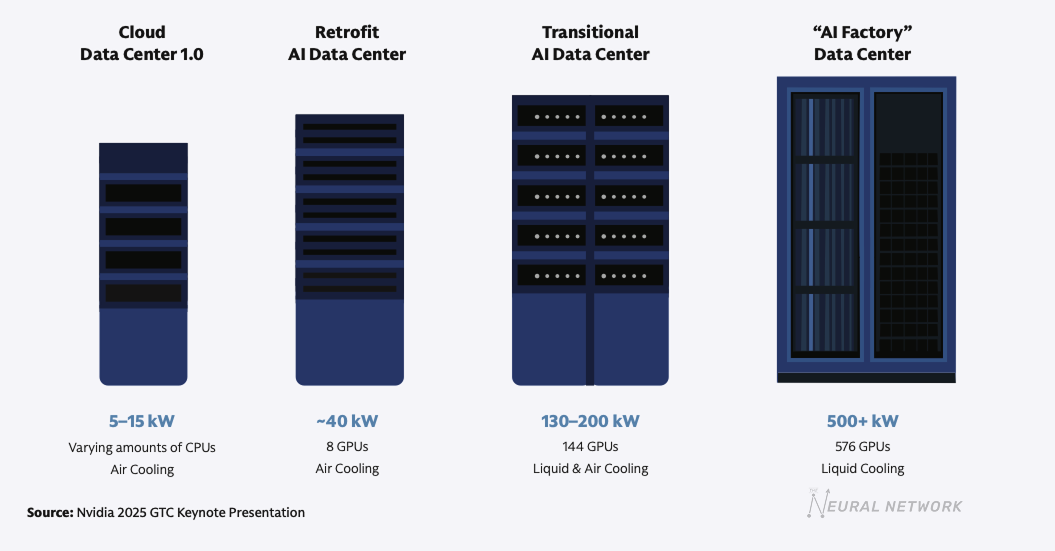

Training large foundation models like GPT-4 or Claude demands compute at an entirely different scale. A single AI server rack today consumes up to 50 times more power than a typical cloud rack from just a few years ago. These aren’t just upgraded machines. They are densely packed GPU clusters that generate immense heat, requiring advanced liquid cooling systems to stay operational.

We’ve moved from hyperscaling to hypersaturating.

Inference adds another layer of complexity. As AI is deployed into real-time user applications, latency becomes critical. That requires infrastructure closer to the user, even as training continues in remote areas optimized for cheap power and open space. The result is a sprawling physical footprint, with inference capacity pushing toward users at the network edge and training capacity pushing outward into low-density regions.

This isn’t just a technical transition. It’s a financial one.

AI Isn’t Replacing the Cloud. It’s Expanding It.

Rather than cannibalizing cloud budgets, AI is increasing them. Hyperscalers are expected to invest more than $1 trillion in AI infrastructure by 2027. Alongside them, a new class of “neocloud” players—AI-native infrastructure startups—is spending heavily to compete.

The economics have shifted. In the cloud era, most of the capital went toward the building itself: land, cooling, and power. Today, the hardware inside an AI data center often costs three to four times more than the structure around it. These assets are also harder to underwrite, because the pace of innovation has shortened the usable life of AI hardware to a few years at best.

This creates a new challenge for investors and lenders: how to finance assets that are both expensive and rapidly evolving.
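To make that financing problem concrete, here is a minimal back-of-the-envelope sketch. It is a hypothetical model, not industry data: only the 3x to 4x hardware-to-building cost ratio comes from the text above, while the 4-year hardware life, the 20-year building life, straight-line depreciation, and the `capex_profile` helper itself are illustrative assumptions.

```python
# Hypothetical capex model. Only the 3x-4x hardware-to-building cost ratio
# comes from the article; lifetimes and straight-line depreciation are assumptions.


def capex_profile(shell_cost: float, hw_ratio: float,
                  hw_life_years: float, shell_life_years: float) -> dict:
    """Split upfront capex and annual straight-line depreciation between
    IT hardware and the building shell (land, power, cooling)."""
    hw_cost = shell_cost * hw_ratio              # hardware costs hw_ratio x the shell
    total_capex = shell_cost + hw_cost
    hw_dep = hw_cost / hw_life_years             # short-lived GPUs and servers
    shell_dep = shell_cost / shell_life_years    # long-lived building
    return {
        "hardware_share_of_capex": hw_cost / total_capex,
        "hardware_share_of_depreciation": hw_dep / (hw_dep + shell_dep),
        "annual_depreciation": hw_dep + shell_dep,
    }


if __name__ == "__main__":
    # Normalize the building cost to 1.0 and assume a 3.5x hardware ratio,
    # a 4-year hardware life, and a 20-year building life (all hypothetical).
    profile = capex_profile(shell_cost=1.0, hw_ratio=3.5,
                            hw_life_years=4, shell_life_years=20)
    for name, value in profile.items():
        print(f"{name}: {value:.2f}")
```

With these assumed inputs, hardware is roughly 78% of upfront capital but roughly 95% of annual depreciation. The asymmetry illustrates why the useful life of the equipment, not the building, tends to dominate the underwriting question.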

The Power Constraint

This brings us to the most pressing limitation: electricity.

While AI races ahead, the U.S. power grid remains stuck in the past. Much of the infrastructure is over 40 years old. After a decade of flat demand, electricity use is climbing again, and data centers are the main driver: their power demand is projected to grow 160% by 2030. Nearly 60% of that demand will need to be met with new power generation.
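Taken together, those two projections imply a striking amount of new supply. The worked reading below is a rough sketch under two stated assumptions: that the 160% figure applies to data center demand, with today's level written as the hypothetical symbol $D_0$, and that the 60% share applies to the added demand rather than to the total.

```latex
\begin{aligned}
D_{2030} &= D_0\,(1 + 1.60) = 2.6\,D_0 \\
\Delta D &= D_{2030} - D_0 = 1.6\,D_0 \\
G_{\text{new}} &\approx 0.60 \times \Delta D = 0.96\,D_0
\end{aligned}
```

Under these assumptions, the new generation required ($G_{\text{new}}$) would be roughly equal to everything data centers draw today.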

Capital isn’t the issue. Power is.

Utilities are already behind. Natural gas is resurging, while wind and solar continue to expand. But renewables are intermittent, and energy storage has yet to catch up. Nuclear energy is returning to the conversation, but high costs and long lead times limit its near-term viability. Even previously shuttered plants like Three Mile Island Unit 1 are being brought back online to help meet the need.

Faced with these challenges, some companies are taking matters into their own hands.

Behind the Meter: Controlling the Energy Supply

Many hyperscalers and infrastructure developers are moving "behind the meter"—generating their own power or buying it directly from nearby plants. This strategy cuts interconnection delays and offers greater control over energy reliability.

Turnkey providers like PowerSecure and VoltaGrid are enabling this approach. During the 2021 Texas winter storm, PowerSecure’s microgrids supplied more than 2 GWh of backup energy while the main grid failed. In the AI era, that level of resilience is no longer optional.

Still, these setups come with trade-offs. Projects like xAI’s Colossus facility in Memphis have faced local backlash over pollution concerns. And efforts to colocate data centers with nuclear plants have sometimes been blocked by regulators, citing risks to ratepayers and local infrastructure.

Nonetheless, energy autonomy is quickly becoming a strategic advantage.

The Geopolitics of Data Centers

Unlike oil fields, which occur naturally, data centers can be built anywhere power, land, and policy align. That makes them useful geopolitical assets.

Nations that host AI infrastructure gain more than economic growth. They strengthen strategic alliances and increase their influence in the global digital economy. Brazil, for example, where about 90 percent of electricity comes from renewable sources, is becoming a hub for AI infrastructure in Latin America.

At the same time, cross-border expansion introduces new risks. Export controls, data localization mandates, and regulatory changes complicate international deployments. U.S. hyperscalers may lead in model development, but their ability to scale globally now depends as much on diplomacy as on technology.

Data centers are quickly becoming as important as embassies.

Rewriting the Capital Stack

At the center of all this is capital—and a lot of it.

Global infrastructure investment needs are staggering. Digital infrastructure alone will require an estimated $2 trillion by 2030. Add $3 trillion for power and utilities, and $12 trillion for the broader energy transition.

To meet these needs, capital formation is evolving. Joint ventures are combining real estate capital, sovereign wealth funds, and long-term corporate leases. Stabilized data centers are being securitized and sold as single-asset bonds. Insurance firms, pension funds, and even retail investors are gaining exposure.

Goldman Sachs, for example, recently launched a Capital Solutions Group to integrate advisory, origination, and capital deployment across both public and private markets. The goal is to reduce friction and match investors with the right part of the infrastructure lifecycle.

These are no longer niche financings. They are the backbone of the next economy.

Final Thought: AI Is Becoming a Utility

The next phase of AI won’t be won by whoever has the smartest model. That phase is already over. GPT-4, Claude, Gemini—they’re all impressive and largely interchangeable.

What matters now is what comes after the model. Electricity. Hardware. Real estate. Supply chains. Capital markets.

AI is becoming a utility. And like any utility, it depends on infrastructure. The companies that win won’t just build smarter models—they’ll master systems thinking across compute, power, and finance.

They’ll treat power contracts as product inputs.

They’ll build with watts in mind, not just weights.

And they’ll understand that in the AI era, the most important innovation may be knowing how to keep the lights on.