Fast, Cheap, and Uneven: Three Truths About the Next Wave of AI

How intelligence became abundant, why measurement matters, and where progress stalls.

A few years ago, intelligence was the rarest resource in the digital economy. Companies hired armies of analysts and engineers to answer questions, write code, and make decisions that—today—fit inside a chat window.

The next wave of AI isn’t about access to intelligence anymore. It’s about what happens when intelligence itself becomes fast, cheap, and everywhere—and when progress stops being smooth.

Three ideas capture this shift:

Intelligence becomes a commodity

Verifier’s Law: Measurement drives progress

The jagged edge of intelligence: Some domains leap forward, others lag

Intelligence Becomes a Commodity

Every new AI capability follows a familiar pattern.

Stage 1: Frontier pushing. Researchers unlock a new ability—solving competition math problems, reasoning across documents, composing music.

Stage 2: Commoditization. Once that ability exists, the cost to access it falls toward zero.

That second stage is now accelerating. Reasoning models like OpenAI’s o1 popularized adaptive compute, spending more computation at inference time on hard problems and less on easy ones. Instead of spending the same effort on every query, intelligence flexes like a muscle: efficient on simple tasks, deliberate on complex ones.

The result: the cost per solved problem is collapsing even without larger models, reshaping what it means to “own” intelligence.
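o1’s internal mechanism isn’t public, so the Python sketch below illustrates adaptive compute with a different, well-known technique: self-consistency sampling with early stopping. A query keeps drawing answers only until they agree, so easy questions consume a few samples and hard ones consume the whole budget. The `sample` callable, the thresholds, and the two solver stubs are hypothetical stand-ins for a model, not any real API.

```python
from collections import Counter
from itertools import cycle
from typing import Callable, Tuple

def adaptive_answer(sample: Callable[[], str], min_samples: int = 3,
                    max_samples: int = 32, agreement: float = 0.8) -> Tuple[str, int]:
    """Sample answers until one clearly dominates or the budget runs out.

    Returns the majority answer and the number of samples (compute) spent.
    """
    votes: Counter = Counter()
    for n in range(1, max_samples + 1):
        votes[sample()] += 1
        answer, count = votes.most_common(1)[0]
        # Stop early once enough samples agree: easy queries end here.
        if n >= min_samples and count / n >= agreement:
            return answer, n
    # Hard queries exhaust the budget and fall back to a plain majority vote.
    return votes.most_common(1)[0][0], max_samples

# Hypothetical stand-ins for a model: one answers consistently, one keeps disagreeing.
def easy_solver() -> str:
    return "4"

_hard_answers = cycle(["17", "19", "23"])

def hard_solver() -> str:
    return next(_hard_answers)

print(adaptive_answer(easy_solver))  # ('4', 3)
print(adaptive_answer(hard_solver))  # spends all 32 samples
```

Compute per query then tracks difficulty: the consistent stub stops after three agreeing samples, while the disagreeing stub spends the full budget.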

The Collapse of Time-to-Knowledge

Knowledge has entered an age of compression.

Before the internet, finding a niche fact meant hours of searching through books.

The internet reduced that to minutes.

Chatbots cut it to seconds.

And now, agentic systems that can browse, click, and query databases are collapsing that final layer of friction—delivering contextual answers almost instantly.

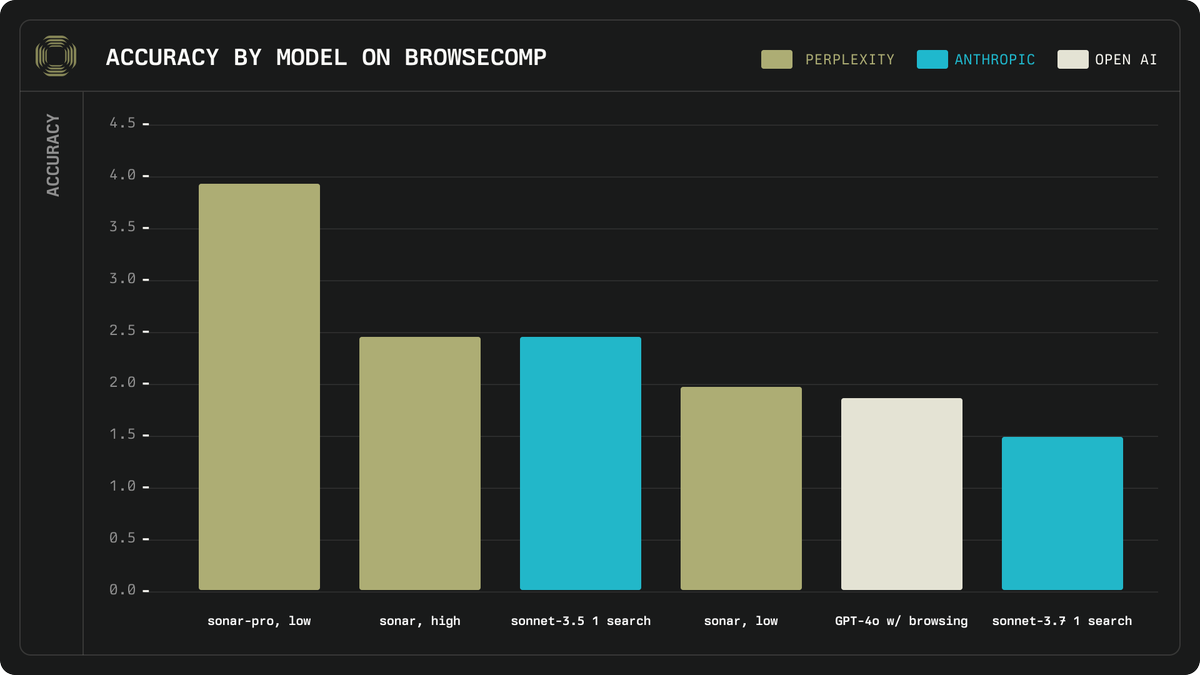

Benchmarks like BrowseComp capture this shift in quantitative form. They measure tasks that might take humans hours to research but only seconds to verify once the answer is found. Agentic systems can already complete a significant share of them autonomously.

What was once search is becoming verification: knowing not just where to look, but how to confirm correctness at machine speed.

This is where AI progress is heading—time-to-knowledge approaching zero.

The value chain is shifting from retrieval to reasoning, and from discovery to validation.

In a world where finding information costs almost nothing, the true differentiator becomes how confidently you can trust it.

The New Scarcity

As intelligence becomes abundant, information stops being the bottleneck. What matters now is:

Context — knowing which question to ask

Data — owning signals that aren’t publicly available

Trust — verifying that what’s generated is correct

In other words: public knowledge is free; private knowledge is priceless.

That shift explains why startups are embedding LLMs into proprietary data stacks, and why even small teams are building “personalized internets”—systems that reason over private, contextual information instead of generic web results.

The cloud made compute abundant. Agents will make intelligence abundant. What remains scarce is meaning.

The world’s next competitive edge won’t come from knowing more, but from knowing what matters.

Verifier’s Law — Measurement Drives Progress

Here’s the simple rule shaping AI’s trajectory:

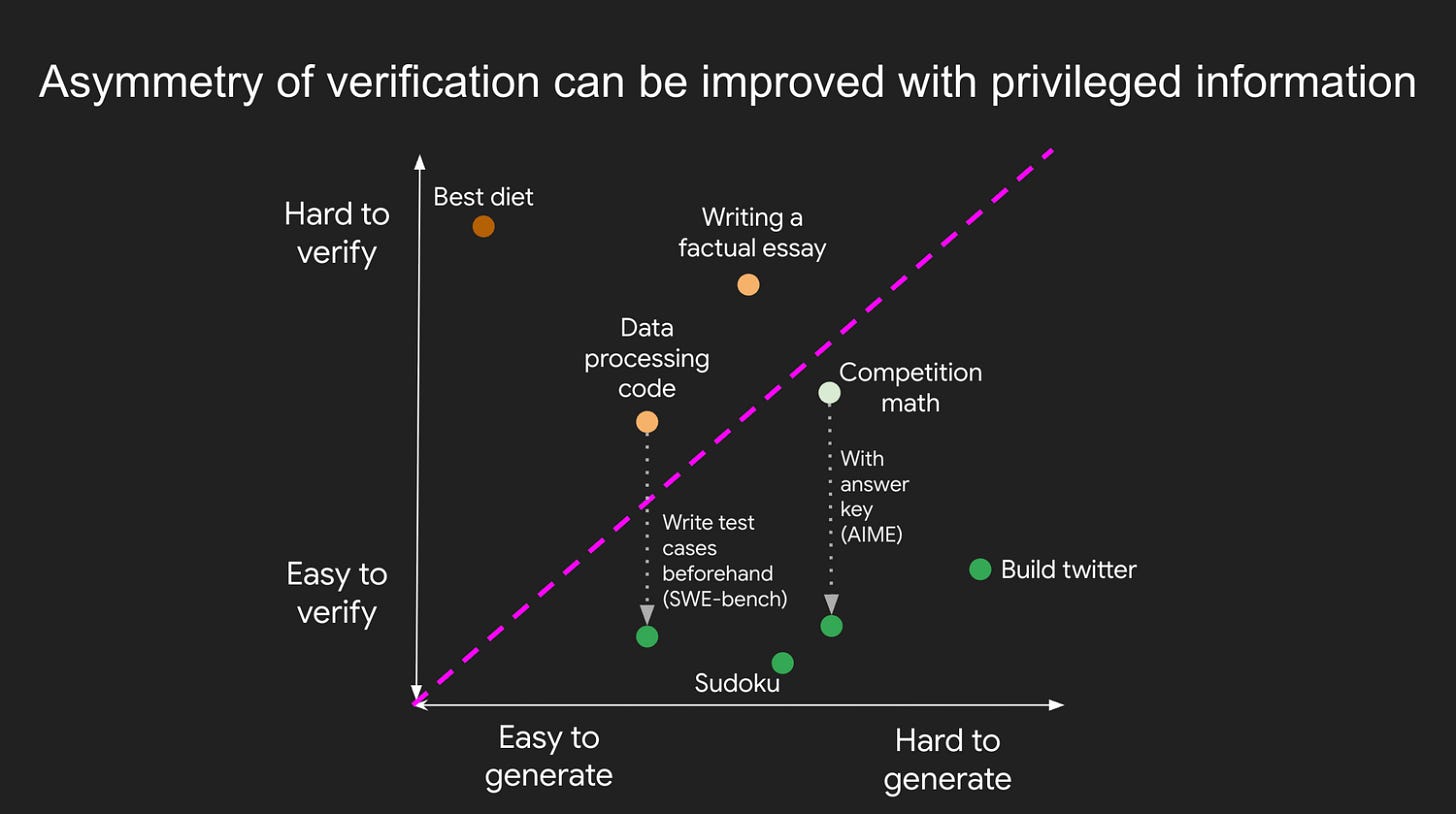

The rate of AI progress on a task is proportional to how easily that task can be verified.

This principle explains why AI now matches or beats most humans at competitive coding, Sudoku, and competition math, yet still hallucinates in essays or medical advice. Code and math have clear verifiers: there’s a right answer, a quick test, and a feedback loop that runs at machine speed.

When verification is strong, progress compounds. When it’s weak, models stall.
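For code, a verifier can be as simple as running a candidate against test cases. A minimal sketch, assuming a hypothetical task (“return the larger of two numbers”) and hand-written tests; the `verify` helper and both candidates are illustrative, not from any library:

```python
from typing import Callable, List, Tuple

TestCase = Tuple[tuple, object]  # (arguments, expected result)

def verify(candidate: Callable, tests: List[TestCase]) -> bool:
    """Binary verifier: True only if the candidate passes every test."""
    for args, expected in tests:
        try:
            if candidate(*args) != expected:
                return False
        except Exception:
            return False  # a crash counts as a failure
    return True

# Hypothetical candidates for "return the larger of two numbers".
def candidate_a(x, y):
    return x if x > y else y

def candidate_b(x, y):
    return x  # plausible-looking but wrong

tests = [((1, 2), 2), ((5, 3), 5), ((-1, -1), -1)]
print(verify(candidate_a, tests))  # True
print(verify(candidate_b, tests))  # False
```

The answer is a clean yes or no and comes back almost instantly, which is exactly the kind of signal a training or search loop can consume at scale.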

The Asymmetry of Creation vs. Verification

Some tasks are hard to create but easy to check.

Writing a working function or proving a theorem takes skill, but correctness is binary.

Others are easy to create but hard to check.

Writing an essay or designing a logo is fast, but quality is subjective.
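Subset sum is a classic illustration of this asymmetry: finding a subset of numbers that adds up to a target has no known polynomial-time algorithm in general, while checking a proposed answer takes one pass. The sketch below uses naive brute force for the search side (smarter algorithms exist, but none is known to be polynomial in general); the function names and example numbers are illustrative.

```python
from itertools import combinations
from typing import List, Optional, Tuple

def find_subset(nums: List[int], target: int) -> Optional[Tuple[int, ...]]:
    """Creation: brute-force search over up to 2**n index subsets."""
    for r in range(len(nums) + 1):
        for combo in combinations(range(len(nums)), r):
            if sum(nums[i] for i in combo) == target:
                return combo
    return None

def check_subset(nums: List[int], target: int, indices: Tuple[int, ...]) -> bool:
    """Verification: one linear pass over the proposed indices."""
    return (len(set(indices)) == len(indices)
            and all(0 <= i < len(nums) for i in indices)
            and sum(nums[i] for i in indices) == target)

nums = [3, 34, 4, 12, 5, 2]
answer = find_subset(nums, 9)
print(answer)                          # (2, 4), i.e. 4 + 5
print(check_subset(nums, 9, answer))   # True
print(check_subset(nums, 9, (0, 1)))   # False
```

`find_subset` may examine up to 2**n subsets; `check_subset` touches each proposed index once.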

AI devours the first type and struggles with the second. Systems like DeepMind’s AlphaEvolve illustrate why: they generate thousands of candidates, score each precisely, feed back the best, and iterate. When the metric is solid, performance climbs rapidly. When it’s noisy, progress flattens.
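AlphaEvolve applies this loop to real programs with model-proposed edits; the toy below is not that system. It is a minimal hill-climbing sketch on a hypothetical bit-string objective (OneMax: count the 1-bits), assumed here only to show how verifier quality shapes a generate-score-select loop. The same loop runs twice, once scored exactly and once with Gaussian noise; the `sigma` value and all other parameters are arbitrary assumptions.

```python
import random
from typing import Callable, List

Genome = List[int]

def true_score(genome: Genome) -> int:
    """Ground truth for the toy task: the number of 1-bits (OneMax)."""
    return sum(genome)

def make_noisy_scorer(sigma: float, seed: int = 1) -> Callable[[Genome], float]:
    """A weak verifier: the true score plus Gaussian noise on every call."""
    rng = random.Random(seed)
    def noisy(genome: Genome) -> float:
        return true_score(genome) + rng.gauss(0.0, sigma)
    return noisy

def mutate(genome: Genome, rng: random.Random) -> Genome:
    """Propose a candidate by flipping one randomly chosen bit."""
    child = genome.copy()
    i = rng.randrange(len(child))
    child[i] = 1 - child[i]
    return child

def evolve(scorer: Callable[[Genome], float], n: int = 32, children: int = 8,
           generations: int = 200, seed: int = 0) -> List[int]:
    """Generate, score, keep the best, repeat. Returns the true score per generation."""
    rng = random.Random(seed)
    best = [rng.randint(0, 1) for _ in range(n)]
    history = [true_score(best)]
    for _ in range(generations):
        candidates = [mutate(best, rng) for _ in range(children)]
        # Score every candidate once with whatever verifier we were given.
        top_score, top = max(((scorer(c), c) for c in candidates), key=lambda p: p[0])
        # Feed back the winner only if the verifier rates it at least as good.
        if top_score >= scorer(best):
            best = top
        history.append(true_score(best))
    return history

exact = evolve(true_score)
noisy = evolve(make_noisy_scorer(sigma=8.0))
print("exact verifier:", exact[0], "->", exact[-1])
print("noisy verifier:", noisy[0], "->", noisy[-1])
```

With the exact scorer the true score never drops and, on this small problem, reaches the maximum of 32. With heavy noise the selection step is mostly guessing, so the true score typically wanders rather than climbs.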

Verification as the Next Frontier

Many of AI’s fastest gains have come in domains with tight feedback loops: translation (BLEU), classification (accuracy), retrieval (F1), and coding (unit tests). The flip side is that entire industries stay stuck when their metrics are fuzzy or inconsistent.
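Part of what makes these metrics powerful is that they are cheap, deterministic code. A sketch of set-based F1 for retrieval, assuming documents are identified by string IDs (the example IDs are made up):

```python
from typing import Set

def f1_score(retrieved: Set[str], relevant: Set[str]) -> float:
    """Set-based F1: the harmonic mean of precision and recall."""
    hits = len(retrieved & relevant)
    if hits == 0:
        return 0.0  # also covers empty inputs, avoiding division by zero
    precision = hits / len(retrieved)
    recall = hits / len(relevant)
    return 2 * precision * recall / (precision + recall)

print(f1_score({"a", "b", "c", "d"}, {"a", "b", "e"}))  # 4/7, about 0.571
```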

If you can invent a reliable metric, you can accelerate progress.

That’s why a new role is emerging: the verifier engineer—designing grading systems, evaluators, and reward models that close the loop between generation and truth.

AI doesn’t improve where it can’t measure itself. Verification is the real accelerator.

In a world of cheap intelligence, measurement becomes leverage. Whoever defines the metric defines the market.

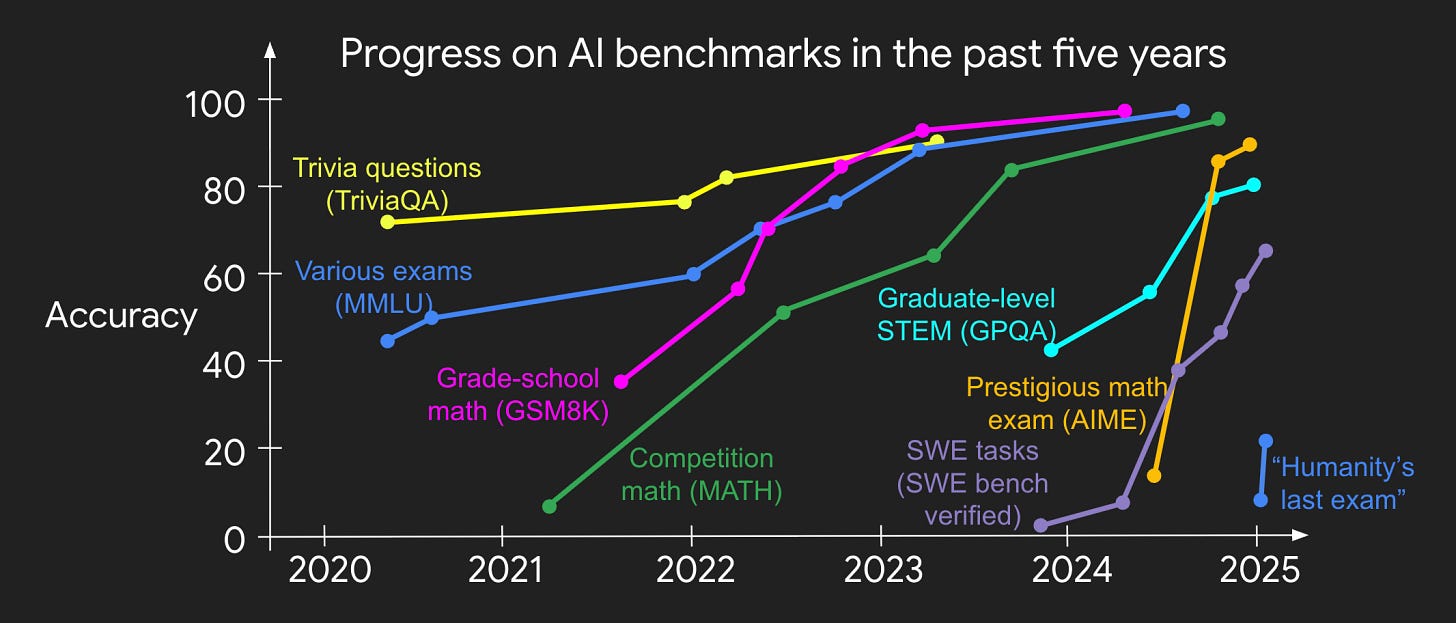

The Jagged Edge of Intelligence

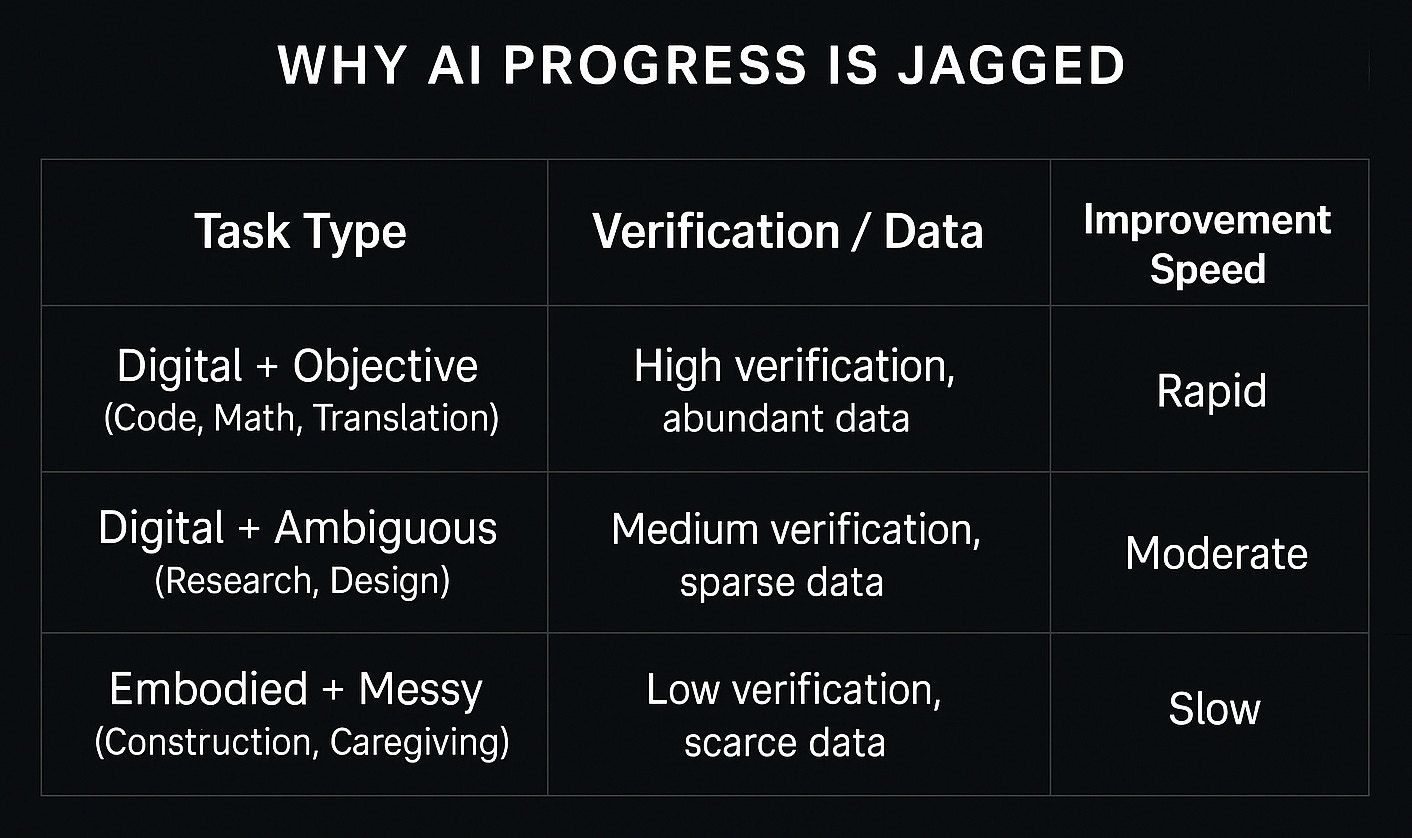

For all the talk of “fast takeoff,” AI’s progress isn’t smooth—it’s jagged.

Some capabilities, like program synthesis or image generation, leap forward. Others—common sense, causal reasoning—barely move. There’s no single progress curve, only a serrated edge advancing unevenly across domains.

Why the Edge Is Jagged

AI’s rate of improvement depends on three things: digitization, measurement, and data.

Digital, data-rich, and objective tasks improve fastest because feedback loops are clear and compute scales easily.

Embodied or subjective tasks lag because data is messy, costly, or hard to label.

This divergence creates AI inequality—a widening gap between digital domains that accelerate exponentially and physical or social ones that remain largely human.

Forecasting the Next Wave

To forecast where AI will move next, ask three questions:

Is the task digital? (Can it be simulated?)

Is it measurable? (Is there a clear definition of success?)

Is it data-rich? (Is there enough data to iterate quickly?)

If all three are true, automation will move fast. If not, progress will slow—until someone invents a better verifier.
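The three questions can be encoded as a tiny checklist. This is a sketch of the article’s heuristic, not a validated forecasting model; the `Task` fields and the yes/no answers for the example tasks are illustrative judgments:

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class Task:
    name: str
    digital: bool     # Is the task digital? (Can it be simulated?)
    measurable: bool  # Is there a clear definition of success?
    data_rich: bool   # Is there enough data to iterate quickly?

def forecast(task: Task) -> Tuple[str, List[str]]:
    """Return 'fast' only if all three answers are yes, plus any failed checks."""
    checks = (("digital", task.digital),
              ("measurable", task.measurable),
              ("data-rich", task.data_rich))
    missing = [name for name, ok in checks if not ok]
    return ("fast" if not missing else "slow", missing)

# Hypothetical example tasks; the yes/no answers are illustrative judgments.
print(forecast(Task("unit-tested code generation", True, True, True)))  # ('fast', [])
print(forecast(Task("logo design", True, False, True)))  # ('slow', ['measurable'])
```

Logo design fails only the measurability test, which matches the earlier point that subjective quality is hard to check.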

The shape of progress isn’t a curve—it’s a coastline, advancing where measurement is strongest.

Understanding that contour helps predict where disruption lands first.

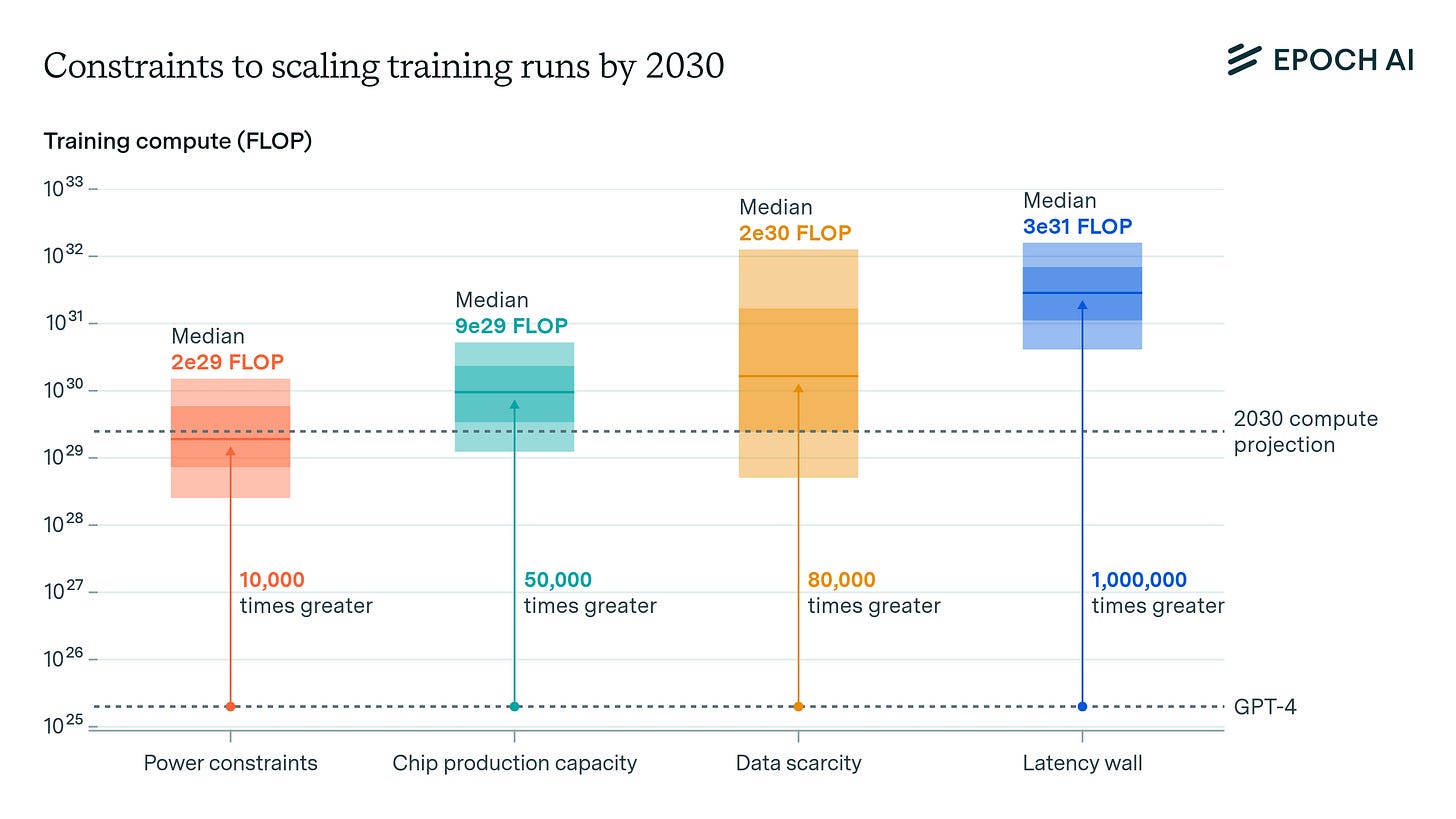

Intelligence as Infrastructure

This all leads to a larger realization: intelligence is becoming infrastructure. Like electricity or bandwidth, it’s becoming cheap, ubiquitous, and quietly essential.

But infrastructure spreads unevenly. Electricity reached cities long before rural areas; broadband reached white-collar work before factories. AI will follow the same path: digital and measurable domains light up first, while embodied or contextual work takes years to catch up.

That’s not failure—it’s structure. Progress has always arrived in waves, not walls.

Closing Thoughts

As intelligence becomes free, advantage shifts from those who possess it to those who can measure it.

The next decade of AI won’t belong to whoever builds the biggest model—it’ll belong to whoever builds the best verifier.

Because once you can measure progress, you can automate it.

And once you can automate it, the cost of intelligence falls toward zero.