Building for the Future: Google's Bet on a World Model

Going beyond the tool race to own the substrate layer of AI computing

Google made a lot of announcements at I/O this year: Deep Think in Gemini 2.5 Pro, Veo 3, the Google AI Ultra plan, Project Mariner, and Project Astra, to name a few. AI features and tools are landing across nearly every corner of the Google ecosystem.

But underneath the surface, it’s clear the company is chasing something much more ambitious: a “world model.”

Not just another foundation model with extra knobs, but the basis of a full-stack AI operating system, one that could define how humans and machines interact for the next decade.

From Model Zoo to Operating System

Demis Hassabis, CEO of Google DeepMind, didn’t mince words: Gemini isn’t just getting smarter—it’s evolving into a system that can simulate the world, plan, and act. Think less autocomplete, more cognitive engine. The goal is to endow Gemini with a brain-like internal model of how the world works—physics, context, causality—not just to understand prompts, but to anticipate needs, chart next steps, and execute them across devices.

That means a new layer in the AI stack: not infrastructure, not just models, but something higher-order. A logic layer. An AI OS.

What a “World Model” Actually Means

The concept of a world model isn’t new—it’s long been a staple of cognitive science and reinforcement learning. In human terms, it’s what lets you look at a messy room and imagine how to clean it, even if you’ve never seen that exact room before. In AI, it means building systems that don’t just pattern-match, but infer, reason, and simulate.
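
To make the idea concrete, here is a deliberately tiny sketch of planning with a world model, in the spirit of the messy-room example. It assumes a hypothetical grid "room" where `#` marks furniture; `ROOM`, `predict`, and `plan` are illustrative names, not anything from Gemini or Genie 2. The point is the loop: the agent never touches the room while planning. It asks its internal model what each action would do, searches over those imagined outcomes, and only then commits to a sequence of moves.

```python
from collections import deque

# Hypothetical toy "room": '#' marks furniture the agent cannot pass through.
ROOM = [
    "....",
    ".##.",
    "....",
    "#...",
]
ACTIONS = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def predict(state, action):
    """Internal world model: predict the next position without acting.
    Its hard-coded 'physics' is that walls and furniture block movement."""
    r, c = state
    dr, dc = ACTIONS[action]
    nr, nc = r + dr, c + dc
    if 0 <= nr < len(ROOM) and 0 <= nc < len(ROOM[0]) and ROOM[nr][nc] != "#":
        return (nr, nc)
    return state  # blocked: the model predicts the agent stays put


def plan(start, goal):
    """Breadth-first search over imagined rollouts of the world model.
    Returns the shortest action list the model predicts will reach goal,
    or None if the goal is unreachable under the model."""
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, actions = frontier.popleft()
        if state == goal:
            return actions
        for action in ACTIONS:
            nxt = predict(state, action)  # simulate, don't act
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, actions + [action]))
    return None


print(plan((0, 0), (2, 2)))
```

Here `plan((0, 0), (2, 2))` returns `['down', 'down', 'right', 'right']`, routing around the furniture before a single real move is made. A real world model would learn something like `predict` from data rather than having it hard-coded, and would search vastly larger spaces; the imagine-then-act loop is the part that carries over.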

For Google, that shows up in prototypes like Genie 2, which generates interactive, dynamic environments from still images or text. Google frames this kind of research as groundwork for future AI agents that can operate in digital and physical contexts autonomously, fluidly, and with foresight.

Why does this matter? Because it’s the difference between a chatbot that responds, and an assistant that proactively gets things done.

The Distribution Game: APIs vs Walled Gardens

One of the clearest signs of Google’s strategic shift is how it’s distributing its world model capabilities. Rather than locking them inside flagship products like Chrome or Android, Google is opening access through the Gemini API. Project Mariner—its agentic browser automation tool—isn’t launching as a Chrome-exclusive. Instead, it’s being handed to partners like UiPath and Automation Anywhere to build their own layers on top.

This isn’t just product strategy. It’s distribution strategy. And in the AI race, distribution is increasingly won through design.

OpenAI’s dominance didn’t start with AGI. It started with a clean, approachable chat box. A tool that felt intuitive. Friendly. Helpful. It turned a cutting-edge model into a default habit. And that’s what every player in the space is now chasing: the interface that becomes invisible. The assistant that blends in. The design that distributes itself.

Google seems to be taking that lesson seriously. Rather than compete feature-for-feature with every vertical, it’s trying to win the substrate: the logic layer that other companies build with.

With over 20 million developers already inside its ecosystem, one of the largest pools of builders in tech, Google doesn’t need to win every product category. If it can convince developers to build on Gemini, then Gemini becomes the standard: quietly, pervasively, by default.

This is the deeper bet: that contextual awareness, thoughtful integration, and platform openness will spread faster than any single killer app. That in the AI era, distribution is no longer about shipping an app—it’s about being the layer every app calls.


That’s a major shift. Most Big Tech players, Apple especially, move toward vertical integration. Google is betting on platform dominance instead. If developers adopt Gemini’s world model logic as their interface layer, Google will own the substrate they all depend on.

The Strategic Stakes: Search, Speed, and the AI OS Crown

But make no mistake—this is also a survival play. Search still funds Google. And in a world where AI agents answer questions, book flights, and summarize documents before you type anything into a browser, the economic model of paid links starts to wobble.

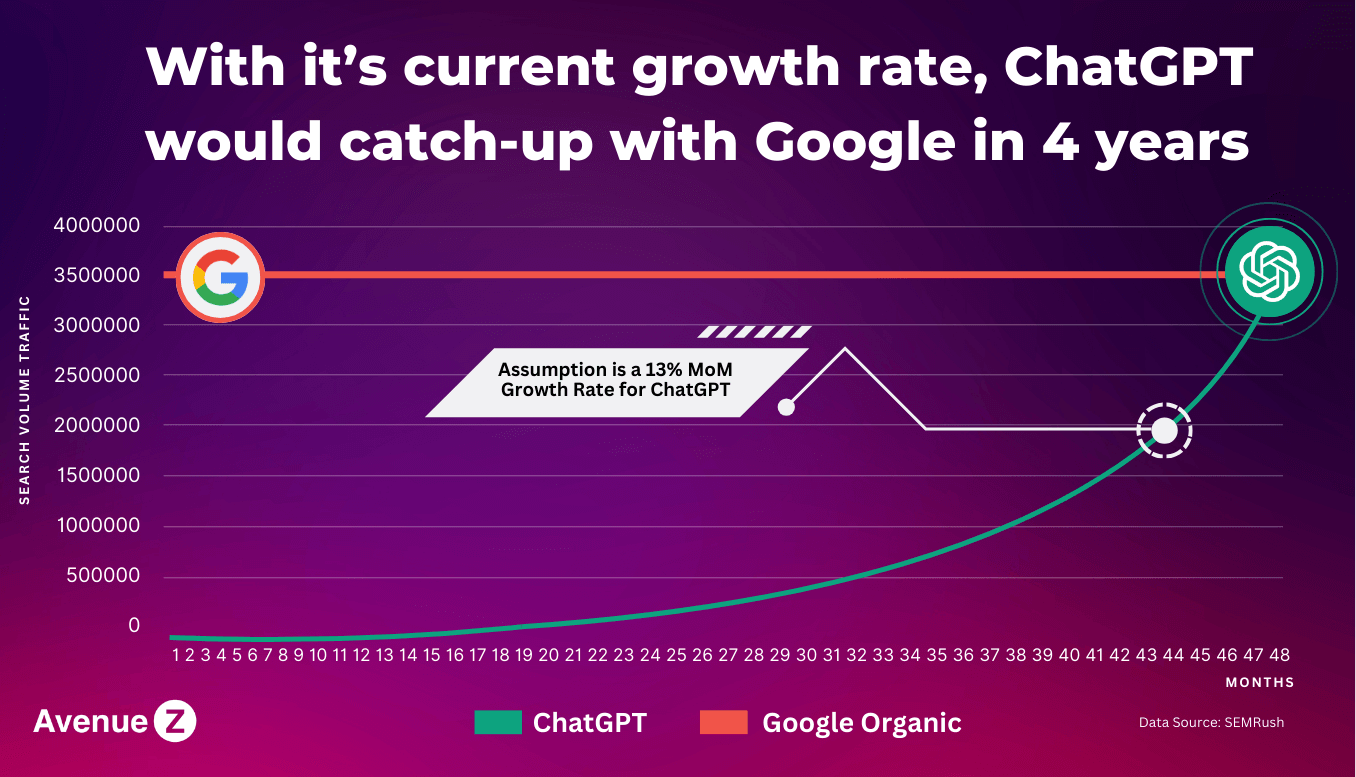

That erosion is already underway. Chat-based AI tools like ChatGPT and Perplexity are capturing real user behavior that once defaulted to Google Search. Why wade through blue links when you can get a concise, context-aware answer in one shot? Even more concerning, some of these platforms are beginning to monetize those interactions with ad units, suggested links, or even commerce integrations. That’s not just traffic leakage. It’s a direct assault on Google’s core business model.

Which is why the world model isn’t just a moonshot—it’s also a moat. By controlling the assistant layer, Google protects its position as the starting point for user intent. And by offering APIs to developers, it’s trying to head off the enterprise penetration that Microsoft is accelerating with Copilot and Azure AI Foundry.

Time, however, is not on Google’s side. The company has recently shown faster execution, with a packed roadmap, strong enterprise adoption, and a solid product cadence, but its rivals are moving fast too. Microsoft’s agentic platform is deeply integrated across Office 365. OpenAI is building its own ambient computing layer and a hardware device with Jony Ive. And with Apple’s AI strategy delayed, the field is open: whoever defines the new interface layer first might lock it in for the next decade.

Implications for Enterprises and Builders

So what does all this mean for enterprise leaders and product teams?

Retooling is likely: If Google’s bet pays off, the UI is no longer an app. It’s an agent. You don’t tap icons; you speak, gesture, or trigger actions contextually. Any software not designed for this paradigm may need to be re-architected sooner than expected.

APIs are the onramp: Gemini’s world model capabilities will flow through APIs. Companies that want to plug into this logic layer—from CRMs to ERP systems—should prepare for deep integration, not just LLM wrappers.

Be pragmatic, not tribal: Google’s world model is visionary but long-range. Microsoft’s Copilot suite is shipping now and fits neatly into existing workflows. OpenAI may reinvent the endpoint, but hardware moats take time. For most orgs, the smart play is diversification—adopt the best of each layer and design for an increasingly open, agentic web.

The Endgame: A New Default Interface

So where does this all lead?

If Google’s world model strategy works, Gemini won’t feel like a product you launch. It’ll feel like infrastructure—ambient, responsive, embedded in the fabric of daily workflows. You won’t open it. You’ll notice when it’s missing.

This is the real goal: to move from assistant-as-app to assistant-as-environment. Instead of launching tools, you’ll express intent—schedule a meeting, summarize a doc, prep for a flight—and the system will coordinate across contexts to make it happen. No interface hunting, no app juggling. Just continuity.

And that shift has enormous consequences—not just for Google, but for everyone building in the ecosystem. Because if the default interface changes, everything built on top of it changes too.

This isn’t a story about model benchmarks or GPT-vs-Gemini debates. It’s a story about what becomes invisible. The layer that captures intent, routes logic, and shapes what gets surfaced first—that’s where the leverage is.

If Google owns that layer, it owns the frame through which every other product is seen.