Bias in AI, Blind Spots & More

How to understand biases and address them in the age of AI products.

As a Product Manager, you need to be able to spot bias and address it in action. Anytime you integrate AI into your product, bias inherently comes with it. Many of us are familiar with common forms of bias like recency bias, confirmation bias, and the sunk cost fallacy, as well as concepts like ‘diversity of thought’ or ‘design thinking’ as methods for addressing these biases. However, understanding biases and how to approach them as part of your product framework is only the first step.

For AI products, the stakes are much higher. The scale PMs now have to contend with brings its own issues: the number of touches is much higher, and the ripple effects can have drastic consequences. At scale, the impact AI products can have is extremely rewarding, but this also means the negative or harmful effects of bias in improperly designed products can be devastating. Robustness and reliability are of paramount importance. AI and its outputs are already being incorporated into every aspect of our lives, and to get the personalization of services and goods we expect, in the time frame we want, the use of AI is absolutely necessary.

How does bias manifest in an AI product?

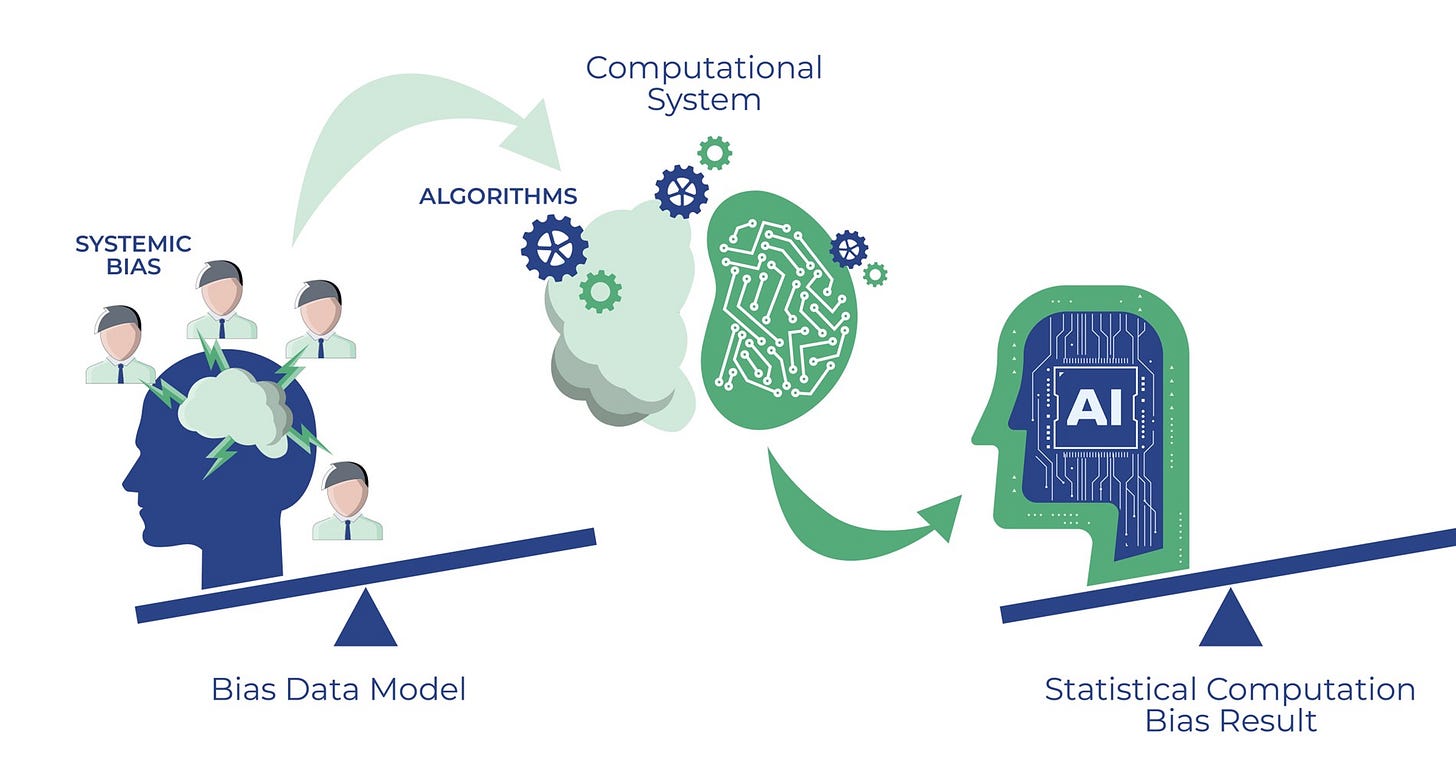

When we think about the nature of AI products and agentic applications, recognizing and addressing bias becomes a much more difficult problem. At the output stage, because of how complex these new products are, it becomes increasingly challenging to identify the root causes and go back and fix them. Additionally, each layer of the stack can compound biases present in the previous layer, making it nearly impossible to untangle and address issues with certainty. You might fix one thing, thinking you solved the issue, but the real problem could be something else entirely. Furthermore, because of the wealth of data AI is trained on, we might over-ascribe objectivity to its outputs – making us blind to underlying issues.

In my role as a PM working with AI on a daily basis, I always remind myself that correctness is largely a coincidence – it’s nothing more than an expression of probabilistic certainty. It’s our responsibility as PMs to consider how inputs shape the outputs, along with thinking critically about how biases can affect every stage of the process.

When we think of bias, in this context, it can be viewed as a systematic error in outputs. There’s some kind of outcome being favored in a way that disadvantages a population. Keeping that definition in mind, here are some sources of algorithmic bias that I think about as part of my AI product framework:

Biased training data. Data comes from systems with inherent bias. Collection and aggregation can introduce overrepresentation or even misrepresentation. There are a number of historical societal biases at play, and past discriminatory practices can become encoded into data over time.

Example: Medical datasets often contain more data from white male patients, potentially leading to worse performance for other demographics. There can also be contextual gaps in data: an MRI recognition system trained mostly on white patients may perform poorly with different demographics.

Proxy discrimination. There are a few definitions for this depending on the context, but generally speaking, proxy discrimination occurs when a facially neutral trait is used as a stand-in for a prohibited trait. I’ll break this down a bit further and explain a few ways this manifests:

Correlated variables. Variables that are seemingly neutral can be accidentally correlated with protected attributes. Historical patterns and an erroneous combination of variables can create hidden connections that disadvantage certain populations.

Example: Say we compile a data set to be used for a BNPL (buy now pay later) app that uses AI to provide interest rates. Zip code data can serve as a proxy for race due to historical housing segregation patterns.
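
One rough way to check whether a seemingly neutral feature is acting as a proxy is to ask how well it predicts the protected attribute on its own. The sketch below uses a tiny made-up dataset; the zip codes, group labels, and the `proxy_strength` helper are all hypothetical, and a real audit would use proper statistical tests on real data.

```python
from collections import Counter, defaultdict

def proxy_strength(rows, feature, protected):
    """Return (baseline, accuracy) for guessing `protected` from `feature`.

    baseline: accuracy of always guessing the most common protected value.
    accuracy: accuracy of guessing the most common protected value
              within each feature value (a simple majority-vote rule).
    """
    overall = Counter(r[protected] for r in rows)
    baseline = overall.most_common(1)[0][1] / len(rows)

    by_feature = defaultdict(Counter)
    for r in rows:
        by_feature[r[feature]][r[protected]] += 1
    correct = sum(c.most_common(1)[0][1] for c in by_feature.values())
    return baseline, correct / len(rows)

# Hypothetical applicants: zip code plus a protected attribute the
# model never sees directly.
applicants = (
    [{"zip": "11111", "group": "A"}] * 9 + [{"zip": "11111", "group": "B"}] * 1 +
    [{"zip": "22222", "group": "A"}] * 2 + [{"zip": "22222", "group": "B"}] * 8
)

baseline, accuracy = proxy_strength(applicants, "zip", "group")
print(f"baseline={baseline:.2f} accuracy_from_zip={accuracy:.2f}")
# prints: baseline=0.55 accuracy_from_zip=0.85
```

A large gap between the two numbers suggests that zip code carries much of the information the protected attribute would, so dropping the protected column alone would not remove the signal.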

Feature selection problems. Even if we remove protected attributes, bias can emerge through interdependent variables that are pattern matched to represent a population.

Example: Imagine we have an AI HR tool used to evaluate resume fit. Even if gender is removed, variables like “part-time work” or “career gaps” may proxy for gender in scoring decisions.

System Learning. AI can create unexpected relationships between variables and pick up on subtle patterns in seemingly unrelated features. In optimizing for a solution, an AI model may find subtle discriminatory patterns.

Example: A lending algorithm used by a bank might “discover” that browser type or device model correlates with socioeconomic status.

Flawed problem formation. AI can be incredibly smart, but it’s only solving the problem that you tell it to solve. If you oversimplify your success metrics, then complex goals can be reduced to single measures and critical dimensions of the solution can be pushed aside.

Example: A medical diagnosis system optimized only for overall accuracy might sacrifice performance on rare but critical conditions affecting small subsets of the population.
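
To make the gap between overall accuracy and per-condition performance concrete, here is a minimal sketch with made-up labels. The condition names and counts are invented for illustration; the point is only that a single headline metric can hide a failure on a rare class.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def recall_per_class(y_true, y_pred):
    """For each true class, the fraction of its cases the model caught."""
    recalls = {}
    for label in sorted(set(y_true)):
        idx = [i for i, t in enumerate(y_true) if t == label]
        recalls[label] = sum(y_pred[i] == label for i in idx) / len(idx)
    return recalls

# Hypothetical test set: 95 common cases and 5 rare but critical ones.
y_true = ["common"] * 95 + ["rare"] * 5
# A model that almost always predicts "common": it catches 1 of 5 rare cases.
y_pred = ["common"] * 95 + ["rare"] + ["common"] * 4

print(accuracy(y_true, y_pred))          # 0.96
print(recall_per_class(y_true, y_pred))  # {'common': 1.0, 'rare': 0.2}
```

Here the model looks 96% accurate overall while missing four out of five rare cases, which is why it helps to report success metrics per subgroup rather than only in aggregate.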

You could also have misaligned optimization targets where AI solves for proxy objectives or short-term metrics that don’t capture the true goal of your product. As an example, a content recommendation system optimized for engagement time might promote divisive or extreme content, ignoring the societal impact.

Feedback Loops. This happens when a system’s outputs influence its future inputs and decisions, creating a self-reinforcing cycle that can amplify initial biases over time. Think of it like a snowball effect. Small skews can become major distortions. For example, a job recommendation system showing high-paying jobs more often to men leads to more men applying and getting hired, which reinforces the pattern in future training data.

We can also have self-fulfilling predictions, where early system predictions influence future outcomes and create a circular confirmation of biased assumptions. Example: a credit scoring system denying loans to certain neighborhoods reduces investment there, leading to economic decline that “validates” the original lower scores.

The bias caused by these feedback loops can be extremely hard to detect because the effects often build gradually over time, and we have multiple factors that interact simultaneously – making it difficult to prove causality.
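
The snowball effect can be illustrated with a toy simulation. This is a sketch under strong assumptions, not a model of any real recommender: `retrain` is a hypothetical update rule in which each retraining round slightly over-weights whichever group already dominates the data, and the `sharpness` parameter that controls this is invented for illustration.

```python
def retrain(share, sharpness=1.3):
    """Hypothetical update: next exposure share for group M.

    `share` is the fraction of high-paying job impressions shown to
    group M. With sharpness > 1 the majority is over-weighted each
    round; sharpness == 1 would leave the share unchanged.
    """
    m = share ** sharpness
    other = (1 - share) ** sharpness
    return m / (m + other)

def simulate(initial_share, rounds, sharpness=1.3):
    """Return the exposure share at the start and after each round."""
    shares = [initial_share]
    for _ in range(rounds):
        shares.append(retrain(shares[-1], sharpness))
    return shares

history = simulate(initial_share=0.55, rounds=10)
for i, s in enumerate(history):
    print(f"round {i:2d}: share shown to group M = {s:.3f}")
```

Under these assumptions a 55/45 starting skew grows every round, while a perfectly balanced start (0.5) stays balanced. No single round looks dramatic, which matches how gradually these effects tend to build.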

What does bias look like in agentic applications?

Now that we’ve considered a few forms of algorithmic bias individually, we can begin to imagine how these forms of bias can overlap and combine in a multitude of ways when there are multiple AI models interacting with each other. Additionally, there’s increasing overlap between human bias and model bias as AI “thinks” more like a human.

Keeping the forms of algorithmic biases mentioned above in mind, here are a few agentic workflow-specific biases. The algorithmic biases will still exist, but the way the issues manifest will have some important distinctions.

Path dependency bias. Agents can get stuck in initial solution patterns, overcommit to early decisions, and then fail to consider alternative approaches once a solution path has been chosen. If an agent first solves a problem using a greedy algorithm, it might keep applying that approach even when dynamic programming would be more optimal.

Example: Let’s consider an agentic hiring system that uses multiple AI agents to screen candidates, conduct initial interviews, and make recommendations.

The system successfully hires several engineers from top-tier tech companies early on. It then establishes a pattern of favoring candidates with similar backgrounds, becoming locked into this “successful hiring pattern”.

However, the underlying foundation model was trained on historical tech industry data and it learned to associate “successful engineers” with certain proxy indicators:

Communication style (favoring native English speakers)

Technical terminology usage (reflecting specific educational backgrounds)

Project descriptions (preferring well-known company frameworks)

The result? The agent appears highly effective at finding good fits, and each successful hire reinforces the chosen path and the proxy indicators. However, it is actually creating a self-reinforcing cycle of decreasing diversity. A candidate from a community college with innovative projects gets consistently passed over for a candidate from a top university with standard projects.

Optimization tunnel vision. In searching for a solution, an agentic workflow unintentionally optimizes for local maxima, while missing global maxima – overemphasizing measurable metrics while ignoring harder-to-quantify factors.

Example: Consider an agentic content moderation system for a social media platform that uses multiple AI agents to review posts, manage user reports, and enforce community guidelines.

The system is optimized primarily for speed of moderation and reduction in reported content. The agents aggressively remove content to maximize these metrics, and at a glance the system appears successful because the number of user reports is decreasing.

However, the underlying foundation model was trained primarily on English-language content coming from Western cultural contexts. The training data was also skewed towards certain types of violations that commonly occurred on platforms with a dominant US user base.

The result? The system appears effective by metric standards, and high removal rates are seen as “successful” moderation, but it is actually aggressively removing posts that use AAVE (African American Vernacular English) or cultural references it doesn’t understand. The metrics show “improvement” while in reality the platform is becoming less diverse, cultural expressions are suppressed, and non-Western communities become less engaged, which harms the overall user experience.

Temporal bias. Agents can have a skewed treatment of time-based decisions. This results in an over-emphasis on immediate outcomes, discounting the benefits of long-term solutions on a broader time-horizon.

Example: Consider an agentic personal finance system that uses multiple AI agents to analyze spending patterns, make investment recommendations, and provide personalized financial planning.

The system heavily weights recent financial behavior, overemphasizes short-term market performance, and makes recommendations based on immediate gains. On a short time horizon, say 3-4 months, the system is actually quite successful and generates a significant immediate profit for the user.

However, the underlying foundation model is learning from user acceptance of its recommendations. Users who follow immediate-reward advice continue to provide positive feedback. The system learns that “successful” advice means quick and immediate returns.

The result? Long-term and sustainable financial planning becomes increasingly undervalued. The agent will continue to push short-term financial decisions that provide an immediate reward. Over time, users churn out of the app as returns inevitably decline and those seeking long-term strategies gradually disengage – creating a data void that fails to support more sustainable revenue streams like loan referrals or asset management fees.

Addressing bias: act early and often

Bias issues mainly occur in two ways: bias in design and bias in evaluation.

Oftentimes, we aren’t being proactive enough or critical enough about bias in our design process. It’s easy to solve for the immediate problem or loudest problem without considering the long-term implications or underlying causes. As PMs, it’s our responsibility to thoroughly interrogate system-level decisions and think about how small actions can affect outcomes down the line. Seemingly innocent issues can snowball into impossible problems very quickly if left unchecked.

Bias also occurs frequently in the evaluation process. When setting metrics for success or determining outcome-related goals, we need to be holistic in our approach. Short-term or immediate wins can seem attractive, but they’re almost always unsustainable. It’s crucial to consider the fairness and ethics of our problem-solving approach, and how this connects to tangible outcomes.

For myself, I like to focus on the following two issues. Taken together, they provide a good framework to surface emerging biases and guide corrective actions:

Interpretability and explainability. When AI systems work together in complex, multi-step processes, tracing how and why they reach specific decisions becomes increasingly difficult. As data flows through multiple layers or stages, each one influences and builds upon the others’ outputs, and the chain of reasoning grows more intricate and opaque.

Understanding these interconnected decision paths is essential not only for validating the system’s choices, but also for ensuring that stakeholders can meaningfully oversee and trust the system’s process. Without clear insight into how a model or system arrives at its conclusions or outcomes, it becomes nearly impossible to identify and address potential biases that may emerge from these interactions.

Robustness and reliability. As AI systems interact and build on each other’s work, their individual biases don’t just add up, they multiply and cascade throughout the system. What begins as a minor flaw in one layer’s processing can snowball as it passes through multiple steps, with each subsequent layer potentially magnifying the error.

This interconnected nature means that ensuring consistent, reliable performance becomes far more complex than with a simpler application. Like a chain reaction, a single unreliable component can trigger a cascade of errors throughout the entire process. The challenge isn’t just about catching individual biases, but understanding how these biases might compound and transform as they flow through the layers of decision making and processing.
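
As a back-of-the-envelope illustration of multiplying versus adding, suppose each stage in a pipeline skews the ratio of favorable outcomes between two groups by a fixed factor. The per-stage factors below are hypothetical numbers chosen for illustration, and real stages will not compound this cleanly.

```python
import math

def compounded_disparity(stage_factors):
    """Overall disparity ratio when each stage's skew multiplies the last."""
    return math.prod(stage_factors)

def additive_estimate(stage_factors):
    """Naive estimate that just sums each stage's excess over 1.0."""
    return 1.0 + sum(f - 1.0 for f in stage_factors)

# Hypothetical pipeline: five stages, each favoring one group by 10%.
factors = [1.10] * 5

print(f"additive estimate:   {additive_estimate(factors):.2f}")    # 1.50
print(f"compounded estimate: {compounded_disparity(factors):.2f}")  # 1.61
```

Even with small per-stage skews, the compounded figure pulls ahead of the naive sum, and the gap widens as more stages are added.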