AI Safety, DeepSeek, Agentic AI & More

Navigating the balance between innovation and responsibility

Here's the thing about AI safety that most articles won't tell you: it's not actually about AI. It's about business risk management, customer trust, and regulatory compliance. The rest is implementation details.

Let me tell you a story about a company called DeepSeek. They built an AI chatbot that became the most downloaded free app on Apple's US App Store. Impressive, right?

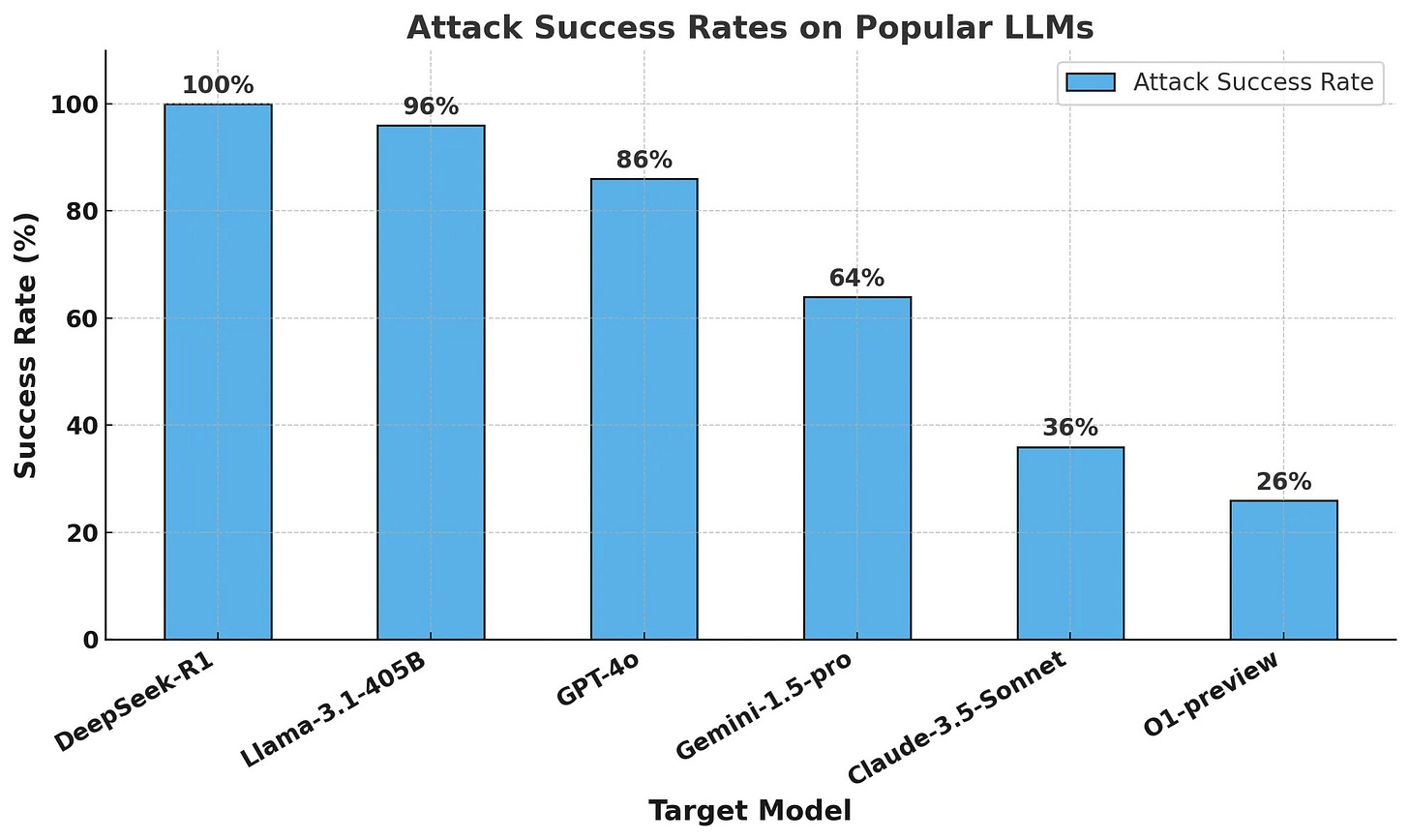

Here's what happened next: security researchers discovered their model had a 100% failure rate at blocking harmful prompts. Not 99%. Not 95%. One hundred percent. Other leading models showed at least partial resistance.

But wait, it gets better: they were transmitting user data without encryption to servers controlled by ByteDance (yes, that ByteDance, the TikTok company). And they had undisclosed connections to China Mobile, a telecommunications company that's banned from operating in the US.

This is what happens when you optimize for the wrong things.

The real cost of “moving fast and breaking things”

The hard truth is that cutting corners on AI safety isn't technical debt. It's an existential risk.

When you skip safety measures to save money, you're not making a technical trade-off. You're making a bet that could sink your company. As AI systems become more deeply integrated into critical areas like healthcare, transportation, and financial services, the risks become more sophisticated and more consequential.

An unsafe system in one of those areas can harm millions of people.

The cost structure of AI safety looks like this:

Implementation: Expensive

Maintenance: Ongoing

Failure: Potentially infinite

If you're thinking "we'll add safety later," you're thinking about it wrong. It's like saying you'll add security to your banking app after you get customers. That's not how this works. That's not how any of this works.

What actually matters in AI safety

Let me break this down into what you actually need to care about:

1. Technical safeguards that actually work

Your AI system needs three things:

Alignment (making sure it does what you want)

Robustness (making sure it keeps doing what you want)

Verifiability (proving it did what you wanted)

Everything else is an implementation detail.
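Of the three, verifiability is the easiest to make concrete. Here's a minimal sketch of one way to approach it, assuming you record each model decision as a small JSON-serializable dict: a hash-chained log where every entry commits to the one before it. The names `append_entry`, `verify_log`, and `GENESIS` are made up for this illustration and don't come from any particular library.

```python
import hashlib
import json

GENESIS = "0" * 64  # assumed placeholder hash that precedes the first entry


def _digest(prev_hash, record):
    # Hash the previous entry's hash together with this record's canonical JSON.
    payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log, record):
    """Append a record, chaining it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev_hash, "hash": _digest(prev_hash, record)})


def verify_log(log):
    """Return True if the chain is intact.

    Detects edited, reordered, or missing entries in the middle of the log.
    It does not detect entries trimmed off the end.
    """
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True
```

If someone quietly rewrites an old decision, `verify_log` returns False. A real deployment would likely also store the latest hash somewhere the application can't modify, which this sketch leaves out.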

2. Governance that doesn’t suck

Most AI governance frameworks look like they were written by a committee of lawyers who've never seen code. Here's what actually works:

Real-time monitoring dashboards (because you can't fix what you can't see)

Health score metrics (because complex systems need simple indicators)

Automated alerts (because humans are terrible at continuous monitoring)

Audit trails (because when things go wrong, and they will, you need to know why)
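To make "health score" and "automated alerts" less abstract, here's a rough sketch. Everything in it is an assumption for illustration: the signals in `WindowStats`, the weights, the latency budget, and the alert threshold are arbitrary numbers you would tune for your own system.

```python
from dataclasses import dataclass


@dataclass
class WindowStats:
    requests: int          # total requests in the monitoring window
    errors: int            # requests that failed
    blocked: int           # requests stopped by a safety filter
    p95_latency_ms: float  # 95th percentile latency in milliseconds


def health_score(s, latency_budget_ms=2000.0):
    """Collapse several signals into one 0-100 score (weights are arbitrary)."""
    if s.requests == 0:
        return 100.0  # no traffic, nothing to penalize
    error_rate = s.errors / s.requests
    block_rate = s.blocked / s.requests
    latency_penalty = min(s.p95_latency_ms / latency_budget_ms, 1.0)
    penalty = 0.5 * error_rate + 0.3 * block_rate + 0.2 * latency_penalty
    return round(100.0 * (1.0 - min(penalty, 1.0)), 1)


def check_alert(score, threshold=80.0):
    """Return an alert message when the score drops below the threshold."""
    if score < threshold:
        return f"ALERT: health score {score} is below {threshold}"
    return None
```

You'd call `check_alert(health_score(stats))` once per window and send any non-None message to whatever paging system you already use. The point is the shape: many signals in, one number out, and a machine watching the number instead of a person.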

3. Human oversight that makes sense

The thing about AI oversight is that most companies get the balance wrong, with less-than-desirable results. They end up with one of:

Too much oversight (making the system useless)

Too little oversight (making the system dangerous)

The wrong kind of oversight (making everyone miserable)

You need a system where humans are in the loop for decisions that matter and out of the loop for everything else.
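One way to sketch "in the loop for decisions that matter" is a small routing function. This is an illustration under assumptions, not a recommended policy: the `Decision` fields, the dollar limit, and the confidence cutoff are all hypothetical, and what counts as "matters" will differ for every business.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_APPROVE = "auto_approve"
    HUMAN_REVIEW = "human_review"


@dataclass
class Decision:
    action: str
    reversible: bool    # can the action be undone cheaply?
    amount_usd: float   # financial exposure of the action
    confidence: float   # model's self-reported confidence, 0.0 to 1.0


def route(d, max_auto_usd=100.0, min_confidence=0.9):
    """Send only high-stakes or low-confidence decisions to a human."""
    if not d.reversible:
        return Route.HUMAN_REVIEW
    if d.amount_usd > max_auto_usd:
        return Route.HUMAN_REVIEW
    if d.confidence < min_confidence:
        return Route.HUMAN_REVIEW
    return Route.AUTO_APPROVE
```

A cheap, reversible, high-confidence action goes straight through. Anything irreversible, expensive, or uncertain waits for a person. Tightening or loosening the thresholds is how you move between "too much" and "too little" oversight.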

The special case of agentic AI

If your AI system can take actions in the real world (booking appointments, making purchases, modifying systems), you're playing a different game entirely.

This isn't like regular AI where the worst case is generating bad content. This is like giving an intern unlimited authority over your production systems.

For me, the non-negotiables for production systems are:

Separation of powers (one system decides, another executes)

Kill switches that actually work

Default behaviors that err on the side of caution

Logging that would make an auditor weep with joy
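Here's a minimal sketch of how those four items can fit together, assuming the model only proposes actions and a separate executor decides whether to run them. The names `execute`, `KILL_SWITCH`, `ALLOWED_ACTIONS`, and the `book_appointment` handler are all hypothetical, chosen for this example.

```python
import logging
import threading

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("agent.audit")

KILL_SWITCH = threading.Event()         # calling set() halts all execution
ALLOWED_ACTIONS = {"book_appointment"}  # hypothetical allowlist


class ActionRejected(Exception):
    """Raised when the executor refuses a proposed action."""


def execute(action, params, handlers):
    """Executor: runs a proposed action only if every check passes."""
    log.info("proposed action=%s params=%s", action, params)
    if KILL_SWITCH.is_set():
        log.warning("rejected action=%s reason=kill_switch", action)
        raise ActionRejected("kill switch engaged")
    if action not in ALLOWED_ACTIONS or action not in handlers:
        # Default-deny: anything not explicitly allowed is refused.
        log.warning("rejected action=%s reason=not_allowed", action)
        raise ActionRejected(f"action not allowed: {action}")
    result = handlers[action](**params)
    log.info("executed action=%s result=%s", action, result)
    return result
```

The deciding system never touches `handlers` directly. It can only ask, and every request, refusal, and result lands in the log. In a real system the kill switch would probably live outside the process (a feature flag or config service, say) so that a wedged agent can't ignore it.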

The business case for AI safety

Let me make this absolutely clear: AI safety is not a cost center. It's a competitive advantage, for three reasons:

Regulatory compliance is coming whether you like it or not. The EU AI Act isn't a suggestion. GDPR isn't optional. Getting ahead of this now is cheaper than retrofitting later.

Customer trust is a moat. When your competitors are explaining their data breaches, you want to be the company that did it right from the start.

Technical excellence compounds. The same measures that make your AI system safer also make it more reliable, more maintainable, and more scalable.

What you should do tomorrow

Audit your AI systems' safety measures. If you don't have any, congratulations, you've identified your first priority.

Implement basic monitoring. At minimum: usage patterns, error rates, and anomaly detection.

Document your safety protocols. If you can't explain your safety measures to a regulator, you don't have safety measures.

Set up proper governance. This means clear ownership, clear procedures, and clear accountability.

Plan for failure. Systems will inevitably fail at some point, and your response shouldn't be improvised. Working through how your system could fail is one of the best ways to make it robust and safe.
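For the basic monitoring step, anomaly detection doesn't have to mean a machine learning project. Here's a rough sketch, assuming you already count requests and errors per interval: compare each interval's error rate to a rolling baseline and flag large upward jumps. The class name `ErrorRateMonitor` and its default window, threshold, and minimum sample count are illustrative guesses, not tuned values.

```python
from collections import deque
from statistics import mean, stdev


class ErrorRateMonitor:
    """Flags an interval whose error rate is far above the recent baseline."""

    def __init__(self, window=20, z_threshold=3.0, min_samples=5):
        self.history = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_samples = min_samples

    def observe(self, requests, errors):
        """Record one interval; return True if it looks anomalous."""
        rate = errors / requests if requests else 0.0
        anomalous = False
        if len(self.history) >= self.min_samples:
            baseline = mean(self.history)
            spread = stdev(self.history)
            if spread > 0:
                # Only upward deviations count; fewer errors is not an incident.
                anomalous = (rate - baseline) / spread > self.z_threshold
            else:
                anomalous = rate > baseline
        if not anomalous:
            # Keep anomalies out of the baseline so they do not mask later ones.
            self.history.append(rate)
        return anomalous
```

Call `observe` once per interval (per minute, say) and page someone when it returns True. It's crude, and it will miss slow drifts, but it's a starting point you can have running by the end of the day.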

The bottom line

AI safety isn't optional. It's not a nice-to-have. It's not something you can bolt on later. It's fundamental to building AI systems that don't blow up in your face.

The companies that get this right will define the next decade of AI. The ones that get it wrong will be case studies in what not to do.

If you're thinking "this seems like a lot of work," you're right. It is. That's the point. AI systems that can meaningfully impact the world require meaningful safeguards. If you're not willing to invest in safety, you shouldn't be building AI systems in the first place.