AI at Escape Velocity: Why the Real Shift Has Just Begun

Key takeaways from BOND’s recently released report on AI trends

The 2025 BOND AI Report delivers a clear signal through the noise: artificial intelligence isn’t easing into the world—it’s slamming into it at full speed.

Adoption is outpacing every previous platform shift. Compute spend is setting records. And the way we work, govern, build, and interact is being redefined faster than institutions can respond.

Keep reading for my take on the report’s key themes and data, as well as a strategic view of where AI is heading, what’s driving it, and why this moment marks the start of something structurally new.

At-a-Glance

AI adoption is outpacing every past tech shift, driven by a surge in users, infrastructure investment, and falling inference costs.

Training costs are skyrocketing, while inference costs are collapsing—driving usage but straining monetization models.

Open-source and geopolitical dynamics (not just model performance) are reshaping market structure and competitive moats.

AI is now physical. It’s embedded in machines, defense systems, and agricultural tools—not just apps and chatbots.

The next billion users will come online via AI-first interfaces, skipping browsers and going straight to agents.

Work is being redefined. Professionals won’t be replaced by AI, but by those who use it reflexively and effectively.

Control over the interface layer—not just the model stack—will determine who captures value.

Change Is Happening Faster Than Ever

There’s a common pattern to major tech shifts: initial breakthroughs, followed by years of uneven adoption. AI is skipping that middle phase. ChatGPT hit 100 million users in two months. Developer tools built on open models are doubling every quarter. Governments are moving from regulation to infrastructure funding in under a year.

It’s not just the rate of change—it’s the compounding layers. Better models, cheaper inference, smarter agents, more data, more users. The result: AI is growing faster than any tech platform we’ve seen, including the internet and smartphones.

AI User + Usage + CapEx Growth = Unprecedented

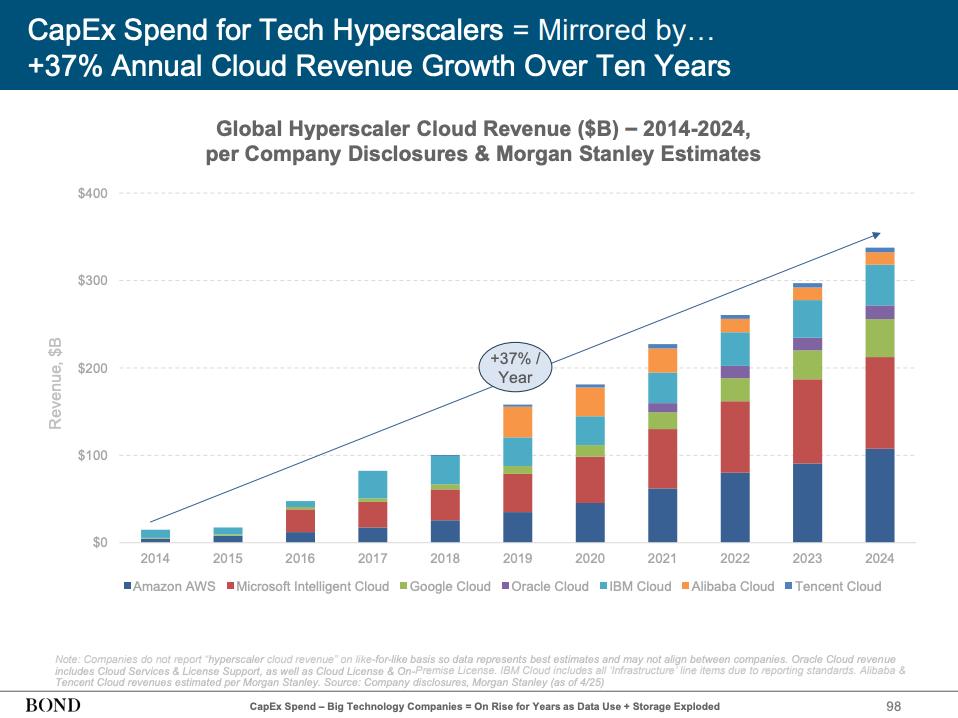

This isn’t a hype cycle—it’s a capital cycle. In 2024, tech giants poured over $200B into AI infrastructure, much of it into compute, power, and chips. NVIDIA’s developer ecosystem has doubled in four years. Model providers are launching not just products but entire datacenters to keep up with demand.

Education, research, and government agencies are ramping up too. AI isn’t just a Silicon Valley play anymore; it’s a sovereign asset. Countries are investing in AI the way they once did in electricity or broadband. For the first time, students are learning research methods with AI, and public policy is being drafted with help from AI copilots.

And the tools themselves are evolving fast. Yesterday’s chatbots gave answers. Today’s AI agents complete tasks. Soon, they’ll manage workflows across systems without a human in the loop.

AI Compute Costs Are High—But Inference Is Getting Cheap Fast

Model training costs are now regularly exceeding $100 million. For top-tier LLMs, we’re already talking billions. But here’s the twist: while training gets more expensive, running the models—called inference—is getting radically cheaper.

NVIDIA’s Blackwell GPU uses roughly 100,000x less energy per token than its 2014 predecessor. Combined with algorithmic improvements, that has helped drive per-token inference costs down 99.7% in just two years. That’s not a margin improvement; it’s a cost collapse.
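To put the 99.7% figure in perspective, here is a quick worked calculation. The symbols are illustrative: C₀ stands for whatever a given workload cost to run two years ago and C₁ for what it costs today. Neither is a number taken from the report.

$$
C_1 = (1 - 0.997)\,C_0 = 0.003\,C_0
\qquad\Longrightarrow\qquad
\frac{C_0}{C_1} = \frac{1}{0.003} \approx 333
$$

In other words, the same tokens now cost roughly 1/333 of what they did. A hypothetical workload that cost 1,000 dollars to run two years ago would cost about 3 dollars today.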

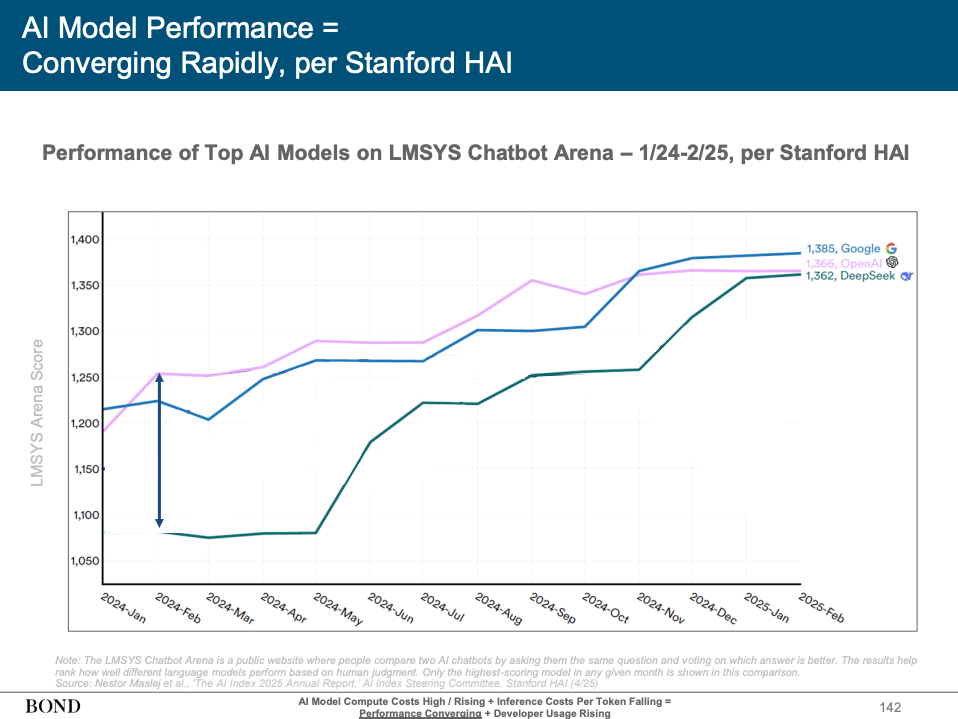

And it’s changing developer behavior. Builders no longer need access to GPT-4 to get strong results. Local models or cheaper APIs are often "good enough." We’re seeing the start of a developer-led explosion—cheaper tools, faster iteration, and more infrastructure abstraction. Model performance is converging. Usage is diverging.

AI Usage, Cost, and Losses Are All Scaling—Together

In typical disruption cycles, growth and monetization rise together. Not this time. AI usage is growing faster than revenue can keep up. Startups are spending millions per month on inference. Even giants like OpenAI and Anthropic are balancing explosive growth with equally explosive costs.

It’s not just about scale; it’s about physics. Chips, cooling, power, and land are all constraints now. Data centers are being treated like strategic assets. The supply chain for electrical transformers, GPUs, and turbines is under pressure.

The flywheel is clear: more usage → more compute → more cost → more infrastructure. Rinse and repeat.

Monetization Faces Three Threats: Competition, Open-Source, and China

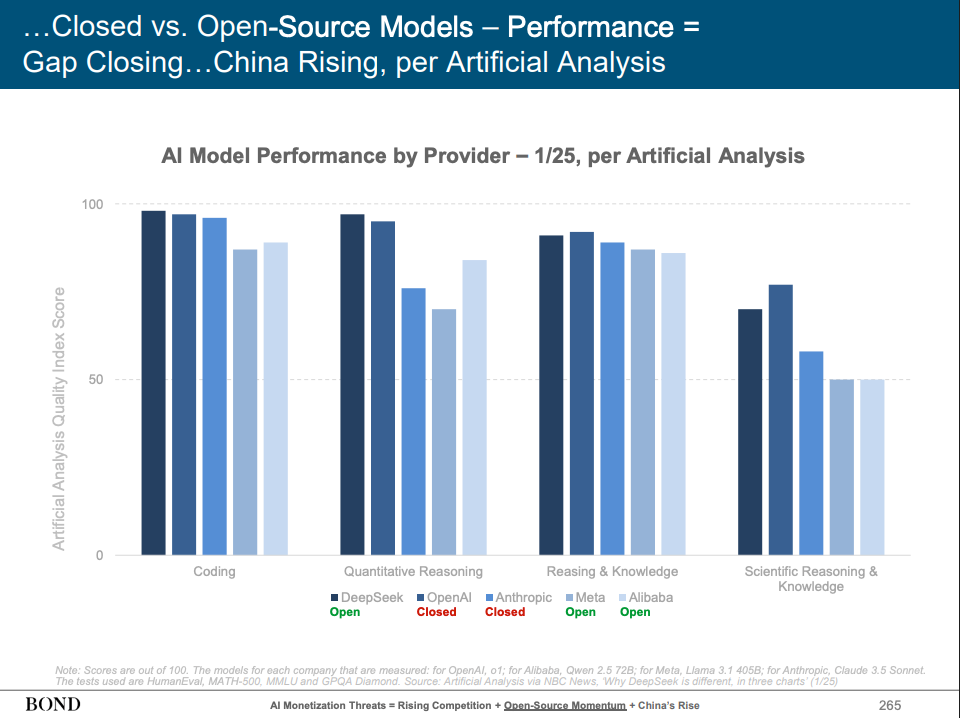

The first threat is commoditization. If every model gets “good enough,” pricing power vanishes. Developers will choose the cheapest or fastest option, and for many tasks they already do.

The second is open-source. Llama, Mistral, and DeepSeek are leveling the playing field. While closed models dominate enterprise and consumer usage today, open models are making rapid gains—especially in developer ecosystems and sovereign deployments.

The third is geopolitical. China is scaling its own AI ecosystem—top apps, closed infrastructure, and national alignment. ChatGPT dominates globally, but not in China, where homegrown players like ERNIE and Kimi lead. AI isn’t just a tech race—it’s a geopolitical one. Chips, data centers, and foundation models are now pillars of national strategy.

AI in the Physical World Is Scaling Just as Fast

We often focus on chatbots and copilots, but the real frontier might be kinetic. AI is now embedded in physical systems: drones, tractors, defense networks. Companies like Anduril are deploying autonomous edge intelligence. Carbon Robotics is using computer vision models to target weeds in the field, cutting farmers’ reliance on herbicides.

The shift is profound. What used to be a static capital asset (a drone, a sensor, a car) is now a software endpoint. AI turns machinery into software that can be updated and improved continuously. The real-world ramifications go far beyond pixels.

Global Internet Growth—Now Powered by AI from the Start

Roughly 2.6 billion people are still offline. But that’s changing, and fast. Low-Earth-orbit satellites are bringing connectivity to new corners of the globe. And when these users come online, they won’t be met with search bars or static pages. They’ll start with AI.

Imagine a first internet experience where the user talks to the web, in their native language, through a multimodal agent. They won’t need apps—they’ll need a single interface that understands their intent. For this cohort, the web starts with agents, not browsers. It’s a once-in-a-generation platform reset.

The Evolution of Work Is Real—and Rapid

Let’s be clear: AI won’t replace all jobs. But it will redefine what we mean by “work.” Traditional knowledge work (reading documents, making decisions, routing requests) increasingly falls within AI’s core competence. The role of the human? Shifting upstream.

In this new configuration, humans will supervise, refine, and teach machines. The rise of reinforcement learning from human feedback (RLHF) is already creating new labor markets. Entire companies are forming around the idea of teaching machines how to think.

Productivity is no longer just about hours—it’s about compute. Data centers are becoming proxies for labor supply. And reflexive AI usage is now table stakes: you won’t be replaced by an AI—but by someone using it better than you.

The Genie Is Not Going Back in the Bottle

Imagine going a week without the internet. For most, it’s unthinkable—every task, transaction, and conversation now runs through the web. In a decade or two, we’ll likely say the same about AI.

What started as academic research is now embedded infrastructure. AI powers discovery in labs, efficiency in factories, and decision-making in boardrooms. It’s accessible on every mobile phone and being embedded into every interface. Tools that once required PhDs are now drag-and-drop. Agents are replacing dashboards. And inference costs are falling so fast that scale is no longer a luxury—it’s a given.

Capital is flowing just as fast. Chipmakers, hyperscalers, and nation-states are all building out the compute layer with urgency. That urgency isn’t just about economics. It’s about sovereignty, influence, and control. The U.S.–China rivalry over AI systems is no longer theoretical—it’s defining global tech posture, industrial policy, and even military planning.

Meanwhile, AI is reaching places even the web never could. Satellite internet and AI-native apps are making the next billion users not just digital-first—but AI-first. Their first interface won’t be a search bar. It’ll be a conversation.

And at work, AI is altering the unit economics of labor itself. We’re entering a world where a data center is a workforce—and where intelligence is not just a feature, but the operating system.

The genie is out. And in this new game, speed isn’t optional—it’s survival.