AI Agents, the New SaaS, & More

How AI agents are creating the next era of SaaS applications

Welcome to “The Neural Network”, a weekly newsletter where I provide insights into the world of AI and present actionable ideas to help you become a good AI leader.

Key insights for AI leaders

The rise of “Service-as-a-Software” is enabled by AI’s ability to perform complex reasoning through “agentic workflows” that go beyond simple pattern matching. This allows AI to tackle a wider range of tasks and problems.

The economics of AI are driving this shift: costs are falling much faster than Moore’s Law would predict, while infrastructure and models are becoming more accessible, allowing startups to compete with incumbents.

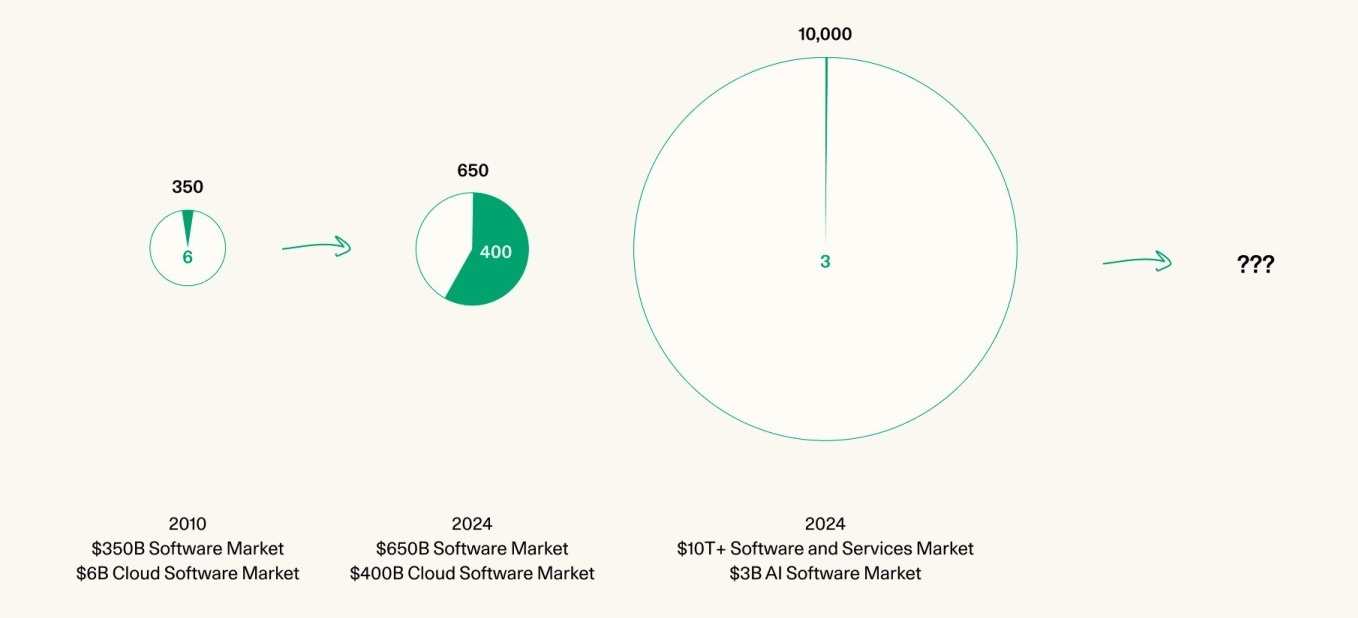

Service-as-a-Software targets the massive services market ($10T+) rather than just the software market, with companies selling AI-powered “labor” on a per-outcome basis rather than a per-seat model.

This transition is creating a new cohort of “agentic applications” that integrate multiple AI models and tools to automate and augment human work, with the potential to dramatically boost productivity and lower costs across industries.

A new era of SaaS

OpenAI’s new o1 model leaked last Friday and the ramifications are wild. Agentic workflows, combined with the burgeoning ability of “stop and think” models to perform complex reasoning, are enabling a new “service-as-a-software” model that targets the massive services market rather than just the software market.

Of course, none of this happened in a vacuum. Over the last two years, at the foundation layer, the Generative AI market has stabilized. Only scaled players with robust economic engines and access to vast sums of capital remain in play. The focus is now on the development and scaling of the reasoning layer.

Let’s unpack what this all means and how it will impact the next generation of AI companies.

Why now?

The shifting economics of AI

AI is declining in cost faster than any technology ever measured. The cost of operating AI models is halving every 4 months—which is 4-6x faster than Moore’s Law. It’s clear that the future of AI will be accompanied by increasingly cheap and plentiful next-token predictions. We also now have a plentiful assortment of open source models, the growth of which has been accompanied by the commoditization and increasing availability of compute.
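To make that arithmetic concrete, here is a quick back-of-the-envelope sketch. The 4-month halving period is the figure quoted above; reading Moore’s Law as a doubling every 18 to 24 months is an assumption made here for the comparison, and the function name is purely illustrative.

```python
def relative_cost(months: float, halving_period_months: float) -> float:
    """Fraction of the starting cost that remains after `months`."""
    return 0.5 ** (months / halving_period_months)


AI_HALVING_MONTHS = 4              # figure quoted in the text
MOORE_DOUBLING_MONTHS = (18, 24)   # assumed reading of Moore's Law

# How many AI cost halvings fit inside one Moore's Law doubling period.
speedups = [period / AI_HALVING_MONTHS for period in MOORE_DOUBLING_MONTHS]

cost_after_one_year = relative_cost(12, AI_HALVING_MONTHS)
cost_after_two_years = relative_cost(24, AI_HALVING_MONTHS)

print(f"Speedup vs. Moore's Law: {speedups[0]}x to {speedups[1]}x")
print(f"Cost after 1 year: {cost_after_one_year:.1%} of today's")
print(f"Cost after 2 years: {cost_after_two_years:.2%} of today's")
```

Under these assumptions, three halvings per year leave costs at one eighth of their starting level after twelve months, which is where the "4-6x faster" range comes from.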

Market consolidation and infrastructure availability

The early hypothesis at the outset of the Generative AI market was that a single model company would eventually capture all of the market share and subsume all other applications. However, this has clearly not been the case. There’s plenty of competition at the model layer and there doesn’t appear to be a clear pathway for a single winner to emerge.

I say this because the productization of models has yet to be fully realized. Apart from one-offs like ChatGPT, we know now that the implementation of LLMs and Generative AI is messy and complex—especially at an enterprise scale. Also, we’ve seen the structure of AI teams change drastically over the course of the last two years. It’s better to let researchers research. Finding PMF is no easy task, and is a role better left for product teams and developers rather than AI researchers.

By now, we’ve seen the eye-watering fundraises by companies like OpenAI and Anthropic ($6.6B at $157B and $7.6B at $40B respectively). It’s become apparent that funding the research and development of generative AI models, along with creating and supporting the necessary compute infrastructure, requires substantial amounts of capital only available to a small number of big players. In the absence of a breakout product, it is nearly impossible to continuously replenish the war chest necessary for a startup to overcome the current incumbents.

Lucky for us, at the application level, model democratization and the advent of inference clouds have leveled the playing field, making it possible for any new company to spin up and deploy models at scale without the need for costly local clusters.

The reasoning revolution

“Thinking longer about what the capital of Bhutan is doesn’t help” -Noam Brown

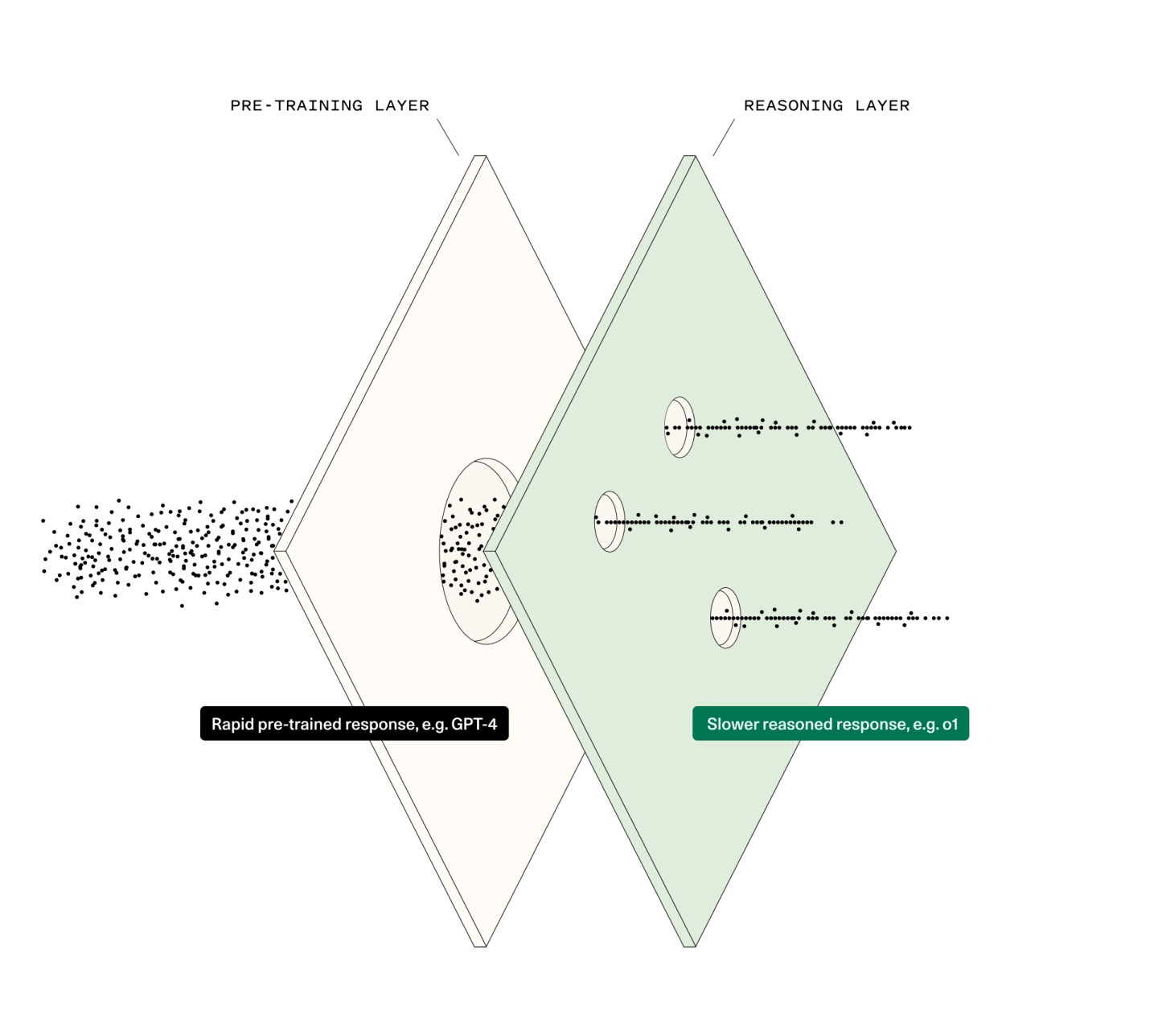

We can think of AI capabilities as developing in two stages. First came “System 1”, which used billion-plus parameter models to deliver pre-trained, instinctual responses. Now, with “stop and think” systems like o1 and AlphaGo, we’re in the “System 2” stage, where the aim is to endow AI systems with deliberate reasoning, problem-solving and cognitive operations that go beyond rapid pattern matching. It’s not enough for models to simply know things; they need to pause, evaluate and reason through decisions in real time.

This shift from pattern matching to chain-of-thought reasoning enables models to think through complex novel situations, especially those out of sample. Essentially, we’re mimicking the way a human thinks about solving a specific task - breaking down the larger task into a series of discrete tasks, rather than just looking for a singular generalized answer.

This type of chain-of-thought reasoning introduces the concept of an agentic workflow, which is iterative, takes feedback into account, and has the model think and reason much as a human agent would. We can further break down this concept into four main agentic reasoning design patterns:

Reflection: asking an agent to complete a certain task, and then reprompting the agent to reflect on its answer

Tool use: the agent utilizes outside resources or interacts with outside applications to improve the returned response

Planning: the agent determines sequences of actions to achieve its instructed goal

Multi-agent collaboration: placing multiple agents, each with specific contexts, in communication with each other to complete a larger task
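To give a feel for how small these patterns can be in code, here is a minimal sketch of reflection plus planned tool use. Everything in it is hypothetical: `toy_model` is an offline stand-in for a real LLM call, and `reflect`, `run_plan`, and the `TOOLS` registry are illustrative names, not part of any framework.

```python
from typing import Callable

Model = Callable[[str], str]  # hypothetical interface: prompt in, text out


def toy_model(prompt: str) -> str:
    """Offline stand-in for an LLM so the sketch runs without any API."""
    if prompt.startswith("CRITIQUE"):
        return "OK" if "Thimphu" in prompt else "Name the city."
    if prompt.startswith("REVISE"):
        return "The capital of Bhutan is Thimphu."
    return "Bhutan has a capital."


def reflect(model: Model, task: str, max_rounds: int = 2) -> str:
    """Reflection: draft an answer, ask for a critique, revise until approved."""
    draft = model(f"TASK: {task}")
    for _ in range(max_rounds):
        critique = model(f"CRITIQUE: {task}\nDRAFT: {draft}")
        if critique.strip() == "OK":
            break
        draft = model(f"REVISE: {task}\nDRAFT: {draft}\nFEEDBACK: {critique}")
    return draft


# Tool use: a registry of plain functions the agent is allowed to call.
TOOLS: dict[str, Callable[[str], str]] = {
    "word_count": lambda text: str(len(text.split())),
    "upper": lambda text: text.upper(),
}


def run_plan(plan: list[tuple[str, str]],
             tools: dict[str, Callable[[str], str]]) -> list[str]:
    """Planning: execute an ordered list of (tool_name, argument) steps."""
    return [tools[name](argument) for name, argument in plan]


answer = reflect(toy_model, "What is the capital of Bhutan?")
results = run_plan([("word_count", answer), ("upper", "done")], TOOLS)
print(answer, results)
```

Multi-agent collaboration follows the same shape: several `Model` callables, each given its own context, passing drafts and critiques to one another instead of a single model critiquing itself.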

With these new agentic workflows and reasoning design patterns, the set of tasks that AI can do will dramatically expand—leading to specific services that can write the right software for each particular problem.

Understanding service-as-a-software

Agentic reasoning is what makes the current AI transition one of service-as-a-software: software companies can now turn labor into software.

You might be thinking: what’s the difference? Don’t we already have programmers writing software?

Let me explain. Think back to the cloud transition, which is where we saw the introduction of software-as-a-service. In this business, a company may sell access to its platform or tool, but customers are still responsible for using that tool to achieve the desired outcome. Software companies became cloud service providers, selling software on a $ per seat basis.

In the services business, responsibility for achieving the desired outcome sits with the company selling the service. Now, with agentic reasoning, AI companies are selling labor on a $ per outcome basis. For example, instead of QuickBooks, you offer tax services—in this case, done by an AI accountant. You have a job to be done. The agent does it. The AI company gets paid accordingly.
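The difference between the two billing models is easy to see with a toy calculation. The prices and volumes below are hypothetical, chosen only to show the shape of each model, and the function names are illustrative.

```python
def per_seat_revenue(seats: int, price_per_seat: float) -> float:
    """SaaS-style billing: revenue depends on licensed seats, not on work done."""
    return seats * price_per_seat


def per_outcome_revenue(outcomes: int, price_per_outcome: float) -> float:
    """Service-style billing: revenue depends on the number of completed jobs."""
    return outcomes * price_per_outcome


# Hypothetical numbers for illustration only.
SEATS, PRICE_PER_SEAT = 10, 50.0
PRICE_PER_OUTCOME = 2.0

for outcomes in (200, 400):  # the customer doubles its workload
    seat = per_seat_revenue(SEATS, PRICE_PER_SEAT)
    outcome = per_outcome_revenue(outcomes, PRICE_PER_OUTCOME)
    print(f"{outcomes} jobs: per-seat ${seat:.0f}, per-outcome ${outcome:.0f}")
```

When the customer’s workload doubles, per-seat revenue stays flat while per-outcome revenue doubles with it, which is why the vendor’s income tracks the value it actually delivers.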

What does this mean for AI startups?

The addressable market is no longer just the software market, but the services market measured in the trillions of dollars. This is a massive opportunity because the services market spans numerous industries and functions.

Take, for instance, enterprise companies, where services get chopped up into IT services and business process services. Instead of outsourced IT services teams, you can have AI customer service agents, AI troubleshooters, and AI assistants for any type of problem. On the business process services side, repetitive tasks such as data entry, audio transcription and account settlement can all be serviced by AI agents. For sales and marketing, AI can replace the traditional SDR: sourcing and writing outbound emails, scheduling demos and sending follow-up emails.

Because compute is becoming more widely available and models are far more accessible, startups are on an even playing field in terms of developing products that compete with the offerings of existing SaaS companies. With companies like Lambda or Foundry offering on-demand GPU cloud computing, you no longer need to purchase and maintain a costly local server. As more companies continue to enter this space, it’s inevitable that the cost of compute will continue to fall. On the model side, a quick look at Hugging Face shows that there are more than a million open source models available for use. Combined with an open source agentic framework like LangChain or Camel, you can spin up an AI agent in a matter of days.

At the application level, it’s no longer necessary to deal with creating foundation models tailored for specific use cases. Foundation models are messy, enterprises can’t integrate a black box, and consumers will find it difficult to navigate cumbersome workflows. You might recall the early days of LLM products that were essentially “wrappers”: a repackaged, user-friendly way for consumers to harness the capabilities of AI. At the outset, many of these wrapper-type products were just simple apps with a clean and intuitive UI where the developer had incorporated some basic integrations and done the prompting and RAG legwork for you.

With advancements in LLM capabilities, the next evolution of wrappers is building “cognitive architectures”. These “cognitive architectures” are similar to wrappers in that they provide user-friendly UIs on top of a foundation model, but that’s just about where the similarities end. Beyond the UI component, cognitive architectures are actually sophisticated architectures that typically include multiple foundation models and application logic capable of handling complex workflows. This is also accompanied by the necessary infrastructure to support enterprise scaling such as vector and/or graph databases for RAG as well as guardrails to ensure compliance.
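As a rough illustration of what sits behind the UI, here is a minimal sketch of such an architecture: two models, a retrieval step, routing logic, and a guardrail. All names are hypothetical, the lambdas stand in for real foundation models, and the keyword-overlap retrieval is a deliberately naive placeholder for a vector or graph database.

```python
from dataclasses import dataclass
from typing import Callable

Model = Callable[[str], str]  # hypothetical interface: prompt in, text out


@dataclass
class CognitiveArchitecture:
    fast_model: Model        # cheap, one-shot answers
    reasoning_model: Model   # slower "stop and think" answers
    documents: list[str]     # stand-in for a vector or graph database
    blocked_terms: tuple[str, ...] = ("ssn",)  # toy compliance guardrail

    def retrieve(self, query: str) -> str:
        """Naive RAG step: return the document sharing the most words with the query."""
        words = set(query.lower().split())
        return max(self.documents,
                   key=lambda doc: len(words & set(doc.lower().split())))

    def answer(self, query: str, needs_reasoning: bool) -> str:
        """Guardrail first, then retrieval, then route to the appropriate model."""
        if any(term in query.lower() for term in self.blocked_terms):
            return "Request blocked by guardrail."
        context = self.retrieve(query)
        model = self.reasoning_model if needs_reasoning else self.fast_model
        return model(f"Context: {context}\nQuestion: {query}")


arch = CognitiveArchitecture(
    fast_model=lambda prompt: "fast: " + prompt.splitlines()[0],
    reasoning_model=lambda prompt: "reasoned: " + prompt.splitlines()[0],
    documents=["Refund policy: refunds within 30 days.", "Shipping takes 5 days."],
)
reply = arch.answer("How long does shipping take?", needs_reasoning=False)
print(reply)
```

In a real product, the routing decision, the retrieval store, and the guardrails would each be far more involved, but the division of responsibilities would look broadly similar.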

A new type of AI company

So you might be asking—how does this work in practice?

Let’s imagine a company that provides AI immigration lawyer agents. These agents are trained on legal materials such as law textbooks, case law, and procedural best practices. They have essentially become subject matter experts in the field of immigration law. This company can now sell the labor of their agents to an immigration law firm to handle general inquiries or low-fidelity high-touch cases.

For example, say you had a problem with your visa eligibility but weren’t sure what to do next. First, you would prompt the agent with your visa problem and provide it with a PDF of your application. Next, the agent would process your query, decide the appropriate response based on the data it was trained on, and then respond with information regarding your visa problem or ask you further questions. Then, depending on the nature of your problem, the agent could provide you with the appropriate supplementary documents, recommend the best approach for fixing your visa application or even flag other issues with your application before you re-submit it for review.

By utilizing an agent for the initial intake process, rather than a human lawyer, the immigration law firm can handle a higher volume of cases with the same number of employees, while freeing up time for their human attorneys to dedicate their attention to important matters. In this example, the AI company gets paid per resolution; there’s no such thing as “a seat”. The outcome-oriented approach aligns the cost of deploying the agent with the associated business value. Compared to a traditional SaaS product, the AI company in this instance doesn’t have a financial motivation to limit usage of their product and can grow alongside their customers. In fact, they would generally encourage more usage of their product since more use helps them gather better data.

As a whole, the product opportunity for agentic applications isn’t some all-encompassing complex software-as-a-service that requires significant configuration and customization. Rather, the AI company is creating a flexible agent, with the ability to reason and think within a specific domain of expertise, capable of navigating automatable pools of work.

Closing thoughts

Although we’re still in the early stages, agentic applications will quickly become much more sophisticated and robust. Deeper integration of AI tools has the potential to improve the productivity of existing employees, and we can foresee a near future where AI agent to AI agent interactions, in combination with biological agents, enable a substantial increase in output.

Going back to the realm of AI infrastructure and model development, there will still be a strong emphasis on improving reasoning ability and inference-time compute, but as we’ve seen by now, the tools are already available for startups to build successful products with tangible applications. This current form of availability and accessibility will continue to change how we think about productization and segmentation for AI-native applications. Sometimes we’ll want quick one-shot answers. In other cases, we’ll want our model to think for longer and provide a higher quality answer.

Needless to say, we’re clearly in a new era of SaaS companies. The AI ecosystem looks very different from what it was only 2 years ago. The advent of agentic applications is bringing down the cost of services, coinciding with the plummeting cost of inference, unlocking massive market opportunities. That being said, one thing that comes to mind is this idea that “today is the worst AI will ever be”. From what we’ve seen so far with the rapid pace of growth in the AI space, the time horizons are continuing to shrink and at this point the only limitation is our imagination.